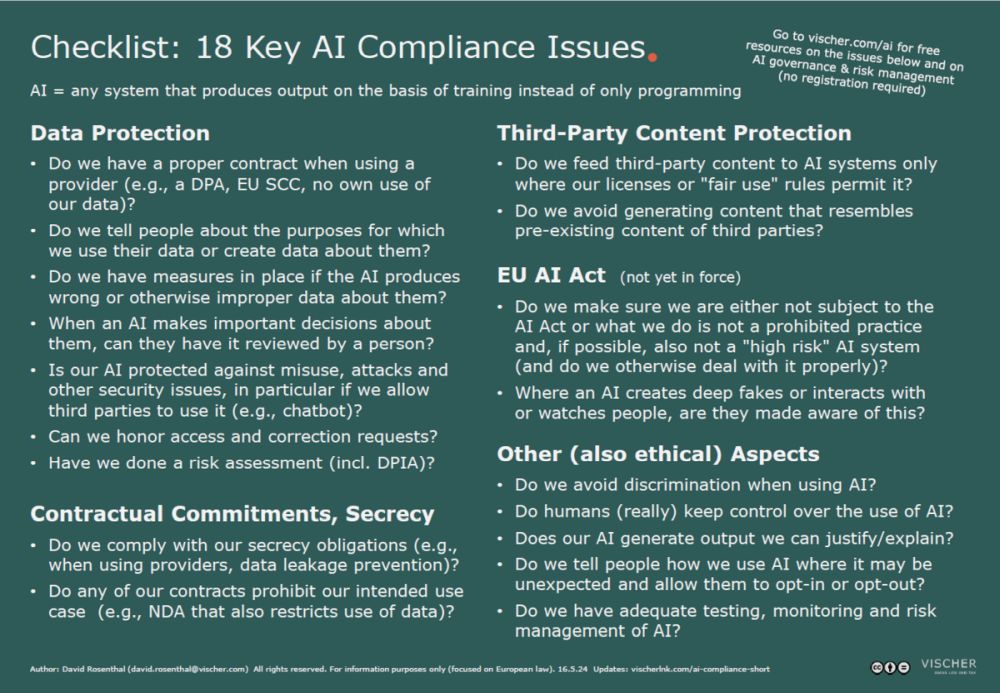

The use of artificial intelligence raises numerous compliance issues. Data protection, copyright and the AI Act come to mind, as do other topics such as non-discrimination and explainability. For part 18 of our AI blog series, we have summarised the 18 most important points on one page.

In practice, a technical discipline such as artificial intelligence (AI), which offers many possible applications, also raises numerous legal issues. It is therefore no surprise that many people responsible for compliance in the corporate environment would like some guidance on what they should be focussing on. On a one-pager (German, English), we have compiled 18 key questions that should be asked when assessing an AI tool or an AI project for compliance purposes (at least in Europe):

These questions are divided into five areas and we have published further material on most of them:

- Data protection: This is undoubtedly the most important area in practice and also the one that requires the most compliance work. In our experience, the focus here is on checking the contracts with the providers (on which most projects rely). We have already published a checklist for this in our blog post no. 15. In practice, the main task is to conclude a data processing agreement that meets the legal requirements (including, where necessary, provisions for international data transfers) and also ensures that the provider does not use your data for its own purposes. Our blog post no. 2 analysed the offerings of the three most important providers. In second place is creating the necessary transparency; we covered this in blog post no. 16 and wrote a sample AI declaration. In third place is the responsible handling of AI-generated output, which primarily involves critically reviewing its accuracy, completeness and appropriateness for its intended purpose. This should be reflected in internal instructions and training. We have published a sample video in several languages in blog post no. 1 and provided sample instructions in blog post no. 3. On risk assessment, we refer you to our blog post no. 4 (where we also present a free tool for this purpose), and on security, we have explained key AI-specific risks in blog post no. 6. We will discuss how data subject rights can be fulfilled in a future blog post; for now, our blog post no. 17, where we describe in detail what a large language model actually contains, may be helpful. We will also look at automated individual decisions by AI in more detail in an upcoming blog post; such decisions are still rather rare in practice, but they are regulated by data protection law.

- Contractual obligations, confidentiality: The focus here is on confidentiality obligations and restrictions on use. In the B2B context, many companies today sign non-disclosure agreements (NDAs) that not only restrict or even prohibit the disclosure of information received from customers, partners, etc., but also its use for any purpose other than that of the contract. This can, for example, prevent such information from being used to develop the company's own AI models. An NDA will not normally prohibit the use of AI, even where providers are involved, provided that the provider itself is subject to an appropriate confidentiality obligation (which, unfortunately, is not yet a matter of course). However, if the data is subject to classic official or professional secrecy (e.g. at banks, hospitals, public administrations or law firms), involving a provider requires special contractual conditions and protective measures, which are still a challenge for many AI providers (companies therefore prefer established cloud solutions such as Microsoft). See the above-mentioned blog post no. 15 with our checklist. Contracts can also restrict, with regard to copyright, what may be done with a counterparty's content (see next point).

- Protection of third-party content: This generally concerns copyright and, to a lesser extent, industrial property rights and legal provisions that restrict the use of third-party content even where it is not protected by copyright. On the one hand, care must be taken to ensure that AI tools and applications are fed only with content for which the company has the necessary rights or whose use the law permits without the rights holder's consent (in the US, such use is referred to as "fair use", which is why we chose this term for our one-pager; in Europe, we have a similar but more strictly regulated concept). On the other hand, it is important to ensure that the AI does not produce content that infringes third-party rights. There are certain strategies for this, although common sense usually helps a lot. We have covered these strategies in our blog post no. 14, together with a checklist and further explanations on copyright law and generative AI.

- EU AI Act: The EU's new AI regulation is on everyone's lips even before it has come into force. Companies have already started to prepare for it, and not only in the EU, since it also claims extraterritorial application in certain cases. However, the AI Act is not a general AI regulation, as is often assumed, but essentially a product safety regulation. In practice, the main effort will be to check for each application (including existing ones) whether AI is used and whether it is covered by the AI Act at all (AI is essentially any system that fulfils its task not only on the basis of fixed program instructions, but at least partially on the basis of training). In any case, most companies will try to avoid entering the area of "high-risk" AI systems under the AI Act, because providers in particular must fulfil considerable additional requirements for such systems. For non-critical AI applications, the AI Act primarily provides for transparency obligations, which most companies will be able to fulfil quite easily. We have commented in detail on the AI Act and its scope of application in our blog post no. 7. We have also created a practical overview of the various use cases, which anyone can use to quickly check whether their use case is critical or even prohibited. To do this electronically, we recommend the "AI Act Checker" in our electronic GenAI risk assessment tool GAIRA, which is available free of charge. GAIRA, including the AI Act Checker, can also be used internally as part of compliance checks, as more and more companies are doing. Pro memoria: in addition to the EU's AI Act, a growing number of jurisdictions are enacting separate AI laws that regulate either AI in general or only certain aspects of it (such as AI-supported decisions or the protection of personality rights).

- Other aspects: In addition to the classic, purely legal requirements, there are a number of other aspects that companies typically want to take into account as part of compliance (although not always all of them); we have summarised the most important of these on our one-pager. Swiss law, for example, does not generally prohibit discrimination in the private sector, but many companies have at least set themselves the goal of avoiding discriminatory AI, and certain regulators, such as the Swiss Financial Market Supervisory Authority FINMA, even make this a de facto obligation for supervised institutions (we gave our initial thoughts on AI in the financial market sector in blog post no. 8). We will address some of these points separately, such as the concept of the "explainability" of AI results, as many people find it difficult to visualise what this means in concrete terms (here again we refer to blog post no. 17 on what an AI model actually contains). On the issue of transparency, we refer you to blog post no. 16. Further points can be found in our 11 principles for the responsible use of AI, which we discussed in our blog post no. 3.

We have deliberately not covered AI governance in our one-pager, with the exception of the reference to appropriate testing, monitoring and risk management. However, companies must also consider the means by which they want to ensure that the compliance objectives (and other objectives of using AI) will be achieved. We have described the basic structures of AI governance in blog post no. 5. More will follow here.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.