We regularly receive enquiries from small and medium-sized companies that want to use artificial intelligence (AI) but are unsure how to do so correctly from a legal perspective. We are dedicating this blog post no. 27 of our series on the responsible use of AI in companies to them. Larger companies may also find some of our tips helpful.

Firstly, a basic attitude needs to be clarified: We believe that companies should make it as easy as possible for their employees to use AI. This is not because we think AI is a "cool" thing. Nor is it because we always find AI useful; we don't think it always is. However, companies should realise two points:

- No shadow AI: Employees use AI anyway when they feel like it - and if the company does not provide the tools, they simply use their private AI tools. This means that the company loses control of its data and everything else from the outset.

- Create AI expertise: Many companies will sooner or later be dependent on their employees having what is now referred to as "AI literacy", and they will only gain this if they can at least partially acquire and deepen it in their day-to-day work.

Step 1: Acquire the right tools

In small and medium-sized companies, the use of AI usually means that appropriate tools are procured and made available. We are talking in particular about generative AI, i.e. services such as ChatGPT, Copilot, Gemini or Perplexity, but also audio, video and image-orientated services. A lot can be done with them in everyday life if employees don't have to be afraid of using these services with company data.

It gets even cheaper and potentially also easier if so-called API access is used with the right tools; the major cloud AI providers such as OpenAI, Microsoft or Google offer such access. Using AI via API means that logging in via a browser or the service's app is no longer needed. Instead, a separate tool is used that accesses the service via a special access for developers and major customers, the Application Programming Interface or "API" for short. Many (but not all) AI providers offer such access. We use AI in this way with our free tool "Red Ink" and with the free chat solution "Open WebUI". Our tool gives us more features within Office than Copilot and is easier to use, and Open WebUI gives us access to various models from different providers within one chat environment – all at much lower cost.
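To make "use via API" more concrete, here is a minimal Python sketch of what such a separate tool does under the hood. It only builds the request and does not send it. The endpoint and request shape follow OpenAI's publicly documented chat completions API; the model name and API key are placeholders we introduce for illustration, and other providers use different URLs and formats.

```python
import json
import urllib.request

# Endpoint of OpenAI's chat completions API; other providers use different URLs.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "example-model") -> urllib.request.Request:
    """Build (but do not send) an API request for a single chat prompt.

    `model` is a placeholder; use a model name covered by your contract.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the API key replaces the browser login
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("YOUR_API_KEY", "Summarise this memo in three bullet points.")
# Sending it would be: urllib.request.urlopen(request)
```

In practice, tools such as a chat front end handle this step for the user; the point is merely that the API key, and therefore the contract behind it, belongs to the company rather than to an individual employee's account.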

Note that, in principle, a company can also run its own AI model on its own server. In our experience, however, this is not an option for many small and medium-sized companies because it requires technical know-how and investments (in hardware) that many will not have or be willing to make when starting to use AI. Keeping up with model developments is also not always easy, even though a number of open-source large language models are available for free.

The challenge when using such tools is to find the right subscription and the right contract. Unfortunately, many providers - including major players such as Microsoft - are still not entirely clear and transparent about the applicable terms, keep changing their contracts and product names and do not necessarily offer fully compliant solutions. Microsoft, for example, has incomprehensibly still not managed to offer a version of its "Copilot M365" product that can be used in a corporate context with personal data in a manner that is fully compliant with data protection requirements (we still recommend disabling the "web search" function, because it is not subject to a data processing agreement).

When contracting with an AI provider, make sure that the contract includes:

- a data processing agreement (DPA) that applies to all functions (with the necessary additions for your own country, e.g. for Switzerland or the UK);

- an obligation to maintain the confidentiality of customer data;

- an undertaking that the customer's own content will not be used for the provider's own purposes such as AI training;

- a right to use the generated outputs freely (i.e. no additional licences have to be paid for and there are no restrictions on use that do not suit the company).

These points are often met if the company subscribes to the corporate and not the consumer versions of the services in question. Although they are generally more expensive, only they offer the necessary contracts - if at all (for instance, even some corporate contracts lack a proper confidentiality undertaking on the part of the provider). Anyone who has a contract as laid out above can, from a legal perspective, in principle also use these services for personal data and "normal" confidential data.

A risk decision is undoubtedly necessary here, but companies should ultimately be aware of this: If employees have to think twice every time they want to use a document with AI to check whether this is permitted, then the use of AI will not get off the ground. It is therefore worth procuring the right contracts and checking whether a company really has data and content that cannot be processed with AI as an "assistant" for all the usual day-to-day tasks. Most companies will hardly ever have such data and content, or will not use AI for things that are problematic in this respect. For example, most companies will be well served by the large off-the-shelf language models and will not need to do their own fine-tuning or training.

Anyone who is subject to professional or official secrecy, such as law firms, medical practices or public administrations, must fulfil additional requirements, which we will not go into here. We have done this in our firm, for example, and can use AI with all data on a day-to-day basis; this was an important step in the effective use of AI.

To provide some guidance when making your first selection, we have created an overview of the most common AI offerings. This can be found in blog no. 25 and here. Anyone who is unsure about a specific contract should consult an experienced legal advisor. The tools must also be configured correctly.

The costs should not be neglected either: these can quickly add up with subscriptions; prices of CHF/EUR 15-40 per employee per month for each service are quite common for general-purpose AI services. Use via API is usually much cheaper. Here the prices are CHF/EUR 0.5-2 per million tokens of input (tokens roughly correspond to words, partial words and punctuation marks) and typically one to four times as much for output tokens. However, this is constantly changing and also depends on the geographical location of the server. European API locations are more expensive than those in the USA.
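As a rough illustration of how these price ranges translate into a monthly figure, the following Python sketch estimates the API cost for one employee. The usage volumes (2 million input tokens and 0.5 million output tokens per month) are purely our assumption for the example; actual usage varies widely.

```python
def monthly_api_cost(input_tokens: int, output_tokens: int,
                     input_price_per_million: float,
                     output_multiplier: float) -> float:
    """Estimate the monthly API cost in CHF/EUR for one employee."""
    output_price_per_million = input_price_per_million * output_multiplier
    return (input_tokens / 1_000_000 * input_price_per_million
            + output_tokens / 1_000_000 * output_price_per_million)


# Assumed usage: 2 million input and 0.5 million output tokens per month.
low = monthly_api_cost(2_000_000, 500_000,
                       input_price_per_million=0.5, output_multiplier=1)
high = monthly_api_cost(2_000_000, 500_000,
                        input_price_per_million=2.0, output_multiplier=4)
print(f"Estimated API cost per employee per month: {low:.2f} to {high:.2f}")
```

Under these assumed volumes, the estimate stays below the subscription range mentioned above; heavy users or more expensive models can change the picture, so it is worth rerunning the numbers with your own figures.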

Don't forget: Companies should appoint an owner for each tool and solution that they purchase for productive use, i.e. a person who is personally responsible for the contracts and other aspects of compliance, including defining usage guidelines. This ensures that preconditions are checked or that it is at least clear who is responsible if this does not happen. The decision to authorise a product does not lie with the specialist body, but with the "business" - the specialist bodies only provide advice and point out the risks, which of course always exist.

Step 2: Issue a usage policy

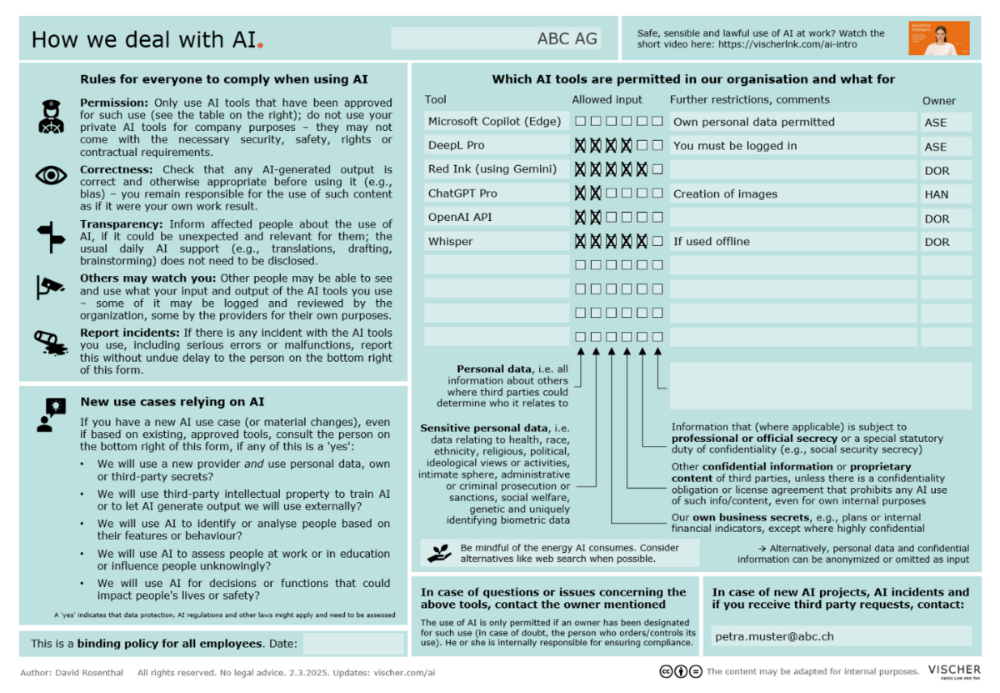

Small and medium-sized companies should also instruct their employees which AI tools they are allowed to use and how. Last year, we published a single-page template for an AI usage policy that can be used free of charge and can also be converted into your own format. We revised this policy template at the beginning of this year and it is also available free of charge in German and English.

Its key content:

- Use of AI applications: It specifies which AI applications may be used and how. For each application, it is possible to specify which data categories and other content are permitted as input - as this is essentially what matters at tool level. It is also specified which restrictions apply (e.g. that a tool such as "DeepL Pro" may only be used if the employee has logged in, as otherwise the contractual protection provisions do not apply). The respective "owner" of the tool is also stated, i.e. the person in the company who is responsible for ensuring that these specifications and the contracts with the provider are appropriate, that the legal framework conditions are complied with and that the risks are acceptable.

- Basic rules for the use of AI: The policy lists the most important basic rules that employees should follow when using AI. Compared to the previous version, we have broken these down to what applies to "normal" employees: using AI applications only with authorisation, checking the results for accuracy, ensuring the (necessary) transparency and reporting incidents. We would also like to point out that the use of AI applications can be recorded and analysed - a fact of which many are unaware.

- Trigger questions for AI applications that need a closer look: To ensure that any new AI application or any other legally or reputationally problematic use of AI is identified early on, we have formulated five trigger questions that even inexperienced employees can use to recognise whether an AI application is potentially problematic and requires closer examination, for example with regard to data protection, intellectual property and confidentiality (more on this in our blog no. 18). The questions also cover the "prohibited" and "high-risk" cases of the EU AI Act, insofar as this is relevant for a company (see blog no. 7, the article by David Rosenthal and this overview). In these cases, the person who wants to introduce such an AI application is obliged to contact the person responsible for reviewing new applications; this should typically be someone internal who at least serves as a point of contact and knows where to obtain the necessary external advice. It is also stipulated that AI applications may only be used if there is an owner responsible for them.
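For companies that want to mirror the per-tool rules of such a policy in a simple register, the following Python sketch shows one possible structure. It is an illustration only and not part of our template: the field names, the data categories and the sample entry (including the owner and the permitted categories) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ApprovedTool:
    name: str
    owner: str                      # person responsible for contracts and compliance
    allowed_data: set[str] = field(default_factory=set)
    login_required: bool = False    # contractual protection may only apply when logged in


def may_use(tool: ApprovedTool, data_category: str, logged_in: bool) -> bool:
    """Return True if the policy entry permits this input for this tool."""
    if not tool.owner:
        return False                # no owner, no use
    if tool.login_required and not logged_in:
        return False
    return data_category in tool.allowed_data


# Hypothetical policy entry; the permitted categories are illustrative only.
translator = ApprovedTool(
    name="DeepL Pro",
    owner="Head of Operations",
    allowed_data={"public", "internal", "personal"},
    login_required=True,
)

print(may_use(translator, "personal", logged_in=True))
print(may_use(translator, "personal", logged_in=False))
```

The checks correspond to three of the rules described above: permitted data categories per tool, tool-specific restrictions such as the login requirement, and the rule that an application may only be used if it has an owner.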

With this policy, a company has a simple minimum set of rules and governance for ensuring compliance with the legal requirements and managing the risks associated with AI applications. The major challenge in practice is the above-mentioned legal review of the contracts of the tool providers and other legal requirements. To this end, we work with our clients using checklists to assess tools for compliance with these requirements; this is not rocket science. In most cases, it is done by the internal data protection office, or external legal advice is sought where companies feel unsure.

We have summarised the 18 most important compliance issues when using AI on a single sheet (blog no. 18), and most of them have to do with data protection. However, experience also shows that they can usually be resolved easily. For example, the use of AI assistants for everyday tasks is now generally considered unproblematic; gut feeling is usually a good indicator of whether an application is problematic - in conjunction with the trigger questions mentioned above.

For a risk assessment of AI applications in the field of generative AI, we have developed our "GAIRA" tool, which is available free of charge here and is already being used by many companies. For most projects, it is sufficient to fill out the "GAIRA Light" form; even just the act of filling it out leads to the respective owner of the tool or solution asking themselves the most important questions. While they may not be able to answer all of them, they will have already gained a better understanding of the issues and risks.

Step 3: Training and transparency

The third step is to publicise what the company wants to use in terms of AI, both internally and externally, and to communicate this in the necessary depth.

As a rule, not much needs to be done externally. Current law already requires that the use of AI is made transparent, to put it simply, if it is used to process data of individual, recognisable persons who do not expect it. In the case of everyday AI tools, at least the last criterion will often no longer represent an issue because the use of AI is becoming increasingly "normal" so that people expect that their data will be used with AI. You may still have to mention it in the privacy notice, but you won't have to display it prominently.

However, we recommend that small and medium-sized companies also read through their privacy notice and ensure that

- for the sake of simplicity, it is mentioned that personal data can go to service providers located in any country in the world (if this is not already stated);

- the purposes for which AI with personal data is used are listed (if AI is only used for what was previously done without AI, no addition is needed here; an addition is needed if something new is done, e.g. if data is used for training AI);

- to be on the safe side, it is stated that personal data can not only be collected from social media or elsewhere from the internet (as is the case with every company), but also generated using AI (e.g. when a job application is analysed using AI and it generates a report). More on the requirements of transparency in our blog no. 16.

Internally, it must be ensured that employees know how to deal with AI, what AI can and cannot do and what risks are associated with it. The AI policy can set out everything in words and symbols, but this alone is usually not enough. In our experience, ensuring that employees learn how to use AI efficiently, safely and effectively is even more important than ensuring compliance in all details. This goes beyond workshops for prompting and the like but does not necessarily require external training courses. It may be sufficient to identify those people in the company ("power users") who have slightly more AI expertise than their colleagues and can therefore be used as trainers, for example. This will also help you get your people to use AI more effectively for the benefit of their work and the company.

Incidentally, we have made a very simple video clip on raising awareness of AI available free of charge in various languages in our blog no. 1. Teaching expertise involves showing how AI can be used profitably, but also talking about what can go wrong or how AI can be misused. This applies not only to our own use of AI, but also to how fraudsters can use AI today, for example in the form of deep fakes.

And afterwards?

The world is also moving fast in the field of AI. While most companies should not let this drive them crazy ("FOMO" or "FOMA"), they should nevertheless ensure that they review their use of AI at least once a year. This includes the contracts on the one hand, but also the experiences of the employees on the other: Have there been any incidents? Where are there problems and what is going well? What should everyone in the company be made aware of?

Moreover, AI should not be an end in itself but should bring with it a real business case. We know of many examples where a lot of money has been spent on AI without it having any real business benefit: AI is then either used for marketing ("Look how we use AI and how advanced we are!") or because those responsible are afraid of being left behind. Fortunately, the hype has now calmed down somewhat.

As part of the review, companies should also ensure that they maintain an overview of their use of AI. Those who do not use the policy for this (see example above), or for whom it is not sufficient, should consider creating a separate inventory for this purpose. Such tools - together with risk assessment forms - will become increasingly important for compliance in the field of AI. In certain industries, they are already de facto mandatory (see box) and the planned AI regulation in Switzerland will also adopt this concept (see blog no. 23).
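A separate inventory does not need to be elaborate. As a minimal sketch, assuming a simple list of entries with an owner, a purpose and the date of the last review (all field names and sample entries are hypothetical), the following Python snippet flags the applications whose annual review is overdue:

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class InventoryEntry:
    application: str
    owner: str
    purpose: str
    last_review: date


def overdue_reviews(entries, today, max_age_days=365):
    """Return the entries whose last review is more than `max_age_days` old."""
    limit = today - timedelta(days=max_age_days)
    return [entry for entry in entries if entry.last_review < limit]


# Hypothetical sample inventory
inventory = [
    InventoryEntry("Chat assistant", "A. Example", "drafting and summarising", date(2024, 1, 15)),
    InventoryEntry("Translation tool", "B. Example", "translating correspondence", date(2024, 11, 1)),
]

overdue = overdue_reviews(inventory, today=date(2025, 3, 1))
for entry in overdue:
    print(f"Review overdue: {entry.application} (owner: {entry.owner})")
```

A spreadsheet with the same columns serves the same purpose; what matters is that each application has an owner and a review date that someone actually checks.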

AI policies for larger and regulated companies

In larger companies, we recommend a more detailed regulation of the use of AI. This also includes the above requirements, but should also cover the tasks, competences and responsibilities in relation to the development, authorisation and monitoring of AI applications in more detail. We have developed templates for this purpose.

It should be noted that in many companies, many of the issues affected by AI applications, such as data protection, are already regulated and there are also established processes and responsibilities for this. For many of our clients, these have since been expanded to include the review of AI-specific regulation - namely the EU AI Act. Even though it differs fundamentally from data protection in terms of its nature, its objectives and characteristics, it is covered by the same bodies in many companies. The more comprehensive policies typically address compliance with the EU AI Act specifically and also address the requirements for the development of new AI applications and the onboarding of AI models. In light of the EU AI Act, many of our clients are already reviewing their AI applications to determine whether they are prohibited or high-risk applications and what role the company plays.

In practice, we also see more specific AI requirements in relation to contracts with suppliers and other contractual partners. On the one hand, these should address the specific risks and needs of the company when procuring AI-based products and services (in addition to the requirements that generally apply to the involvement of providers, e.g. in the cloud). We have developed a modular system for contractual clauses for our clients that also addresses these points here. On the other hand, clauses should allow companies to control what happens to their data and content in other cases, such as whether and to what extent contractual partners may use it for AI training, which does not only affect suppliers of AI applications.

In the Swiss financial industry, compliance with the supervisory expectations of the Financial Market Supervisory Authority FINMA is also required. This was recently explained in more detail in FINMA's Guidance 08/24 (here is a presentation on the topic, in German only). This is also part of our work and we have developed corresponding templates for such a policy, too. Just ask us.

We support you with all legal and ethical issues relating to the use of artificial intelligence. We don't just talk about AI, we also use it ourselves.
