The AMA, with support from Manatt Health, recently developed an interactive toolkit to help providers establish an AI governance framework. Click here to read the full report.

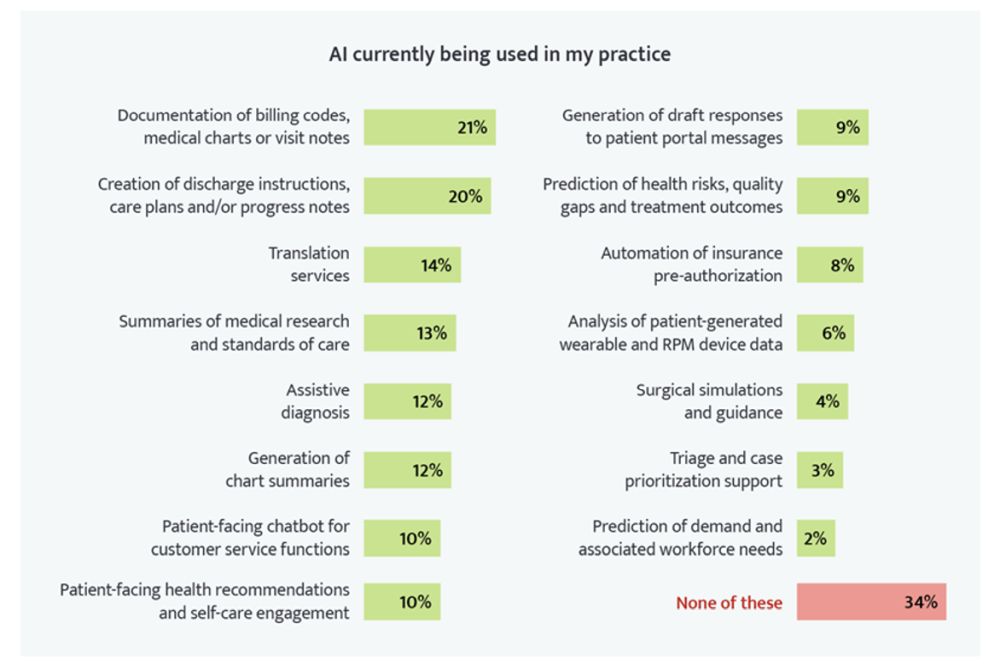

Artificial intelligence broadly refers to the ability of computers to perform tasks that are typically associated with a rational human being (a quality that enables an entity to function appropriately and with foresight in its environment). The AMA uses the phrase "augmented intelligence" (AI), an alternative conceptualization used across health care that focuses on artificial intelligence's assistive role and emphasizes that AI enhances human intelligence rather than replacing it. The use cases for AI tools in health care are vast and include both clinical and administrative activities, such as summarizing medical notes, detecting and classifying the likelihood of future adverse events, assisting in diagnosis, and predicting patient volumes and associated staffing needs. Figure 1 shows the number of physicians who reported in 2024 that they were using AI tools in their practice.

Figure 1.

There is excitement about the transformative potential of AI to enhance diagnostic accuracy, personalize treatments, reduce administrative and documentation burden, and speed up advances in biomedical science. At the same time, there is concern about AI's potential to worsen bias, increase privacy risks, introduce new liability issues, and offer seemingly convincing yet ultimately incorrect conclusions or recommendations that could affect patient care.

Establishing AI governance is important to ensure that AI technologies are implemented in care settings in a safe, ethical, and responsible manner. Governance empowers health systems to:

- Manage tool identification and deployment

- Standardize risk assessment and risk mitigation strategies

- Maintain comprehensive documentation

- Ensure safe applications with robust oversight

- Decrease clinician burnout

- Promote collaboration and alignment across the institution

The STEPS in this toolkit will provide guidance on building robust AI governance, including establishing leadership, structure, and roles; aligning AI initiatives with strategic goals; developing policy; ensuring proper training and education; and evaluating and monitoring the impact on users.
