
It is often said that the use of AI comes with risks. This is true, and we ourselves have published a tool for assessing such AI risks, which is enjoying some popularity. However, where AI applications do not harbour high risks, their assessment can be less stringent. In part 20 of our AI blog series, we present a risk categorisation for discussion.

The use of generative AI in particular requires companies to assess and control the associated risks. However, practice shows that, on closer inspection, many applications in this area present only limited risks, or at least no high risks. We are therefore seeing a growing need among our clients for a risk-based approach to assessing and authorising AI applications. In addition, AI is already used in numerous products and services today without these ever having been assessed for AI-specific risks, because nobody recognises or questions the AI components they contain. We all use such products ourselves, for example when unlocking a mobile phone with biometric sensors or translating texts.

Scheme for Categorising AI risks

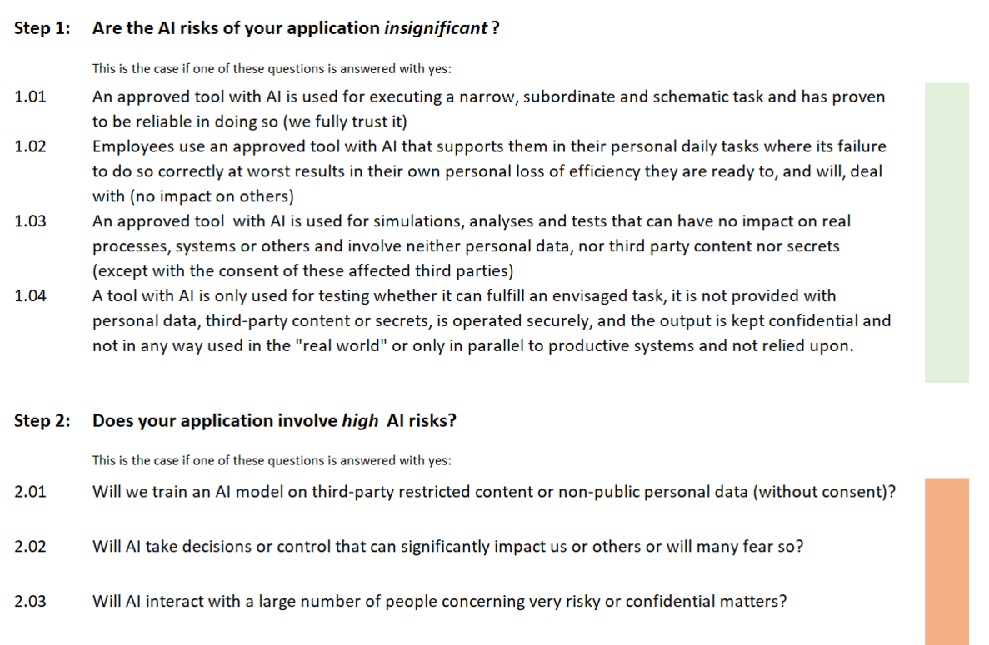

In our tool for assessing and documenting the risks of applications with generative AI ("GAIRA"), we had already listed some questions for identifying GenAI projects with higher risks. We have now supplemented the tool with a more comprehensive questionnaire for classifying AI applications according to their AI risks. It differs from the risk assessment worksheets in that it does not ask about measures, precautions and residual risks, but about risk factors and risk indicators in the field of AI.

This questionnaire distinguishes between the following classes:

- Insignificant risks: This class includes, for example, tools that use AI techniques, such as text recognition software, but which we trust completely because we know them well. We consider it important to exempt such applications at an early stage so that they do not burden the risk management process and its resources remain available for the projects that really need them.

- High risks: Applications in this class, such as systems considered high-risk under the AI Act, require a more detailed assessment of AI risks. Examples include training models on non-public personal data without the consent of the persons concerned, and systems that make important decisions or entail a high potential for damage. In our experience, only a few projects will fall into this category.

- Medium risks: This class includes AI applications that are not entirely harmless and have the potential to cause damage, albeit to a limited extent, but that are becoming increasingly common. Important decisions are not made by the machine, although it may prepare them. The class also covers projects that can have negative reputational consequences for the company if no countermeasures are taken. A chatbot on a company's website typically falls into this category.

- Low risks: All applications that are not insignificant and, according to the questionnaire, present neither high nor medium risks automatically fall into this category, for example the transcription of calls or the drafting of customer letters.
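The way the four classes relate to one another, with low risk as the fallback, can be illustrated as a simple decision procedure. The following Python sketch is purely illustrative: the yes/no indicators in the hypothetical `QuestionnaireAnswers` class are simplifications we have made up for this example, not the questions actually used in GAIRA, and the sketch assumes that high-risk indicators take precedence over the exemption for trusted tools. A company could just as well choose a different order.

```python
from dataclasses import dataclass
from enum import Enum


class RiskClass(Enum):
    INSIGNIFICANT = "insignificant"
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass
class QuestionnaireAnswers:
    # Hypothetical, simplified risk indicators (not the actual GAIRA questions).
    trusted_well_known_tool: bool = False
    high_risk_under_ai_act: bool = False
    trains_on_nonpublic_personal_data_without_consent: bool = False
    makes_important_decisions: bool = False
    high_damage_potential: bool = False
    prepares_important_decisions: bool = False
    limited_damage_potential: bool = False
    reputational_exposure: bool = False


def classify(answers: QuestionnaireAnswers) -> RiskClass:
    """Assign one of the four risk classes; LOW is the fallback."""
    # Assumption: high-risk indicators override the exemption for trusted tools.
    if (
        answers.high_risk_under_ai_act
        or answers.trains_on_nonpublic_personal_data_without_consent
        or answers.makes_important_decisions
        or answers.high_damage_potential
    ):
        return RiskClass.HIGH
    # Trusted, well-known tools are exempted early so they do not burden the process.
    if answers.trusted_well_known_tool:
        return RiskClass.INSIGNIFICANT
    # Medium: the machine prepares (but does not make) important decisions,
    # damage is possible but limited, or there is reputational exposure.
    if (
        answers.prepares_important_decisions
        or answers.limited_damage_potential
        or answers.reputational_exposure
    ):
        return RiskClass.MEDIUM
    # Neither high nor medium and not insignificant: low risk.
    return RiskClass.LOW


# Example: a chatbot on the company website with reputational exposure.
print(classify(QuestionnaireAnswers(reputational_exposure=True)).value)  # medium
```

An application for which none of the indicators is set, such as a call transcription tool in this simplified model, falls through to `RiskClass.LOW`.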

The classification is based on the AI-specific risks that an application entails for the company in question, namely financial, operational, reputational and compliance risks. Risks for affected persons are therefore covered only indirectly. Their assessment is already regulated by law in various respects, for example by the data protection impact assessment, which is only required where processing is likely to result in a high risk to data subjects.

The classification we have proposed is a draft for public discussion. Each company will have to define its risk classes according to its own understanding and appetite for risk. For example, it is quite conceivable that a company might classify a chatbot application for customer support on its website as a low risk rather than a medium risk.

In this sense, there is no universal approach. Our proposal can serve as a starting point for discussing AI risk levels within a company and can be amended to reflect stakeholder expectations, ethical considerations and risk appetite.

We welcome suggestions and feedback to improve our risk classes and would like to thank those who have already provided feedback.

Have internal rules for handling the projects

Each company will also have to decide for itself what consequences follow from assigning a project to a particular risk class. It is conceivable, for example, to grant general approval for projects with insignificant or low risks, while projects with medium risks are examined according to simplified requirements.

However, it should always be borne in mind that, in addition to the AI-specific risks of each application, there are compliance requirements that must be met regardless of an application's AI risk class. One example is the conclusion of a data processing agreement (DPA) when a provider is commissioned to process personal data. Such a DPA is necessary even if the AI risks of the project are low, because data protection law may require it regardless of whether AI is used.
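The idea that class-dependent handling rules and class-independent compliance requirements apply side by side can be sketched as follows. The `HANDLING` table is a hypothetical internal policy that mirrors the example given above (general approval for insignificant and low risks, simplified review for medium risks); the step names and the single DPA check are illustrative assumptions, not a complete compliance checklist.

```python
from enum import Enum


class RiskClass(Enum):
    INSIGNIFICANT = "insignificant"
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical internal policy: each company defines its own consequences per class.
HANDLING = {
    RiskClass.INSIGNIFICANT: "general approval",
    RiskClass.LOW: "general approval",
    RiskClass.MEDIUM: "simplified review",
    RiskClass.HIGH: "detailed AI risk assessment",
}


def required_steps(risk_class: RiskClass, provider_processes_personal_data: bool) -> list[str]:
    """Return the handling step for the class plus class-independent compliance steps."""
    steps = [HANDLING[risk_class]]
    # Applies regardless of the AI risk class: if a provider processes
    # personal data on the company's behalf, a DPA is needed.
    if provider_processes_personal_data:
        steps.append("conclude data processing agreement (DPA)")
    return steps


# Example: a low-risk project that still needs a DPA with its provider.
print(required_steps(RiskClass.LOW, provider_processes_personal_data=True))
# ['general approval', 'conclude data processing agreement (DPA)']
```

Keeping the compliance checks outside the class lookup reflects the point above: a low AI risk class shortens the AI review, but it does not waive obligations that arise from data protection law.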

The risk classes can also be helpful where only a tool is approved and the specific application is determined by the individual user. For example, if a chatbot is approved for personal use and the basic aspects of data protection are ensured, it is usually not expedient to require every application that employees want to implement with it to be resubmitted to the compliance or legal department.

Over the next twelve months, we expect companies to become increasingly relaxed about authorising the use of such tools: as the tools become more widespread, employees' experience with them and their willingness to use them responsibly will grow.

The latest version of GAIRA can be downloaded here free of charge. We have also made various other updates to the tool. In addition, the licence conditions have been extended: they now permit further adaptations to your own requirements, translations and the inclusion of your own logos.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.