
The first-ever legal framework on Artificial Intelligence could be agreed by EU legislators as early as this December. The draft Regulation proposed by the Commission in 2021 is in the final stages of the negotiation process, with the Council and Parliament nearing agreement on the final text.

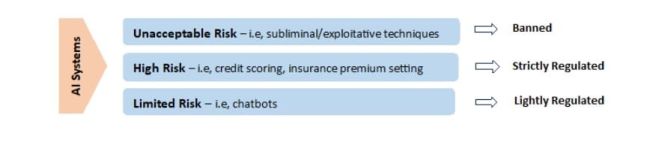

These landmark rules follow a risk-based approach, setting out requirements in proportion to the level of risk that AI systems present for end users.

Systems classified as posing an "unacceptable risk" (such as those using subliminal techniques) will be banned, while those classified as "high-risk" (such as credit scoring systems) will be subject to strict obligations.

The financial sector is expected to be significantly affected by the new rules, as it makes extensive use of data-driven systems and processes and is becoming increasingly reliant on AI-powered models.

Background

The draft regulation employs a three-tier classification model, differentiating AI systems on the basis of the risk they pose to people and categorizing them as "unacceptable risk", "high-risk" and "limited-risk".

The text specifies which types of AI systems present an unacceptable risk and which present a high risk.

Focus on high-risk AI systems

Annex III of the Commission's proposal sets out several types of AI systems that are considered high-risk. This includes systems for remote facial recognition, hiring or evaluating employees, grading students, determining eligibility for social benefits, assessing creditworthiness, and predictive policing.

AI systems falling into the high-risk category will be subject to several strict requirements. These requirements apply principally to the "provider" of an AI system, that is, the person who develops the system, or has it developed, with a view to placing it on the market or putting it into service under its own name. Entities that only use such systems ("users") could qualify as providers where they place the system on the market or put it into service under their own name or trademark, or where they modify the system or its intended purpose.

Requirements for providers of high-risk systems

- Risk Management System → identification and assessment of risks and risk mitigation measures

- Data Governance → ensuring the high quality of the data used to train and validate the system

- Technical Documentation → containing all the necessary information on the system and its purpose, so that authorities can assess its compliance

- Record-Keeping → automatic recording of activity ("log files") to ensure the traceability of the system's functioning

- Transparency → sufficient transparency to enable users to interpret the system's output and use it appropriately

- Human Oversight → availability of human-machine interface tools, enabling effective oversight of the system's functioning by natural persons

- Accuracy, Robustness and Cybersecurity → an appropriate level of each, achieved through the system's design and through suitable measures and techniques

High-risk AI systems will also be subject to a conformity assessment, which the provider must perform before placing the system on the market, in accordance with Annex VI or Annex VII of the Regulation. The provider must also carry out post-market monitoring to verify the system's continued proper performance and compliance.

An entity that uses a high-risk AI system, provided it is not considered to be the provider of that system, will be subject to limited obligations, such as using the system in accordance with its instructions, monitoring its operation, notifying the provider of any serious incident or malfunction, and keeping the records (log files) that the system generates.

Limited-Risk AI systems

These are subject only to certain transparency obligations. In particular, AI systems intended to interact with natural persons (such as chatbots) must inform those persons that they are interacting with an AI system. Natural persons must also be informed when image, audio or video content is an AI-generated "deep fake".

Reaching an agreement on the final text

During the negotiations, the European Parliament tabled proposals to expand the list of prohibited systems, including bans on systems for real-time remote biometric identification in public spaces, social scoring, and biometric categorization using sensitive characteristics (e.g., gender, race, religion). As for the high-risk AI systems listed in Annex III, Parliament suggested that they be considered high-risk only where they pose a significant risk to the health, safety or fundamental rights of natural persons. It also proposed expanding the list of high-risk AI systems to include, for instance, AI systems intended to draw inferences about the personal characteristics of natural persons from biometric data, including emotion recognition systems.

How the points on which the Council and Parliament diverged will be resolved will not be known until agreement is reached on the final text. It is understood that the co-legislators have found common ground on numerous issues, although a few points of contention remain.

Although agreement on the final text could be reached as early as this December, publication of the official text of the Regulation will take a few more months. The final Regulation is expected to provide for differentiated transitional periods before it applies, to facilitate smooth implementation by both national authorities and affected entities. For affected entities, a period of 24 months is considered most likely.

Getting Ready

Providers and operators of systems that may fall within the definition of "AI system", including financial sector firms, should assess early on whether any of their data-driven systems and processes qualify as AI systems and, if so, whether they fall into the high-risk, limited-risk or no-risk category.

Although the final text of the new rules has not yet been agreed, the co-legislators appear to agree that the list of high-risk AI systems should include systems used for creditworthiness assessments and credit scoring, and for calculating premiums for certain insurance products.

Given the onerous requirements that high-risk AI systems are subject to, and the particularly heavy penalties envisaged for non-compliance, entities likely to be affected should start considering the challenges related to transitioning to the new regime and take proactive steps now.

Originally published by Cyprus Mail.
