
The EU's new regulatory framework on AI is undoubtedly a pioneering piece of legislation that could set a global benchmark, but does it strike the right balance between protection and innovation? Addressing the risks that AI technologies pose to people's safety and fundamental rights is of major importance, but so is the need to foster AI innovation in the EU and the uptake of these transformative technologies, which could help reinvigorate the EU economy. To be sure, most AI systems are expected to qualify as low/minimal risk and should thus not be (materially) affected by the new rules. At the other end of the spectrum, AI applications that present unacceptable risks will now be banned, while those classified as high-risk will be subject to a particularly heavy regulatory burden. In other words, the administrative burden of this Regulation falls predominantly on providers of AI systems that qualify as high-risk.

A key concern is that the cost and complexity of compliance could stifle innovation, especially for SMEs and startups with limited means. Another concern is the legal uncertainty stemming from the broad definition of "AI system", which could catch systems and software that would not typically be thought of as artificial intelligence, such as certain manually constructed, expert-rule-based systems. Furthermore, early signals from the new US administration suggest that the US may adopt a light-touch regulatory approach to AI, which could affect Europe's relative AI innovation and competitiveness.

As the February 2025 deadline for the application of Chapter II of the Act ("Prohibited AI Practices") approaches, entities, especially in industries more likely to use AI such as Financial Services, Marketing, E-commerce, Gambling, Gaming, Cybersecurity, and Healthcare, should as a matter of priority carry out a company-wide systems screening to identify and promptly remove AI applications that could fall foul of the Chapter II ban. The Regulation is particularly tough on violations of the ban, providing for fines of up to €35 million or 7% of total worldwide annual turnover, whichever is higher.
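The fine ceiling for breaching the ban comes down to comparing two caps. The sketch below assumes the rule in Article 99 of the Act for breaches of the prohibitions: up to €35 million or 7% of total worldwide annual turnover for the preceding financial year, whichever is higher, except that for SMEs and start-ups the lower of the two applies. The function name and the input format (whole euros) are hypothetical, and the result is only a statutory upper bound, not an estimate of the fine a regulator would actually impose.

```python
from decimal import Decimal

# Caps assumed from Art. 99 of the AI Act for breaches of the Chapter II bans.
FIXED_CAP_EUR = Decimal("35000000")
TURNOVER_SHARE = Decimal("0.07")


def max_prohibited_practice_fine(worldwide_turnover_eur: int, is_sme: bool = False) -> Decimal:
    """Return the maximum fine (in EUR) for a Chapter II violation.

    worldwide_turnover_eur: total worldwide annual turnover for the preceding
    financial year, in whole euros (hypothetical input format).
    For most undertakings the ceiling is the higher of the two caps; for
    SMEs and start-ups it is the lower of the two (assumption, see lead-in).
    """
    # Decimal avoids binary floating-point rounding in the percentage.
    turnover_cap = TURNOVER_SHARE * Decimal(worldwide_turnover_eur)
    if is_sme:
        return min(FIXED_CAP_EUR, turnover_cap)
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    # Large group with EUR 1 billion turnover: 7% (EUR 70m) exceeds EUR 35m.
    print(max_prohibited_practice_fine(1_000_000_000))
    # SME with EUR 20 million turnover: the lower cap, 7% (EUR 1.4m), applies.
    print(max_prohibited_practice_fine(20_000_000, is_sme=True))
```

For a large undertaking the turnover-based cap overtakes the fixed €35 million once annual turnover exceeds €500 million; below that point the fixed amount is the ceiling.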

To better understand the extent of the administrative burden and the Act's impact on AI development and deployment in the EU, a closer look at the new rules and their practical implications is warranted.

1. Nature, Scope, and Key Definitions

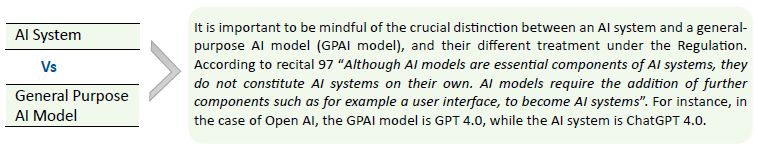

The AI Act lays down rules with respect to AI systems and general-purpose AI models, employing mainly result-oriented provisions to be fleshed out at a later stage by technical specifications (harmonised industry technical standards, codes of practice, and possibly also Commission common specifications). The Act is, moreover, technology-neutral: the goal is not to regulate technology as such (which is neither inherently beneficial nor harmful) but particular uses of technology that pose material risks to people's safety and fundamental rights. This approach makes the Act largely future-proof and provides flexibility for adapting the framework to changes in uses or in the state of the art. The new framework applies across sectors and is without prejudice to existing EU laws, such as the GDPR and the rules on consumer protection, employment, and product safety.

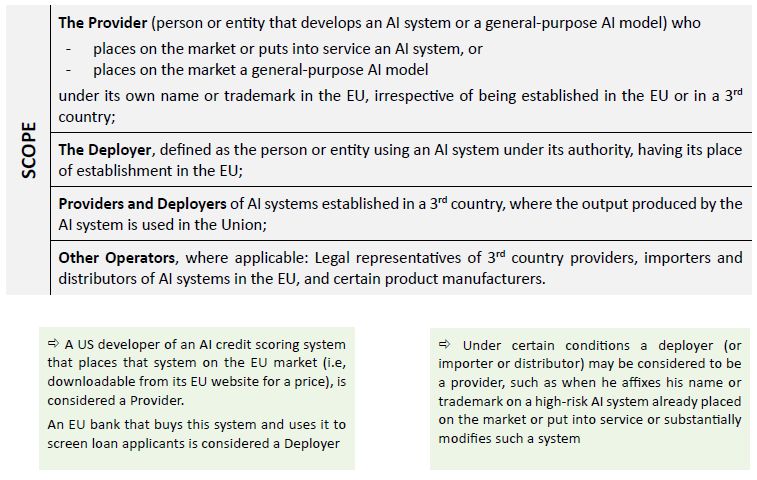

Scope: The Regulation has a broad territorial scope, catching even AI systems from third countries whose output is used in the EU. In particular, it applies to:

It is important to bear in mind that the AI Act applies only if an AI system or model has been placed on the market, put into service, or used in the EU; the stages relating to its development and testing do not fall within the scope of the Act. Other out-of-scope cases include the use of AI systems for military, defence or national security purposes, AI systems and models for scientific research purposes, certain AI systems released under a free and open-source licence, and cases where the deployer is a natural person using an AI system for a purely personal, non-professional activity.

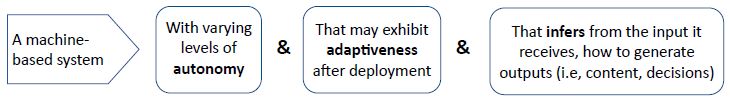

What is an AI System (Article 3(1) & recital 12):

- Autonomy: the system must be designed to operate with some level of autonomy (in the sense of some independence from human involvement); since the definition refers to "varying levels of autonomy", even a low level would probably suffice.

- Adaptiveness: the definition states that a system "may exhibit adaptiveness after deployment", and the word "may" suggests that this is not an essential feature. This matters because the great majority of machine learning systems learn by analysing large amounts of data during a training phase that precedes deployment; in a strict sense, only the very few systems that continue to learn after deployment would qualify as truly adaptive.

- Capability to infer: the core of the definition is the capability to infer outputs from received inputs. An AI system is typically understood as a system trained to detect patterns in a large data set (e.g., X-rays) during its training stage, which can then, at deployment stage, recognise these patterns in new data it has not seen before (new X-rays) and draw accurate conclusions or predictions in a way that resembles human logic. However, the Act's definition, read in conjunction with recital 12, is quite broad and can catch traditional automated systems or software that are not sophisticated or "intelligent" in the sense commonly associated with AI, but that may nonetheless be said to "infer" outputs from inputs in a way that constitutes basic or rudimentary "modelling" or "reasoning".

This broad definition, while helpful in keeping the Act future-proof and less prone to circumvention, casts the regulatory net beyond what would typically be considered AI and is likely to catch less sophisticated systems. For some systems, operators may struggle to determine whether they should be classified as AI at all. More clarity should come from the guidelines that the Commission is expected to issue on the application of the definition.

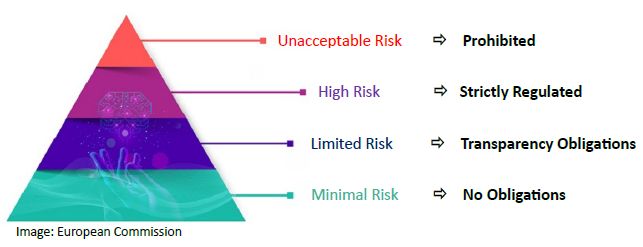

A Risk-Based Regulatory Approach

Systems that qualify as AI systems are subject to a risk-based regulatory treatment with four risk levels. Those in the top category are considered to pose an "unacceptable risk" (e.g., social scoring) and are banned; those in the high-risk category (e.g., credit scoring) are subject to stringent regulation; AI systems classified as limited risk (e.g., AI chatbots) are subject only to certain disclosure obligations. All other AI systems are considered minimal risk and are not subject to obligations (save for the AI literacy provision of Art. 4). According to the European Commission, the vast majority of AI systems currently used in the EU fall into this latter category.
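The tiered structure lends itself to a simple lookup, which can help organise the results of a company-wide systems screening. The sketch below is illustrative only: the `RiskTier` enum, the `triage` function and the lookup table are hypothetical names, seeded solely with the examples mentioned in the text (social scoring, credit scoring, AI chatbots). Defaulting unlisted uses to minimal risk mirrors the text's "all other AI systems" rule and is not a legal test.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels described in the text, with a one-line summary."""
    UNACCEPTABLE = "banned"
    HIGH = "stringent regulation"
    LIMITED = "certain disclosure obligations"
    MINIMAL = "no specific obligations (save AI literacy, Art. 4)"


# Hypothetical lookup table seeded only with the examples given in the text.
# It is NOT a legal classification: real triage requires analysing the
# Act's provisions for each individual system.
EXAMPLE_TIERS = {
    "social scoring": RiskTier.UNACCEPTABLE,
    "credit scoring": RiskTier.HIGH,
    "ai chatbot": RiskTier.LIMITED,
}


def triage(use_case: str) -> RiskTier:
    """Return the example tier for a use case (case- and whitespace-insensitive).

    Unlisted uses default to MINIMAL, mirroring the text's statement that
    all other AI systems are minimal risk; in practice they still need review.
    """
    return EXAMPLE_TIERS.get(use_case.strip().lower(), RiskTier.MINIMAL)


if __name__ == "__main__":
    # Hypothetical inventory produced by a company-wide systems screening.
    inventory = ["Credit scoring", "AI chatbot", "Spam filter"]
    for system in inventory:
        tier = triage(system)
        print(f"{system}: {tier.name} ({tier.value})")
```

A real screening would replace the keyword table with a documented legal assessment of each system; the sketch only shows how an inventory can be mapped onto the four tiers.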

Most of the obligations and requirements of the Act fall on providers of AI systems that qualify as high-risk. Deployers of such systems are also affected, albeit to a much lesser extent. Providers of general-purpose AI (GPAI) models must also comply with several requirements (see GPAI section below), since these models are particularly powerful and versatile and can be integrated into, and form the basis of, a large number of AI systems. GPAI models that qualify as posing systemic risk (most likely including the models underlying GPT-4 or Gemini) are subject to additional, more stringent rules.


The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.