
The Bottom Line

- Many states are enacting laws governing the use of AI in political advertising, with most requiring a disclosure specifying when a political ad contains content that was created, altered, or manipulated using AI.

- The FCC has proposed a new rule that would require television and radio broadcast stations to include a standardized on-air disclosure identifying whenever political ads include AI-generated content.

- Some federal efforts to regulate AI in political advertising, as well as deepfakes more broadly, have not been as successful.

- AI-generated online memes may not be considered political advertising and are therefore unlikely to be impacted by these new legislative and regulatory efforts.

While the 2024 Democratic National Convention takes place this week in Chicago, it is worth reflecting on the remarkable events of this year's general election cycle. The events of July alone – including an assassination attempt and the sudden replacement of the Democratic Party's presidential candidate – have been disorienting enough for voters and commentators. Add to the mix the recent advancements in generative artificial intelligence (AI) technologies, and a general sense of panic would be understandable.

Fortunately, legislators and regulators have been working tirelessly (though with mixed success) to adopt new laws and regulations to mitigate the potential harms that AI can pose, particularly in the context of political advertising.

Last fall, we explored some initial developments that media companies such as Google and Meta were adopting to combat AI-generated election misinformation on their platforms. There have been many developments on the state and federal level since then that will impact the way political campaigns can and cannot use generative AI tools through the rest of the 2024 election cycle and beyond.

State Legislation

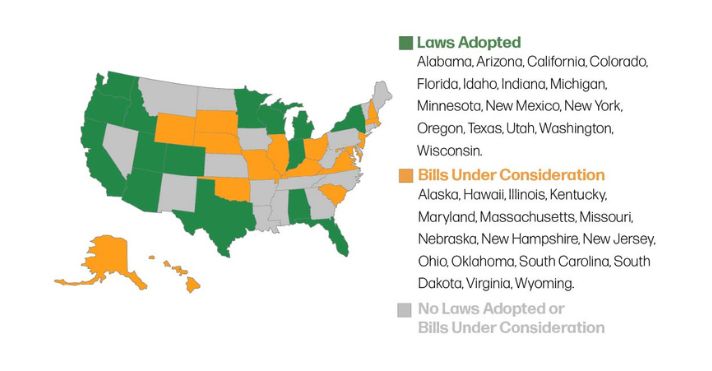

As of August 2024, 16 states have adopted new laws governing how AI-generated content can be used in political advertising. Another 16 states have legislative bills on the issue that are under consideration.

Among the states that already have laws on the books, the majority do not prohibit political advertisers from using generative AI entirely, but simply require that such advertisements disclose whether they include AI-generated content. (Minnesota is one notable exception: it prohibits circulating unauthorized deepfakes of a political candidate in a manner intended to injure the candidate or influence the election.) In many of these states, the disclosure requirement can be satisfied by clearly and conspicuously including language such as "This [image/video/audio] has been manipulated or generated by artificial intelligence" in the advertising. In certain states, the disclosure requirement is only imposed during an "electioneering" period (typically 60 or 90 days) prior to a primary or general election. Such electioneering periods prior to this year's general election have already begun in certain jurisdictions, and many more will begin in early September.

These laws are generally designed to target so-called "deepfake" content or "synthetic media" which falsely, but realistically, depicts a candidate for office doing or saying something that they did not actually do or say, in a manner that is presented as if it were authentic. The majority of states with these laws also allow candidates who are deceptively depicted in AI-generated content to bring an action for injunctive relief or damages against the sponsor of the advertisement.

Federal Communications Commission Proposed Rule

In late July, the Federal Communications Commission (FCC) released a notice of proposed rulemaking (the Notice) proposing a new rule requiring all television and radio broadcast stations to include a standardized on-air disclosure identifying whenever political ads they broadcast include AI-generated content. As with the state legislation described above, the FCC's primary focus is the use of generative AI to create deepfakes, which can be used to spread disinformation and misinformation. This recent initiative by the FCC follows actions it took earlier this year in response to an incident before the New Hampshire primary election, when voters in the state began receiving robocalls featuring an AI-generated deepfake of President Biden's voice encouraging them not to vote on election day. In the wake of this deceptive effort to suppress voter activity, the FCC has been taking steps to expand the reach of the Telephone Consumer Protection Act (TCPA) to impose stricter regulations on robocalls using AI-generated voices.

Under the proposed rule, broadcast stations would be required to (1) ask advertisers whether their ads include any AI-generated content, and (2) present a uniform disclosure message alongside any ad that does. The FCC Chair has stated that the intent of the proposed rule is simply to identify and inform consumers when a political ad includes AI-generated content and that the Commission does not intend to ban such advertising or pass judgment on the truth or falsity of the content incorporated in the advertising.

Due to the lengthy process involved in regulatory rulemaking, such a new rule is unlikely to be finalized or come into effect before the 2024 general election.

Stalled Federal Efforts

Not all efforts to regulate the use of AI in political advertising have been successful.

The Federal Election Commission (FEC) had been considering a petition asking it to adopt a newly proposed rule regulating the use of AI in political advertisements. The proposed rule would have amended existing regulations that prohibit a candidate or their agent from "fraudulently misrepresenting other candidates or political parties" to make clear that this prohibition would apply to deliberately deceptive AI-generated campaign ads. Given the FEC's new authority over political advertising on internet channels, new rules targeting deceptive AI-generated ads could have been a significant tool in the fight against campaign-sponsored misinformation online. However, despite extensive public comment and media attention, and to the surprise of many commentators, it appears that the FEC will close the petition without further action. The move follows pressure from Republican FEC commissioners, who have indicated that the Commission should wait for direction from Congress and study how AI is actually being used in political advertising before advancing any new rules.

Action in Congress has also stalled. Members of the House and Senate have introduced legislation that would require disclaimers on political ads that include content created with generative AI, including the "REAL Political Advertisements Act" introduced by Rep. Yvette Clarke in 2023 and the "AI Transparency in Elections Act" introduced by Sens. Amy Klobuchar and Lisa Murkowski in early 2024. There has not been significant movement on any of these proposals, and it is unlikely that any bills governing the use of AI in political advertising will be passed before the November general election.

Outside of political advertising, the U.S. Senate has been considering the so-called "NO FAKES Act," which would establish a federal right of publicity law specifically targeted at giving individuals control over so-called "digital replicas" of their voice and likeness. While this new law would give public figures and everyday citizens a stronger ability to combat unauthorized deepfakes, it would not apply to bona fide First Amendment-protected speech such as news reporting, commentary, satire, and the like. It is also unlikely to come into effect in time to impact the upcoming general election.

Some Things Stay The Same

Although the new state laws and the proposed FCC rule on the use of AI in political advertising promise to reduce misinformation and deception, it is important to note that these measures do not necessarily impact the ability of regular people to use generative AI tools to create and spread deceptive memes and other misinformation about political candidates online. This type of user-generated content may be distinct from political advertising governed by the applicable state laws discussed above. Whether and to what extent people are able to use AI to create deceptive deepfake images, videos or audio of candidates that do not qualify as "political advertising", and to spread them on social media, depends primarily on the guardrails that AI companies and social media platforms impose on their technology and their users.

Many AI providers such as Google (Gemini), OpenAI (ChatGPT), Meta (Llama) and Anthropic (Claude) have publicly announced a desire to create "responsible" AI products. These platforms have introduced user policies and technical barriers that restrict users from easily generating deceptive deepfakes of known individuals or public figures, and that superimpose disclosures on AI-generated content labeling it as such. Many users have nevertheless found ways to circumvent these restrictions. Still other AI providers, such as X (formerly known as Twitter) and its newly released Grok-2 AI tool, intentionally seek to push boundaries and allow users to generate nearly anything they can imagine, without requiring any disclosures, in the name of free speech.

In this context, the many state laws and federal regulations that aim to minimize the deceptive use of AI in political advertising, and even the proposed "NO FAKES Act," may have only a limited impact on the spread of AI-generated disinformation online.
