In recent years, jurisdictions have been scrambling to develop legislation regulating the development and use of AI. Some jurisdictions have already implemented laws, such as the AI Act in the EU, while others continue to assess how to address the unique challenges AI raises in such a rapidly changing technology landscape.

Within Canada, there are AI regulations that apply in some sector-specific areas but there are no comprehensive laws regulating the use and development of AI (and the wait for such laws may be several years).

However, several organizations have issued standards and risk management frameworks for AI use. This article sets out some of the main frameworks that businesses can use as they develop their internal AI governance practices.

Why use an AI risk management framework?

As with the development of any product or adoption of any system, businesses need standards to assess function, benefits, risks and costs. AI in particular can introduce new risks or aggravate existing ones within your business.

Ultimately, your AI governance program should be specific to your industry and business. There is no one-size-fits-all approach. Businesses should also develop internal AI risk classification systems that can address different use cases. Using frameworks from accredited organizations as part of your AI governance program can help your business explain its values, maintain accountability, and build trust with internal and external stakeholders.

Risk management frameworks

While there is an ever-expanding list of frameworks to consider, they tend to share common principles such as transparency and data protection. We encourage those using or developing AI to review these frameworks and consider the extent to which they may incorporate specific risk mitigation measures.

International Organization for Standardization (ISO)

The ISO is a non-governmental organization that publishes thousands of standards and guidance documents agreed upon by international experts. Those pertaining to AI and risk management include:

- ISO/IEC 42001:2023 Artificial Intelligence – Management system purports to be the world's first AI management system standard and provides guidance for using AI responsibly and effectively. It proposes an integrated approach for managing AI projects and can apply to organizations of any size involved in developing, providing or using AI-based products or services.

- ISO 31000:2018 Risk Management Guidelines explain the general risk management process through principles (e.g. integrated, inclusive, dynamic, continual improvement), framework and process.

- ISO/IEC 23894:2023 Artificial intelligence – Guidance on risk management provides guidance on managing AI-specific risks for organizations that develop, produce, deploy or use products, systems and services that utilize AI. The guidance also aims to help organizations integrate risk management into their AI-related activities and functions.

- ISO/IEC 5259:2024 Artificial Intelligence – Data quality for analytics and machine learning

- ISO/IEC DIS 42006 Requirements for bodies providing audit and certification of artificial intelligence management systems (presently in draft)

Human Rights, Democracy, and the Rule of Law Impact Assessment for AI Systems (HUDERIA)

HUDERIA presents a risk-based approach, developed for the Council of Europe's Framework Convention, to assessing and mitigating the adverse impacts of AI systems.1 The framework proposes a collection of interrelated processes, steps and user activities, including (1) preliminary context-based risk analysis, (2) stakeholder engagement, (3) human rights, democracy and rule of law impact assessment, and (4) human rights, democracy and rule of law assurance case. Key principles within the framework include respect for and protection of human dignity, protection of human freedom and autonomy, harm prevention, non-discrimination, transparency and explainability, and data protection and the right to privacy.

OECD framework for the classification of AI systems

The OECD framework provides a user-friendly tool to evaluate AI systems from a policy perspective. It considers a wide range of AI systems across the following dimensions: (1) People & Planet, (2) Economic Context, (3) Data & Input, (4) AI model, and (5) Task & Output. Each dimension contains a subset of properties and attributes to define and assess policy implications and to guide an innovative and trustworthy approach to AI as outlined in the OECD AI Principles.2

United States

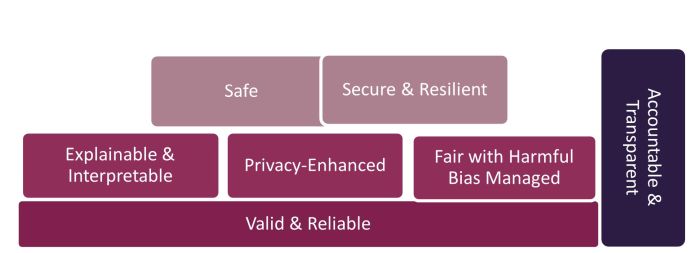

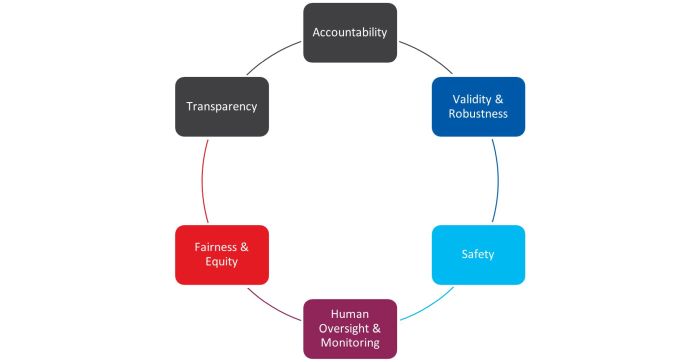

In January 2023, the National Institute of Standards and Technology ("NIST") published its Artificial Intelligence Risk Management Framework (AI RMF 1.0) to help manage the risks AI poses to individuals, organizations and society. The AI RMF articulates characteristics of trustworthy AI and offers guidance for addressing them. Trustworthy AI requires balancing these characteristics based on the AI system's context of use.

In July 2024, NIST released the Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile (NIST AI 600-1) pursuant to President Biden's Executive Order on AI. NIST describes this as a cross-sectoral profile of and companion resource for the AI RMF. The Profile recognizes that generative AI ("GAI") risks can vary along several dimensions, including scope and the stage of the AI lifecycle. Examples of risks the Profile identifies include confabulation (hallucinations), dangerous content, data privacy, harmful bias and homogenization, and environmental impacts.

Canada

In September 2023, the Minister of Innovation, Science and Industry announced a Voluntary Code of Conduct on the Responsible Development and Management of Advanced Generative AI Systems, which we discussed in a prior publication. The purpose of the Code is to provide Canadian companies with common standards and demonstrate responsible development and use of generative AI systems until regulation comes into effect. Developers and managers voluntarily commit to working to achieve the following outcomes in advanced generative systems: accountability, safety, fairness and equity, transparency, human oversight and monitoring, and validity and robustness.

Singapore

In 2019, the Singapore Personal Data Protection Commission (PDPC) released its first edition of the Model AI Governance Framework for consultation. The Model Framework provides guidance to private sector organizations to address key ethical and governance issues when deploying AI solutions. The PDPC released the second edition of the Model Framework in January 2020.3 The Model Framework consists of 11 AI ethics principles: (1) Transparency, (2) Explainability, (3) Repeatability/reproducibility, (4) Safety, (5) Security, (6) Robustness, (7) Fairness, (8) Data governance, (9) Accountability, (10) Human agency and oversight, and (11) Inclusive growth, societal and environmental well-being.

While not a risk management framework as such, AI Verify is an AI governance testing framework and software toolkit that validates the performance of AI systems against internationally recognized principles through standardized tests. It is consistent with international AI governance frameworks such as those from the European Union, OECD and Singapore.4

Footnotes

1. The Alan Turing Institute, available online.

2. Organization for Economic Cooperation and Development (OECD), OECD Framework for the Classification of AI Systems (February 2022) at p 3, available online.

3. Personal Data Protection Commission, Singapore's Approach to AI Governance.

4. AI Verify Foundation, What is AI Verify?

Read the original article on GowlingWLG.com
