
Why Stress-Testing AI Models Matters — Now More Than Ever

Artificial intelligence (AI) and machine learning (ML) are now embedded in the core of banking — powering decisions in credit, fraud, anti-money laundering (AML), and more. These systems bring scale and speed — but also a new type of risk.

AI models are often black boxes: They learn from data, but under stress (such as a downturn, market shock, or regulatory change) they can behave unpredictably. Unlike traditional models, they do not degrade gradually. They can fail fast, misclassifying risk, introducing bias, or generating false positives, which creates reputational, financial, and compliance risk. For example, during COVID-19, many credit underwriting models that had been trained on years of stable economic data suddenly rejected large numbers of qualified borrowers, or conversely underestimated default risk, because they had never encountered pandemic-like conditions in their training history. This illustrated how quickly AI models can break down when exposed to novel stress scenarios.

Regulators Are Responding

- U.S. guidance like SR 11-7 is being reinterpreted for AI.

- The Office of the Comptroller of the Currency's (OCC) Principles for Responsible AI call out fairness, explainability, and model fragility.

- The EU AI Act categorizes many financial AI models as "high-risk," requiring formal oversight.

In this new landscape, the old approach to model validation is not enough. Stress-testing AI models must go beyond accuracy — it must test for fairness, transparency, and resilience under pressure.

At Ankura, we believe AI is not too big to fail — it is too complex to ignore.

This paper introduces our practical framework for stress-testing AI in risk-sensitive applications, helping institutions future-proof their models and stay ahead of regulation.

Why Traditional Stress-Testing Falls Short for AI

Traditional stress-testing was designed for simpler, transparent models. But AI systems behave differently: They are nonlinear, sensitive to data shifts, and harder to interpret. That is why Ankura's stress-testing framework is built specifically for AI — addressing the unique ways these models can fail under pressure.

| Aspect | Traditional Models | AI/ML Models |
|---|---|---|
| Structure | Linear, transparent, easy to interpret | Nonlinear, complex, "black box" |
| Data Sensitivity | More stable under moderate shifts | Highly sensitive to distributional changes |
| Response to Stress | Gradual degradation | Sudden, unpredictable breakdowns |
| Governance and Review | Easier to document and validate | Requires advanced tools for explainability |

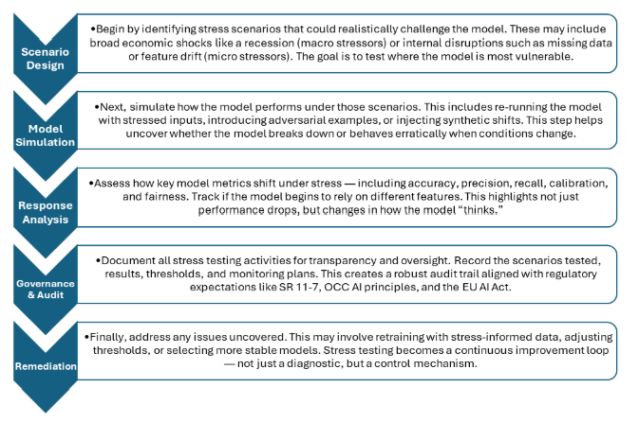

Ankura's 5-Step AI Stress-Testing Framework

Ankura's five-step framework is designed specifically for AI/ML systems, combining technical rigor with governance best practices.

Case Study: How a Loan Default Model Broke Under COVID Stress

To demonstrate how AI models can falter under real-world stress, we stress-tested a loan default model using Lending Club data (2007–2018). Our aim was to see how the model, trained in normal times, would behave under crisis-like conditions — specifically, the COVID-19 economic shock.

Step 1: Applying Macro and Micro-Stressors

We trained the model on pre-COVID-19 data, then tested it on a synthetic dataset mimicking the COVID-19 era (see footnote 1), incorporating higher unemployment, income drops, and elevated default rates. We also added micro-stressors such as distorted borrower features (e.g., inflated debt-to-income ratios, altered credit scores).

The test set was designed to reflect both real economic turmoil and data instability — the kind of conditions a production model would face in crisis.
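The stressor design described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the pipeline used in the study: the `Borrower` fields, the `apply_stress` helper, and the shock sizes (`income_drop`, `debt_noise`, `score_shift`) are all hypothetical and uncalibrated.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Borrower:
    """Hypothetical borrower record; field names are illustrative only."""
    annual_income: float  # USD per year
    monthly_debt: float   # USD of monthly debt payments
    credit_score: int     # FICO-style score, clamped to 300-850

    @property
    def dti(self) -> float:
        """Debt-to-income ratio in percent (monthly debt / monthly income)."""
        return 100.0 * self.monthly_debt / (self.annual_income / 12.0)


def apply_stress(b, rng, income_drop=0.20, debt_noise=0.10, score_shift=-30):
    """Return a stressed copy of one borrower.

    Macro-stressor: a proportional income drop (assumed size).
    Micro-stressors: random upward noise on debt and a downward score shift.
    """
    income = b.annual_income * (1.0 - income_drop)
    debt = b.monthly_debt * (1.0 + rng.uniform(0.0, debt_noise))
    score = min(850, max(300, b.credit_score + score_shift))
    return replace(b, annual_income=income, monthly_debt=debt, credit_score=score)


rng = random.Random(0)  # fixed seed so the stressed set is reproducible
baseline = Borrower(annual_income=60_000, monthly_debt=1_000, credit_score=700)
stressed = apply_stress(baseline, rng)
print(f"DTI before: {baseline.dti:.1f}%  after: {stressed.dti:.1f}%")
```

Keeping the original record immutable and returning a stressed copy makes it straightforward to score the same borrower under both scenarios and compare the two predictions side by side.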

Step 2: Measuring Model Behavior

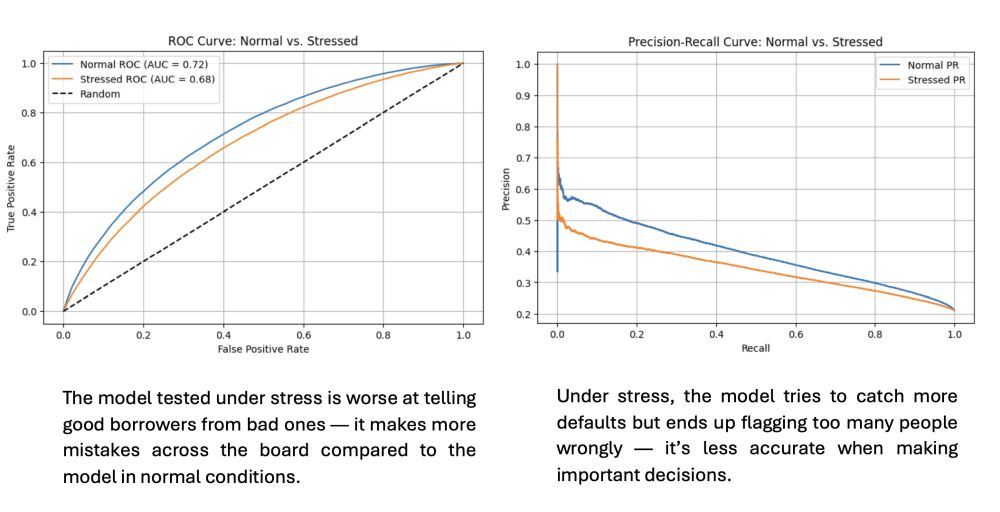

We used an XGBoost-based classification model to compare predictions under two scenarios:

- Pre-Stress: Normal economic inputs

- Post-Stress: COVID-19-like stressed inputs

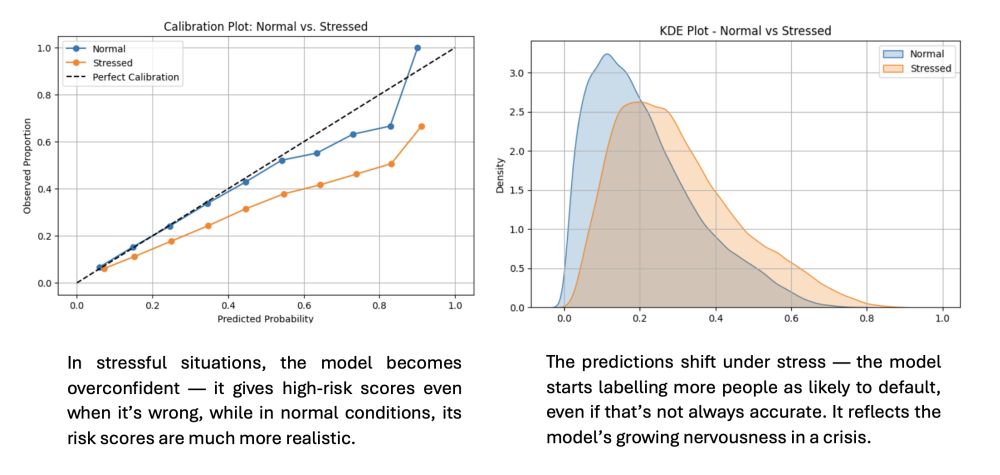

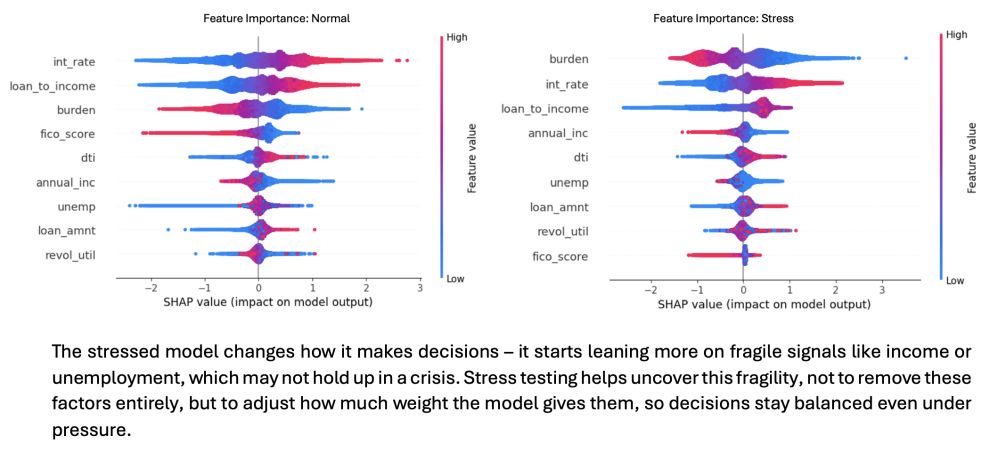

We evaluated both performance metrics and behavioral shifts using Receiver Operating Characteristic (ROC) curves, precision-recall, calibration plots, and feature importance charts.

| Metric | Pre-Stress | Post-Stress | Interpretation |
|---|---|---|---|
| True Positives (TP) | 4440 | 9577 | Better detection of defaults |
| False Negatives (FN) | 34143 | 29006 | Fewer missed defaults |
| False Positives (FP) | 3929 | 14292 | Spike in false alarms |
| True Negatives (TN) | 140959 | 130596 | Decrease in correct non-default classification |
| Precision | 53% | 40% | Declines due to higher FP |
| Recall (Sensitivity) | 12% | 25% | Improves due to lower FN |
| Accuracy | 0.792 | 0.764 | Modest drop in overall correctness |
| AUC (ROC) | 0.718 | 0.677 | Shows degraded discrimination power |
| Calibration | Near-perfect | Overconfident | Model overstates certainty; risk of misinformed decisions |
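The precision, recall, and accuracy rows follow directly from the four confusion-matrix counts in the table. The short sketch below recomputes them; the `confusion_metrics` helper is our own illustrative name, and the false positive rate is an extra figure derived from the same counts rather than one reported in the table.

```python
def confusion_metrics(tp, fn, fp, tn):
    """Derive standard classification metrics from confusion-matrix counts."""
    total = tp + fn + fp + tn
    return {
        "precision": tp / (tp + fp),            # share of flagged loans that default
        "recall": tp / (tp + fn),               # share of defaults that are flagged
        "accuracy": (tp + tn) / total,          # share of all loans classified correctly
        "false_positive_rate": fp / (fp + tn),  # share of good loans wrongly flagged
    }


# Counts copied from the table above.
pre = confusion_metrics(tp=4440, fn=34143, fp=3929, tn=140959)
post = confusion_metrics(tp=9577, fn=29006, fp=14292, tn=130596)

for name in pre:
    print(f"{name:20s} pre={pre[name]:.3f}  post={post[name]:.3f}")
```

Run on the table's counts, this reproduces the reported precision (0.531 vs. 0.401), recall (0.115 vs. 0.248), and accuracy (0.792 vs. 0.764). The derived false positive rate rises from roughly 2.7% to 9.9%, which is the "spike in false alarms" expressed as a share of non-defaulting borrowers.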

What We Found

- Performance Drift: While the model captured more defaults (higher recall), it also flagged many good borrowers as risky — precision dropped significantly.

- Confidence Collapse: Calibration plots showed the model became overconfident under stress, inflating its predicted default probabilities.

- Feature Fragility: SHapley Additive exPlanations (SHAP) analysis revealed an increased reliance on unstable features such as income and employment, leading to erratic behavior.

- Output Distribution Shift: The Kernel Density Estimation (KDE) plot showed a visible rightward shift in predicted risk scores — signaling overreaction to stress inputs.
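One common way to put a number on the calibration finding is the expected calibration error (ECE): bin the predicted probabilities, then compare each bin's average prediction with its observed default rate. The sketch below is a minimal pure-Python version with toy data; it is not the calibration analysis from the study, and the function names and bin count are our own assumptions.

```python
def calibration_table(probs, labels, n_bins=10):
    """Return (mean predicted prob, observed default rate, count) per non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        bins[idx].append((p, y))
    rows = []
    for members in bins:
        if members:
            mean_p = sum(p for p, _ in members) / len(members)
            rate = sum(y for _, y in members) / len(members)
            rows.append((mean_p, rate, len(members)))
    return rows


def expected_calibration_error(probs, labels, n_bins=10):
    """Count-weighted average gap between predicted and observed default rates."""
    rows = calibration_table(probs, labels, n_bins)
    n = len(probs)
    return sum(count / n * abs(mean_p - rate) for mean_p, rate, count in rows)


# Toy data: one default among four borrowers (observed rate 25%).
labels = [1, 0, 0, 0]
calibrated = [0.25, 0.25, 0.25, 0.25]  # predictions match the observed rate
inflated = [0.75, 0.75, 0.75, 0.75]    # predictions overstate default risk

print(expected_calibration_error(calibrated, labels))
print(expected_calibration_error(inflated, labels))
```

In the toy example the calibrated scores give an ECE of zero, while the inflated scores give 0.5. Tracking the signed gap per bin (prediction minus observed rate) would additionally show the direction of the miscalibration.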

Key Takeaways for Business Leaders

- More defaults flagged, but less precisely: Precision dropped from 53% to 40%, making the model less useful for credit decisions.

- The model became fragile: Over-reliance on volatile inputs made outputs unstable and less trustworthy.

- Real risk of operational failure: In live systems, this could mean bad loans get approved, or good customers get unfairly rejected.

What We Recommend

Make AI models more resilient.

- Retrain on stress-informed data: Include past crisis periods in training data.

- Rebalance model thresholds: Optimize tradeoff between false positives and negatives.

- Add monitoring alerts: Track shifts in key features like income or Debt-to-Income (DTI).

- Refresh calibration regularly: Ensure risk scores remain meaningful.

- Use hybrid guardrails: Add rule-based overrides during high-risk periods.
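The monitoring recommendation above can be illustrated with the Population Stability Index (PSI), a widely used drift measure that compares how a feature is distributed in training data versus live data. This is a sketch under assumptions: the bin edges, the DTI values, and the 0.25 alert threshold (a conventional rule of thumb, not a figure from this study) are all illustrative.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples of one feature.

    `edges` are sorted interior bin boundaries; empty bins are floored at
    `eps` so the logarithm stays defined.
    """
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            idx = sum(v >= e for e in edges)  # number of edges at or below v
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff for a significant shift

train_dti = [10, 15, 20, 25, 30, 35]  # hypothetical training-period DTI (%)
live_dti = [25, 30, 35, 40, 45, 50]   # hypothetical stressed-period DTI (%)
edges = [20, 30, 40]

score = psi(train_dti, live_dti, edges)
if score > ALERT_THRESHOLD:
    print(f"ALERT: DTI distribution shifted (PSI={score:.2f})")
```

The same check can be run per feature on a schedule, so that a shift in income or DTI is flagged before it shows up as degraded precision or calibration.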

Ankura's AI Model Risk Services

Ankura helps institutions deploy AI responsibly by combining technical expertise with regulatory insight. Our services ensure models are robust, fair, explainable, and audit-ready.

- Model Validation: End-to-end validation covering data, design, and outcomes — aligned with SR 11-7 and global best practices.

- Stress-Testing: Simulation of shocks and distribution shifts to test model resilience under adverse conditions.

- Fairness Audits: Evaluation of bias and disparate impact across sensitive attributes.

- Explainability: Use of SHAP, Local Interpretable Model-agnostic Explanations (LIME), and other tools to improve transparency and support governance.

- Governance Support: Setup of model documentation, monitoring plans, and audit trails for compliance.

Whether you're building your first ML model or scaling AI across the enterprise, we bring rigor and oversight to your model risk management.

Footnote

1. To build the synthetic COVID scenario, we anchored macroeconomic assumptions (unemployment, income shocks, default rates) to ranges observed during 2020–2021 using public sources such as the Federal Reserve Economic Data (FRED). These were then translated into borrower-level features by proportionally adjusting key inputs (e.g., increasing debt-to-income ratios, reducing disposable income, shifting credit score distributions). While simplified, this approach allowed us to capture realistic stress dynamics at the borrower level.

