As we previously discussed, earlier this year the National Institute of Standards and Technology (NIST) launched the Trustworthy and Responsible AI Resource Center. Included in the AI Resource Center is NIST's AI Risk Management Framework (RMF) alongside a playbook to assist businesses and individuals in implementing the framework. The RMF is designed to help users and developers of AI analyze and address the risks of AI systems while providing practical guidelines and best practices to address and minimize such risks. It is also intended to be practical and adaptable to the changing landscape as AI technologies continue to mature and be operationalized.

The first half of the RMF discusses these risks and the second half discusses how to address the risks. When the AI RMF is properly implemented, organizations and users should see enhanced processes, improved awareness and knowledge, and greater engagement when working with AI systems. The RMF describes AI systems as "engineered or machine-based systems that can, for a given set of objectives, generate outputs such as predictions, recommendations, or decisions influencing real or virtual environments. AI systems are designed to operate with varying levels of autonomy."

Understanding and Addressing Risks, Impacts, and Harms with AI Systems

Utilizing AI systems offers individuals and organizations (collectively referred to in the RMF as "actors") a myriad of benefits including increased productivity and creativity. However, the RMF recognizes that AI systems, when used incorrectly, can also bring harm to individuals, organizations, and the general public. For example, the RMF outlines that AI systems can amplify discrimination, create security risks for companies, and exacerbate climate change issues. The RMF allows actors to address both the positive and negative impacts of AI systems in a coordinated fashion.

As many cybersecurity professionals understand, risk is a function of the likelihood that an event will occur and the harm that could result if that event occurs. Negative outcomes can include harm to people, organizations, or an ecosystem. In practice, risk is hard to quantify with any precision since there may be significant uncertainty in the likelihood of the event occurring and it is often difficult to recognize the impacts of the harms should any of them occur. The RMF describes some of these challenges, including:

- Risks related to third-party software, hardware, and data: While third-party data or systems can be useful to accelerate the development of AI systems, they represent unknowns that can complicate measuring risk. Furthermore, the users of AI systems may not use such systems in the way that their developers and providers intended. Developers and providers may be surprised when the use of an AI system in production is vastly different from its use in a controlled development environment.

- Availability of reliable metrics: Calculating the potential impact or harm when using AI systems is complex and may involve many factors.

- Risk at different stages of the AI lifecycle: An actor using an off-the-shelf system will face different risks than an actor building and training its own system.

The RMF recognizes that companies need to determine their own tolerance for risk, and some organizations may be willing to bear more risk than others, depending on the legal or regulatory context. It also recognizes that addressing and minimizing every risk is neither efficient nor cost effective, so businesses must prioritize which risks to address. Similar to how businesses should address cybersecurity and data privacy risks, the RMF suggests that risk management be integrated into organizational practices, as different risks will present themselves at different stages of those practices.

The RMF also recognizes that trustworthiness is a key feature of AI systems. Trustworthiness is tied to the behavior of actors, the datasets used by AI systems, the behavior of the users and developers of AI systems, and how actors oversee these systems. The RMF suggests the following characteristics affect the trustworthiness of an AI system:

- Validation and reliability: Actors should be able to confirm that the AI system has fulfilled specific requirements and that it is able to perform without failure under certain conditions.

- Safety: AI systems should not endanger human life, health, property, or the environment.

- Security and resiliency: AI systems should be able to respond to and recover from both unexpected adverse events and changes.

- Accountability and transparency: Actors should be able to access information about AI systems and their outputs.

- Explainability and interpretability: AI systems should be able to provide the appropriate amount of information to actors and provide a certain level of understanding.

- Privacy-enhancement: When appropriate, design choices for AI systems should incorporate values such as anonymity, confidentiality, and control.

- Fairness with harmful bias managed: AI systems risk perpetuating and exacerbating already-existing discrimination. Actors should be prepared to prevent and mitigate such biases.
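To make the earlier point about risk being a function of likelihood and harm more concrete, the following is a minimal Python sketch of a risk register that scores each risk and keeps only those above an organization's chosen tolerance. The `Risk` class, the 0-to-1 likelihood scale, the 1-to-5 impact scale, the multiplication rule, and the example entries are all illustrative assumptions. The RMF does not prescribe a scoring formula, and in practice both inputs are uncertain estimates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: float  # estimated probability of the event, 0.0 to 1.0 (assumed scale)
    impact: int        # estimated severity of harm, 1 (minor) to 5 (severe) (assumed scale)

    @property
    def score(self) -> float:
        # One common simplification (an assumption here): risk = likelihood x impact.
        return self.likelihood * self.impact


def prioritize(risks: list[Risk], tolerance: float) -> list[Risk]:
    """Return the risks whose score exceeds the tolerance, highest score first."""
    above = [r for r in risks if r.score > tolerance]
    return sorted(above, key=lambda r: r.score, reverse=True)


# Hypothetical register entries, for illustration only.
register = [
    Risk("biased hiring recommendations", likelihood=0.5, impact=4),
    Risk("third-party training data of unknown origin", likelihood=0.25, impact=4),
    Risk("chatbot gives an off-topic answer", likelihood=0.5, impact=1),
]

for risk in prioritize(register, tolerance=0.75):
    print(f"{risk.score:.2f}  {risk.name}")
```

An organization with a higher tolerance would pass a larger `tolerance` value and see a shorter list, which mirrors the point that different organizations may choose to bear different amounts of risk.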

AI RMF Risk Management Core and Profiles

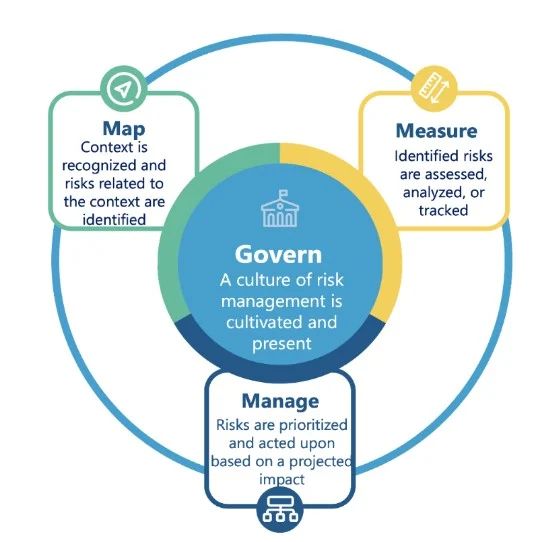

At the core of the AI RMF (RMF Core) are fundamental functions that are designed to provide a framework to help businesses develop trustworthy AI systems. These functions are: govern, map, measure, and manage, with the "govern" function designed to influence each of the others.

Figure 1: Risk Management Core (NIST AI 100-1, Page 20).

Each of these functions is further broken down into categories and subcategories designed to achieve the high-level functions. Given the vast number of subcategories and suggested actions, the RMF Core is not meant to serve as a checklist that businesses use to merely "check the box." Instead, the AI RMF counsels that risk management should be continuous, timely, and performed throughout the AI system's lifecycle.

The AI RMF also recognizes that there is no "one size fits all" approach when it comes to risk management. Actors should build a profile specific to the use case of the AI system and select the appropriate actions to perform and achieve the four functions. While the AI RMF describes the process, the AI RMF playbook provides detailed descriptions and useful information on how to implement the AI RMF for some common situations (generally called profiles). The RMF profiles will differ depending on the specific sector, technology, or use. For example, a profile for the employment context would be different, and would address different risks, than a profile for detecting credit risks and fraud.

The RMF Core consists of the following functions:

- Govern. Strong governance is important to developing internal practices and norms critical for maintaining organizational risk management. The govern function describes categories that help implement the policies and practices of the three other functions. These include creating accountability structures, establishing workplace diversity and accessibility processes so that AI risks are evaluated by a team with diverse viewpoints, and developing organizational teams that are committed to a culture of safety-first AI practices.

- Map. The map function helps actors to contextualize risks when using AI systems. By implementing the actions provided under map, organizations will be better able to anticipate, assess, and address potential sources of negative risks. Some of the categories under this function include establishing and understanding the context of AI systems, categorizing the AI system, understanding the risks and benefits for all components of the AI system, and identifying the individuals and groups that may be impacted.

- Measure. The measure function uses quantitative and qualitative tools to analyze and monitor AI risk and to help actors evaluate the use of their AI systems. The measurements should track various targets such as trustworthiness characteristics, social impact, and quality of human-AI interactions. The measure function's categories include identifying and applying appropriate methods and metrics, evaluating systems for trustworthy characteristics, implementing mechanisms for tracking identified risks over time, and gathering feedback about the efficacy of measurement.

- Manage. After determining the relevant risks and proper amount of risk tolerance, the manage function assists companies with prioritizing risk, allocating resources appropriately to address the highest risks, and enabling regular monitoring and AI system improvement. The manage function's categories include prioritizing risks following assessment from map and measure, strategizing on how to maximize AI benefits and minimize AI harms, and managing AI risk from third parties.

In this manner, the playbook provides specific, actionable suggestions on how to achieve the four functions.
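As a rough illustration of how a profile ties a specific use case to actions under the four functions, the sketch below models a profile as a simple Python data structure. The `Profile` class, its `select` method, and the action wording are hypothetical and only loosely paraphrase the categories described above; they are not taken from the RMF or the playbook, and the structure is meant as a planning aid rather than a compliance checklist.

```python
from dataclasses import dataclass, field

# The four RMF Core functions named in the article.
FUNCTIONS = ("govern", "map", "measure", "manage")


@dataclass
class Profile:
    use_case: str
    # Actions selected for this use case, grouped by function (hypothetical structure).
    actions: dict[str, list[str]] = field(
        default_factory=lambda: {f: [] for f in FUNCTIONS}
    )

    def select(self, function: str, action: str) -> None:
        """Add an action under one of the four functions, ignoring duplicates."""
        if function not in FUNCTIONS:
            raise ValueError(f"unknown function: {function}")
        if action not in self.actions[function]:
            self.actions[function].append(action)


# Two hypothetical profiles that select different actions for different contexts.
employment = Profile("employment screening")
employment.select("govern", "assign accountability for AI risk decisions")
employment.select("map", "identify individuals and groups that may be impacted")
employment.select("measure", "evaluate the system for harmful bias")

credit = Profile("credit risk and fraud detection")
credit.select("govern", "assign accountability for AI risk decisions")
credit.select("map", "understand risks from third-party data")
credit.select("manage", "manage AI risk from third parties")

for profile in (employment, credit):
    print(profile.use_case)
    for function in FUNCTIONS:
        for action in profile.actions[function]:
            print(f"  {function}: {action}")
```

Both profiles share the same govern action, reflecting the idea that governance cuts across the other functions, while the map, measure, and manage selections differ by use case.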

Impact to Businesses

The AI RMF helps businesses develop a robust governance program and address risk for their AI systems. While the use of the AI RMF is currently not required under any proposed law (including the EU's Artificial Intelligence Act), the AI RMF, as with other NIST standards and guidance, will undoubtedly prove useful in helping businesses comply with the risk analysis requirements of such laws in a way that is structured and repeatable. Therefore, businesses considering providing or using AI systems should also consider using the AI RMF to analyze and minimize the risks. Businesses may be asked to show the high-level documentation produced as part of their use of the AI RMF to regulators and may also consider providing such documentation to their customers to reduce concerns and enhance trust.

The authors gratefully acknowledge the contribution of Mathew Cha, a student at UC Berkeley School of Law and 2023 summer associate at Foley & Lardner LLP.
