
This decision relates to a European patent application concerning a process for making an audio-visual interface. The Board disagreed with the first-instance Examining Division. It held that, even if the claimed shooting technique were a common measure, its use in the system of the closest prior art would not be obvious because of the technical differences from that prior art. It further held that implementing the claimed image-obtaining and image-projecting method, even if regarded as a mere presentation of information, would exceed the general design capabilities of the skilled person. Here are the practical takeaways from the decision T 0799/19 (Cinematographic image interface/BLUE CINEMA) of October 11, 2021, of Technical Board of Appeal 3.5.05:

Key takeaways

- Even if it were to be assumed that a feature (shooting a subject) in itself was a common measure, its use in the system of the prior art may not be obvious to the skilled person because of the technical differences between the prior art and the claimed invention

- Even if obtaining and projecting an image is considered an obvious measure, and the image provided is only a different presentation of a virtual agent to the user with no technical effect, implementing the image-obtaining and image-projecting method in the system of the prior art may necessitate structural modifications of the prior-art device which exceed the general design capabilities of the skilled person

The invention

The invention relates to a process for making an audio-visual interface. It aims to present an interactive real human being (filmed rather than generated with computer graphics), which is then reproduced with a three-dimensional effect to increase the viewer's visual involvement.

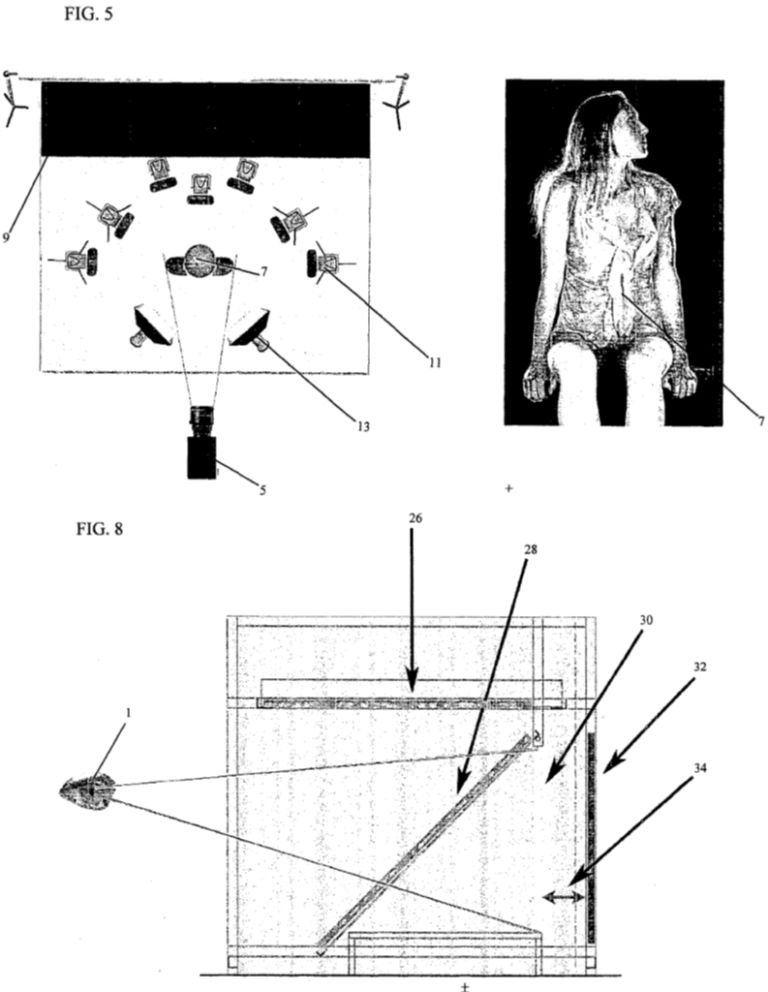

To this end, the invention films the subject with a shooting machine (camera) of a specific technology, so that images of the subject are obtained with the "edge cutting" effect, as shown in Fig. 5. The recorded images are then reproduced, using specific method steps, on a plane made of transparent polycarbonate placed at 45°, as shown in Fig. 8.

Figs. 5 and 8 of EP 2 965 172 A1

Claim 1 (main request)

Process for making an audio-visual interface which reproduces a complete interactive human being as a chosen subject (7), the process, comprising the steps of:

– interactivity analysis;

– image acquisition; and

– reproduction, through an optical or television system with voice, gesture, logic interaction capabilities,

wherein the step of interactivity analysis comprises the sub-steps of: using an optical apparatus which reproduces with three-dimensional effect the human being, equipped with a sensor/controller (3) capable of performing a voice recognition, able to interpret the human language, the interpretation of the human language allowing to identify the information semantics; triggering the appropriate event depending on the received voice command, communicating with a computer through an interface to connect external devices; perceiving the presence, absence and related status change, and a series of movements by a viewer/user (1), and

wherein the chosen subject (7) recites all provided segments, namely ACTION, and a series of non-verbal actions, namely IDLE, PRE-IDLE and BRIDGE, which provide the interface with a presence effect due to the simulation of the human behavior during the dialogue, the visual sequences to shoot being segmented according to an interactivity logic to obtain a sensation of talking with a human being, the logic sequences being divided into four main categories: 1) IDLE sequence (indifference status in which the interface "lives" waiting for a status change command); 2) PRE-IDLE sequence (a behavior which occurs before the action); 3) BRIDGE sequence (an image transition which helps giving visual continuity to the interface between the IDLE/PRE-IDLE status and the ACTION status); and 4)ACTION sequence (in which the interface performs the action or makes the action performed), wherein:

– the step of image acquisition provides for the use of a cinematographic technique comprising the sub-steps of: shooting the subject (7) with a high-definition digital shooting machine (5) with scanning interlaced in a vertical, instead of horizontal, position, on the digital shooting machine (5) oscillating tilt and shift optics being mounted to obtain field depth according to the Scheimpflug rule or condition, which states that, for an optical system, the focal plane generated by the objective and the focal plane of the subject (7) meet on the same straight line; obtaining images of the subject (7) with widespread and uniform illumination on the front to obtain a general opacity of the subject (7) and an illumination of well accurate side cuts and backlights to draw the subject (7) on its whole perimeter to increase the three-dimensionality effect and have well defined edges detached in an empty space, the subject (7) being under total absence of lighting in the areas surrounding his edge, thereby obtaining the "edge cutting" effect necessary for reproducing the images;

– the process further comprises the sub-steps of projecting the image from a LED monitor matrix (26) in a reverse vertical position on a plane made of transparent polycarbonate (28) placed at 45° with respect to the matrix (26);

– the step of reproduction comprises the sub-steps of: providing an optical reproduction system adapted, through the reflection of images generated by a LED monitor matrix (26) or other FULL HD source, to have the optical illusion of the total suspension of an image in an empty space; reflecting (28) the reproduced images on a slab made of transparent polycarbonate; providing a final image perceived by the viewer (1) on a vertical plane (30) behind the polycarbonate slab, completely detached from a background (32), with which a parallax effect (34) is formed; and

– the process comprises the step of activating the interface for controlling external peripherals outside the optical reproduction system namely domotic peripherals, DMX controlled lights systems for shows or events, a videoprojection of an audio-visual contribution on an external large screen or any other electric or electronic apparatus equipped with standard communication protocols, comprising the steps of:

providing a reproduction (IDLE) of the human being in a standby status;

detecting the presence of one or more users through an input of data provided by the volumetric sensor;

in case of negative input, namely no users are present, going back in a loop to the reproduction of the IDLE status;

in case of positive input, namely users are present, reproducing the PREIDLE sequence and the ACTION sequence with a request to make a control request of the peripheral, possibly showing a list of possible choices;

performing a verbal input by the user;

performing a semantic analysis of the sentence and a logic comparison, possibly executing updated commands by the peripheral;

if the execution is not possible, reproducing the ACTION sequence with a request of inputting again a command on the peripheral;

if the execution is possible, visually executing the BRIDGE sequence and the ACTION sequence in which the interface describes and confirms the execution of the current command and going back to the initial IDLE status;

simultaneously, providing an output through a suitable communication protocol with the peripheral, in which the peripheral is actuated and updated in its status
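Read technically, the final part of claim 1 describes a small state machine: the interface loops in IDLE, checks for users, plays PRE-IDLE and ACTION to invite a command, re-plays ACTION when a command cannot be executed, and otherwise plays BRIDGE and ACTION to confirm before returning to IDLE. The Python sketch below only illustrates that reading; it is not taken from the application or the decision. The class `InterfaceSketch` and the callables `users_present`, `parse_command` and `send_to_peripheral` are hypothetical stand-ins for the volumetric sensor, the semantic analysis and the peripheral communication protocol. For simplicity, the peripheral output is sent right after the confirmation sequence rather than simultaneously with it.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, Iterable, List, Optional


class Sequence(Enum):
    """The four logic sequences named in claim 1."""
    IDLE = auto()
    PRE_IDLE = auto()
    BRIDGE = auto()
    ACTION = auto()


@dataclass
class InterfaceSketch:
    """Hypothetical model of the claimed interaction loop (illustration only)."""
    # Stand-in for the volumetric sensor: True when one or more users are present.
    users_present: Callable[[], bool]
    # Stand-in for the semantic analysis: maps a sentence to a command, or None
    # when the command cannot be executed.
    parse_command: Callable[[str], Optional[str]]
    # Stand-in for the communication protocol towards the external peripheral.
    send_to_peripheral: Callable[[str], None]
    # Record of the sequences "played", in order.
    played: List[Sequence] = field(default_factory=list)

    def play(self, seq: Sequence) -> None:
        # A real system would show the pre-recorded video segment here.
        self.played.append(seq)

    def run_once(self, utterances: Iterable[str]) -> Optional[str]:
        """One pass through the loop; returns the executed command, if any."""
        self.play(Sequence.IDLE)
        if not self.users_present():
            return None  # negative input: go back to the IDLE loop
        self.play(Sequence.PRE_IDLE)
        self.play(Sequence.ACTION)  # invite a control request
        for sentence in utterances:
            command = self.parse_command(sentence)
            if command is None:
                self.play(Sequence.ACTION)  # ask for the command again
                continue
            self.play(Sequence.BRIDGE)
            self.play(Sequence.ACTION)  # describe and confirm the command
            self.send_to_peripheral(command)  # actuate the peripheral
            self.play(Sequence.IDLE)  # back to the initial status
            return command
        return None


if __name__ == "__main__":
    sent: List[str] = []
    ui = InterfaceSketch(
        users_present=lambda: True,
        parse_command=lambda s: "lights_on" if "light" in s else None,
        send_to_peripheral=sent.append,
    )
    print(ui.run_once(["hello", "turn on the light"]), sent)
```

Keeping the sensor, language analysis and peripheral output as injected callables separates the sequencing logic from any particular hardware, which is all this sketch is meant to show.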

Is it patentable?

The first-instance Examining Division found that the subject-matter of claim 1 lacked an inventive step over the disclosure of D1 (US 7 844 467) and the common general knowledge evidenced by documents D2 (US 2011/007079), D3 (WO 2009/155926) and D5 (US 2011/304632).

Document D1 discloses a user interface for a hand-held device wherein a virtual agent based on computer-generated animated images and voice is presented to the user on the interface's display. It aims to design the virtual agent as "human" as possible and controls the virtual agent's facial movements based on stored video recordings of human speakers. The distinguishing features were identified as follows:

2. It was common ground in the oral proceedings that D1 is to be considered, as in the impugned decision, as the closest prior art to the subject-matter of claim 1.

The board agrees with the decision in point 9.1 that at least the following features A to D of claim 1 are not disclosed in D1:

A: – shooting the subject with a shooting machine with scanning interlaced in a vertical (instead of horizontal) position on the digital shooting machine, oscillating tilt and shift optics being mounted to obtain field depth according to the Scheimpflug rule or condition, which states that, for an optical system, the focal plane generated by the objective and the focal plane of the subject meet on the same straight line;

B: – obtaining images of the subject with widespread and uniform illumination on the front to obtain a general opacity of the subject and an illumination of well accurate side cuts and backlights to draw the subject on its whole perimeter to increase the three-dimensionality effect and have well defined edges detached in an empty space, the subject being under total absence of lighting in the areas surrounding its edge, thereby obtaining the "edge cutting" effect necessary for reproducing the images,

– projecting the image from an LED monitor matrix in a reverse vertical position on a plane made of transparent polycarbonate placed at 45° with respect to the matrix, the step of reproduction comprising the sub-steps of:

– providing an optical reproduction system adapted, through the reflection of images generated by an LED monitor matrix or other FULL HD source, to have the optical illusion of the total suspension of an image in an empty space,

– reflecting the reproduced images on a slab made of transparent polycarbonate,

– providing a final image perceived by the viewer on a vertical plane behind the polycarbonate slab, completely detached from a background, with which a parallax effect is formed;

C: – detecting the presence of one or more users through an input of data provided by the volumetric sensor;

D: – if the execution is not possible, reproducing the ACTION sequence with a request of inputting again a command on the peripheral.

Moreover, the board agrees with the appellant that the following feature is not disclosed in D1:

E: – activating the interface for controlling external peripherals outside the optical reproduction system, namely domotic peripherals, DMX controlled light systems for shows or events, a videoprojection of an audio-visual contribution on an external large screen or any other electric or electronic apparatus equipped with standard communication protocols.

As can be seen above, these features include specific technical limitations. The Board then assessed features A, B and E individually and concluded that they contribute to an inventive step.

In particular, the Board disagreed with the first-instance Examining Division and held that the distinguishing features were not merely common general knowledge: even if a feature were a common measure, its use in the system of D1 would not be obvious, and its implementation would exceed the general design capabilities of the skilled person:

3. As regards feature A, the decision in point 9.2 states that it improves the quality of the captured image by using the common knowledge of the skilled person, as illustrated inter alia by D3. However, the image of the virtual agent displayed on the interface of D1 is not a captured image of a human actor but rather a computer-generated image based on facial features extracted from video recordings of a human actor. Thus, even if it were to be assumed that feature A in itself was a common measure, the board holds that its use in the system of D1 would not be obvious for the skilled person due to the above-mentioned technical difference between the virtual agent images in D1 and those in claim 1.

4. As regards feature B, the decision in point 9.2 asserts that it only defines how the information is presented to the user and thus has no technical effect other than its pure implementation which is in itself straightforward for the skilled person. However, even if it is considered that obtaining and projecting an image as defined by feature B is an obvious measure and that the image provided by feature B is only a different presentation of a virtual agent to the user with no technical effect, the board holds that implementing the image-obtaining and image-projecting method of feature B in the system of displaying a virtual agent of D1 would necessitate structural modifications of the device supporting the interface of D1 which exceed the general design capabilities of the skilled person.

5. As regards feature E, the board notes that D1 does not hint that the virtual agent may be used as an interface for controlling peripherals which are external to the device supporting the interface, such as those listed in claim 1. The virtual agent is described throughout D1 as a computer-generated image which simulates a person listening and answering to a user of the interface. The only connection of the device supporting the interface with an external entity is a connection with a server (144A in figure 4A and 144B in figure 4B) which assists the device in the task of generating the virtual agent behaviour. None of the other cited documents D2 to D5 relates to the controlling of an external peripheral on the part of an interface simulating a human agent.

6. For these reasons, the board holds that the combination of at least features A, B and E with the prior art of D1 would not be considered by the skilled person without the use of hindsight. Thus, the subject-matter of claim 1 involves an inventive step, having regard to the cited prior art. Claims 2 to 4 are dependent claims and, as such, also meet the requirements of Article 56 EPC.

Claim 1 was therefore considered to involve an inventive step, and the appeal was allowed with an order to grant the patent.

More information

You can read the whole decision here: T 0799/19 (Cinematographic image interface/BLUE CINEMA) of October 11, 2021 of Technical Board of Appeal 3.5.05

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.