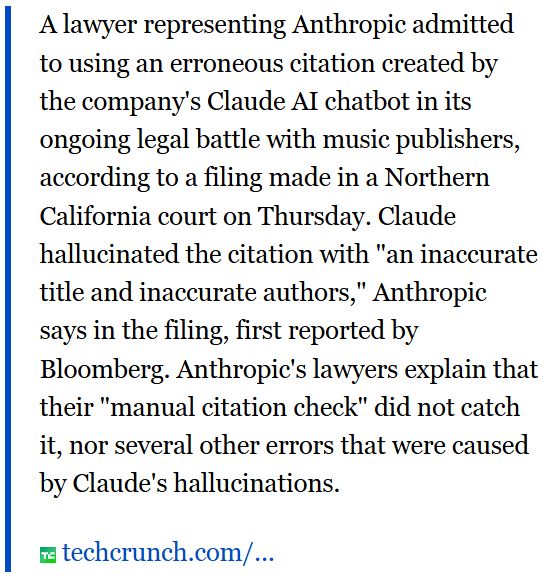

Anthropic, through its LLM Claude, is one of the world's leading AI companies. Latham & Watkins is one of the world's leading law firms. Mix them together, and you get the latest cautionary tale of lawyers' over-reliance on AI. While representing Anthropic in one of the many pending cases challenging AI companies' use of copyrighted materials, Latham apparently relied on Claude to create citations for a report submitted by its expert. Unfortunately, Claude hallucinated a citation, leading Latham and the expert to cite a nonexistent academic article. After the music publishers suing Anthropic noticed the invented citation, Latham was forced to cop to the error, submitting a declaration stating that although there was a real report its expert relied upon, Claude had created a fake citation rather than providing an accurate one. Oops!

As AI continues to proliferate, I will repeat my mantra for every output it produces: "don't trust and definitely verify." With my BSF colleagues, I am litigating several cases against other AI companies, and you can be sure we will check every citation to make sure neither Llama nor ChatGPT is trying to pull a fast one.
