
States are stepping in to regulate AI amid an absence of federal legislation to address fast-changing developments with the technology.

This spring, Colorado became the first state to pass a comprehensive piece of AI legislation that cuts across industries and various aspects of AI usage. Colorado's law zeros in on high-risk AI systems — or those that make "consequential decisions" that could affect consumers' ability to apply for a job, loan, or many other services.

Although other states might follow Colorado's lead and adopt broad legislation, most have taken a more targeted approach to regulating AI, focusing on specific industries and specific applications of the technology.

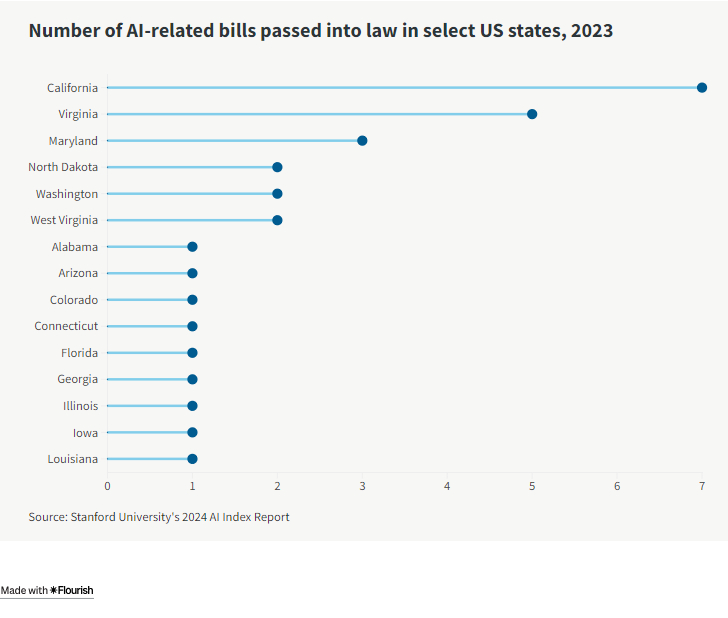

More than 40 states have introduced AI-related legislation this year, according to the National Conference of State Legislatures. Large states, including California, can set standards that companies nationwide can follow to avoid the complexity of complying with multiple regulations. These standards also sometimes serve as models that other states turn to when crafting their own legislation.

Here is a breakdown of how states are approaching AI regulation amid the surge in AI innovation and investment over the past two years:

Industry-Specific AI Regulations

State legislatures are zeroing in on industries including healthcare, insurance, and technology, with goals ranging from protecting patients to eliminating bias and discrimination.

- Healthcare: California proposed a bill in February requiring licensed physicians to monitor AI tools that are used to determine a patient's medical treatments. The bill, called SB 1120, was introduced to ensure that AI serves as a tool to aid — but not replace — a human physician's nuanced understanding of a patient's medical history and treatment needs.

- Insurance: Rhode Island, Louisiana, and New York floated bills aimed at preventing biased outcomes in insurance decisions. New York's bill specifically focuses on preventing discrimination when AI helps determine insurance rates.

- Tech: Some legislative efforts are directed at AI developers. For instance, California's SB 1047 would require that AI developers implement several safety measures before training their AI models. These include ensuring that their models can fully and quickly shut down, as well as developing a process to test whether their models might cause cyberattacks that result in large financial losses.

California's legislature recently passed SB 1047, and the bill now proceeds to California Governor Gavin Newsom's desk for either final approval or a veto. If enacted, SB 1047 could influence AI rules in states across the country, given the large number of AI companies that do business in California, as well as the state's ability to set legal precedents.

Regulations Targeted at Applications of AI

State legislatures are targeting specific uses of AI, with content creation, consumer interactions, and recruitment emerging as common focus points.

- Content creation: Tennessee's Ensuring Likeness Voice and Image Security (ELVIS) Act, which took effect July 1, protects for the first time an individual's voice as a personal right. The law protects recording artists against AI-generated voice clones, which mimic a musician's voice and could mislead the public. It updates an existing law that protects an artist's name, image, and likeness.

- Consumer interactions: The Utah AI Policy Act (UAIPA), which became effective May 1, requires businesses to disclose to consumers when they are engaging with generative AI tools such as chatbots. The UAIPA also creates an office of AI policy to oversee a so-called regulatory sandbox that provides companies with up to two years of relaxed regulations while they develop AI systems.

- Recruitment: New Jersey proposed a bill earlier this year that would require companies using AI in the hiring process to notify job applicants if AI evaluates their video interviews. Under the bill, applicants would be able to opt out of AI-enabled video interviews.

Integration of AI Regulation into Consumer Privacy Laws

In recent years, several states have passed consumer privacy laws that regulate how companies can use automated systems to analyze consumers' personal data. These laws often cover systems that make decisions using AI, even if they don't specifically mention AI.

For instance, the Virginia Consumer Data Protection Act, which went into effect in January 2023, lays out rules regarding profiling — the automated processing of personal data that businesses draw from to make predictions about consumers. Specifically, Virginia's law allows consumers to opt out of profiling that can affect their ability to access loans, jobs, and housing, among other services.

Oregon's consumer privacy law, which went into effect in July, follows a similar framework to Virginia's.

Colorado's More Comprehensive Approach

Colorado enacted a law this spring that aims to protect consumers from bias and discrimination in AI systems. The Colorado AI Act, set to take effect in 2026, resembles the EU AI Act, which is widely described as the world's first comprehensive AI regulation. Both laws are broad, applying to developers and deployers of AI systems used for a range of purposes.

Like the EU AI Act, the Colorado AI Act focuses on regulating high-risk AI systems. The Colorado law defines such systems as those that make "consequential decisions" relating to opportunities and services such as lending, medical care, and employment.

The Colorado law requires developers to document and share information on high-risk AI systems, including inappropriate uses, with deployers or other developers. It mandates that deployers inform consumers when high-risk AI systems are used to make decisions that might, for instance, affect consumers' ability to get a loan.

The Colorado AI Act's emphasis on regulating high-risk AI systems could guide other states in identifying which AI applications to prioritize for regulation. Indeed, though the Colorado AI Act is the first state-level comprehensive AI law, it likely won't be the last, particularly amid an absence of federal AI legislation.
