
AI governance is a critical area of focus for organisations. It can comprise many components, some of which may be more mature than others within your organisation.

Many organisations have established AI governance frameworks, usage policies, and risk policies. Challenges in implementing AI include data privacy and security, budget and resource constraints, and legal compliance or liability concerns. Organisations are also preparing for the EU AI Act, though their levels of preparedness and the impact they anticipate vary.

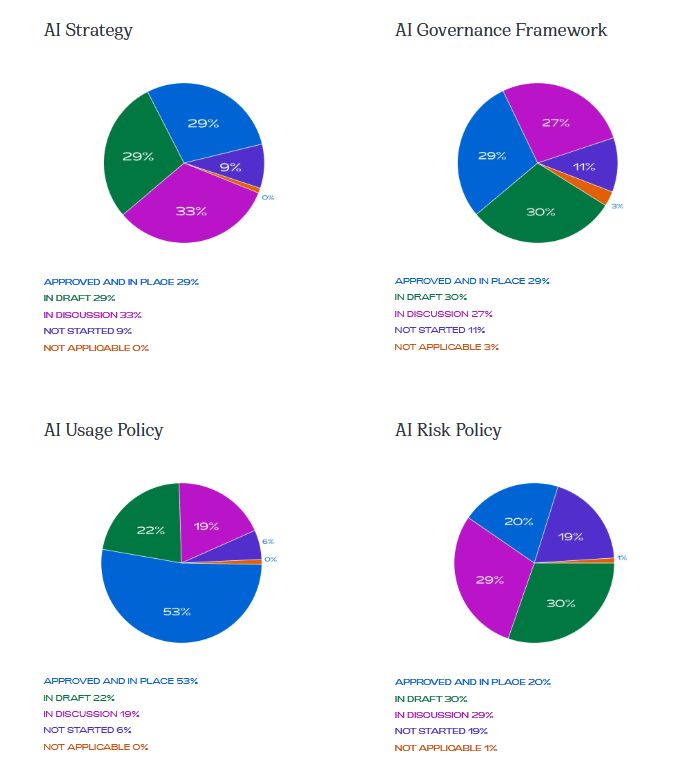

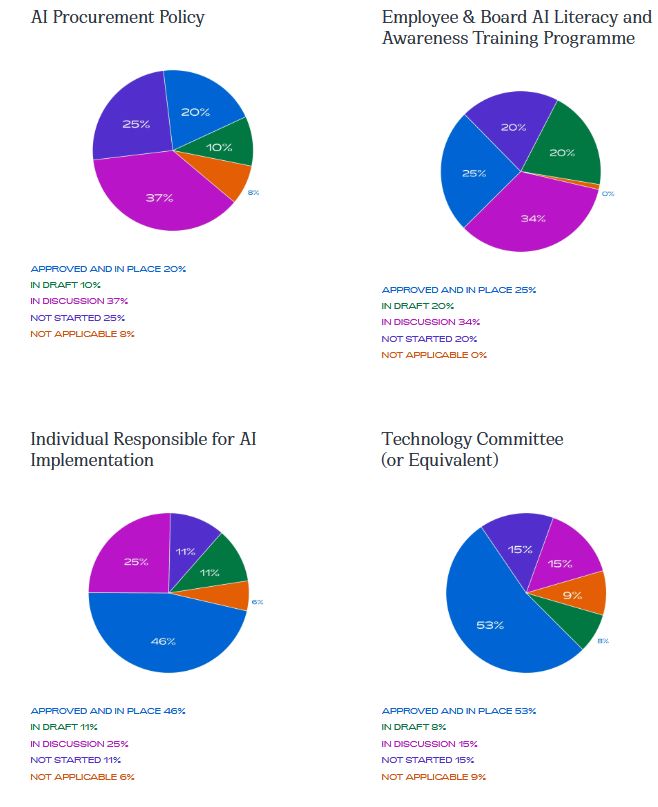

The survey results reveal a varied landscape in the development and implementation of AI governance structures across organisations. While over half of respondents have an AI Usage Policy (53%) and a Technology Committee or equivalent (53%) already in place, other foundational elements such as AI Risk Policies and AI Procurement Policies are still in earlier stages of development or discussion. Notably, only 20% have a risk policy fully approved, and only 20% have a procurement policy in place.

Encouragingly, nearly half (46%) have designated an individual responsible for AI implementation, signalling growing accountability. However, employee and board-level AI literacy training remains a work in progress, with only 25% having such programmes approved.

These findings underscore the uneven maturity of AI governance and highlight the need for more comprehensive and coordinated efforts as organisations scale their AI capabilities.

This article contains a general summary of developments and is not a complete or definitive statement of the law. Specific legal advice should be obtained where appropriate.