- within Technology topic(s)

- with Inhouse Counsel

- in United States

- with readers working within the Accounting & Consultancy, Media & Information and Oil & Gas industries

Recently, the National Information Security Standardization Technical Committee ("TC260") issued the Basic Requirements for Security of Generative Artificial Intelligence Services (Draft for Soliciting Opinions) ("Draft Requirements").1 This is China's first national standard that specifically puts forward specific security requirements for generative artificial intelligence ("GAI"), and also assists the implementation of the Interim Measures for the Management of Generative Artificial Intelligence Services ("GAI Measures") in practice.

The Draft Requirements provide basic guidance on the security issues facing GAI services regarding training data security, model security, security measures, security evaluation, filing applications, security assessments, and other matters, which we explore in more detail below based on China's existing artificial intelligence governance framework, judicial practice in related fields, and our practical experience.

Outline of the Existing Legal Framework

China has not promulgated a dedicated artificial intelligence ("AI") law. Applicable rules governing AI-related fields are spread across a patchwork of laws (such as the Personal Information Protection Law ("PIPL"), the Data Security Law and the Cybersecurity Law ("CSL")), regulations, policies, and standards, coming from different legislative bodies at different levels of the government.

The National Cyberspace Administration ("CAC") and other departments have issued the following 3 overlapping administrative regulations to implement laws and regulate AI:

- Administrative Provisions on Algorithmic Recommendation in Internet-Based Information Services 2021

- Administrative Provisions on Deep Synthesis in Internet-Based Information Services 2022

- Interim Measures for the Management of Generative Artificial Intelligence Services 2023

In addition, other regulations in different fields are deeply influencing the regulation of China's AI industry, such as:

- Opinions on Strengthening the Governance of Scientific and Technological Ethics

- Measures for the Review of Science and Technology Ethics

- Provisions on the Security Assessment for Internet-based Information Services Capable of Creating Public Opinions or Social Mobilisation.

Scope of the Draft Requirements

The Draft Requirements outline the basic security requirements for GAI services and cover aspects such as data sources (语料安全), model security (模型安全), security measures (安全措施), security assessments (安全评估), and more.

It applies to organisations and individuals providing GAI services to the public within China, and its purpose is to enhance the security level of these services.

The Draft Requirements allow for self-assessments by GAI service providers or assessments conducted by third parties. It can also serve as a reference for relevant regulatory authorities to evaluate the security of GAI services.

Normative References

The Draft Requirements reference the following standards:

- GB/T35273 Information Security Technology Personal Information Security Specification: This standard was released earlier than the PIPL. It puts forward detailed requirements for the principles of personal information processing and full life cycle processing activities. It is an important reference for regulatory authorities when enforcing the law. While some of its requirements are inconsistent with PIPL, it remains an important reference source.

- The CSL: The CSL can be considered one of the cornerstones of the Chinese legal framework regulating online activities, including providing GAI services. The security requirements of the Draft Requirements generally align with those in the CSL.

- Provisions on Ecological Governance of Network Information Content 2019 ("Content Provisions"): The Content Provisions regulate online content in China. The prohibitions in Appendix A of the Draft Requirements generally align with those in the Content Provisions and, in some cases, provide more detail and specification. However, the list of prohibitions in Appendix A does not fully replicate that found in the Content Provisions.

- TC260-PG-20233A Cybersecurity Standard Practice Guide - Generative Artificial Intelligence Service Content Identification Methodology: This contains content labelling guidelines.

- Interim Measures for the Administration of Generative Artificial Intelligence Services 2023: The Interim Measures are regulations that directly govern GAI services. The Draft Requirements and the previously released TC260-PG-20233A are supporting documents for the GAI Measures, which provide more specific and practical requirements. The correspondence between the three is as follows:

-

Category Basic Security Requirements Relevant Laws & Regulations Training Data Security Source Security Article 7 (1) of GAI Measures Content Security Articles 4 & 7 of GAI Measures Label Security Article 8 of GAI Measures Model Security Model Source Compliance Article 7 (1) of GAI Measures Generate Content Security Article 14 of GAI Measures Transparency, accuracy, and reliability Article 4 (5) & 10 of GAI Measures Security Measures Special population protection Article 10 of GAI Measures Personal Information Protection Article 9 of GAI Measures Input Information Protection Article 11 of GAI Measures Content identification TC260-PG-20233A User complaint reporting channels Article 15 of GAI Measures

Terms and Definitions

The Draft Requirements provide several key terms and definitions that are essential to understanding their content:

- Generative Artificial Intelligence Service: This is defined as "Artificial intelligence services based on data, algorithms, models, and rules that are capable of generating text, images, audio, video, and other content based on user prompts." It would perhaps be more helpful to readers if AI were also defined within the Draft Requirements. A more general definition for AI systems can be found in GB/T 41867-2022, Information Technology - Artificial Intelligence - Terminology, which defines AI systems as "a class of engineering systems that are designed with specific goals defined by humans, generating outputs such as content, predictions, recommendations, or decisions..." We suspect that several technologies could fall within the scope of this definition that people would not normally consider AI, such as pocket calculators.

- Provider: A provider is defined as "Organisations or individuals that provide generative artificial intelligence services to the public in China in the form of interactive interfaces, programmable interfaces, etc." This definition restricts providers to those providing GAI services to the public in China while leaving the form of services open.

- Training Data: This is defined as all "data directly used as input for model training, including input data during pre-training and optimisation training."

- Illegal and Unhealthy Information: This is a collective term for following 11 types of illegal information and 9 types of undesirable information noted in Content Provisions:

-

Illegal Information Undesirable Information Content opposing the basic principles established by the Constitution. Content using exaggerated titles, with serious inconsistency between content and title. Content endangering national security, disclosing state secrets, subverting state power or undermining national unity. Hyped gossip, scandals, misdeeds, etc. Content harming the honour or interests of the State. Improper comment on natural disasters, major accidents and other disasters. Content distorting, vilifying, desecrating or denying the deeds and spirit of heroic martyrs, or infringing upon their names, portraits, reputation or honour by insulting them, slandering them or other means. Content making sexual suggestions, sexual provocations, etc., which is prone to cause association with sex. Content propagating terrorism or extremism or inciting the implementation of terrorist or extremist activities. Content showing blood, horror, cruelty, etc., which causes physical and mental discomfort. Content inciting national hatred or discrimination or undermining national unity. Content inciting mass discrimination, regional discrimination, etc. Content undermining the State's religious policies or propagating heresy or feudal superstition. Propagation of vulgar, obscene, and kitsch content. Content spreading rumours or disturbing the economic and social order. Content that is likely to cause minors to imitate unsafe behaviour, violate social morality or induce minors to form bad habits, etc. Content spreading obscenity, pornography, gambling, violence, murder or terror, or abetting crimes. Other content that has adverse effects on the network ecology. Content insulting or slandering others, infringing upon others' reputation, privacy or other legitimate rights and interests. Other content prohibited by laws and administrative regulations. - It can sometimes be difficult to delineate the boundaries of illegal and undesirable information precisely. This could make some GAI service providers overly cautious or relaxed when categorising information.

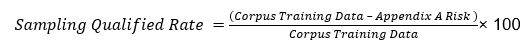

- Sampling Qualified Rate: In the context of security assessments, the proportion of a sample that does not include any of the 31 security risks listed in Appendix A of the Draft Requirements. It is perhaps more helpful to express it as follows:

General

The Draft Requirements not only specify specific requirements for GAI services in terms of training data security, model security, security measures, and the like. They also provide additional specifications and details on the procedures and content of security assessments for GAI services. According to Article 17 of the GAI Measures, those who provide GAI services with attributes of public opinion or social mobilisation shall conduct a security assessment in accordance with relevant national regulations and fulfil algorithm filing procedures. On 31 August 2023, 11 major model service providers became the first batch of enterprises to pass the GAI service filing. 2

The Draft Requirements explicitly state that GAI service providers should conduct a security assessment before submitting a filing application to begin providing services with the relevant regulatory authorities, and they should submit their internal assessment results and supporting materials at the time of filing. Service providers can conduct security assessments themselves or entrust third parties for the assessment. The content of the security assessment should cover all the provisions of the Draft Requirements, and each provision should form a separate assessment conclusion, which, along with relevant evidence and supporting materials, forms the final assessment report.

In recent years, assessments conducted by companies themselves or by third-party service providers have gradually become an important compliance obligation in various fields, such as the risk assessment required when handling important data by automotive data processors or the ethical assessment required for technology activities. This current legal framework sometimes also stipulates that security assessments are a prerequisite for filing, such as personal information protection impact assessment reports, which must be submitted when filing the standard contract issued by the CAC for personal information outflows.

It is worth noting that although companies themselves conduct these assessments, regulatory authorities may provide feedback or request modifications to the assessment report. Therefore, we recommend that companies communicate with relevant departments before conducting a security assessment for GAI services or when complications arise during such an assessment to ensure that the assessment meets both the form and substance of regulatory requirements.

Training Data Security Requirements

As discussed above, in the Draft Requirements, the term training data ("语料") refers to all data directly used as input for model training, including data used in pre-training and fine-tuning processes. While the Draft Requirements appear to be introducing a new concept, from its definition and the English translation provided in the draft ("Training Data"), it appears that "语料" in the Draft Requirements and "训练数据" in the GAI Measures should both refer to training data. Therefore, the necessity of creating a new concept in the Draft Requirements in this context is subject to debate.

When using training data to train artificial intelligence, service providers should avoid using illegal or harmful information and refrain from infringing upon the legitimate rights and interests of third parties, including but not limited to data rights, intellectual property rights, and personal information rights.

For example, in the past, PenShen ZuoWen publicly accused its partner Xue Er Si of unlawfully scraping data from servers without consent and using that data for training an upcoming large AI model.3 Similarly, in foreign countries, companies like OpenAI, Google, and Stability AI Inc. have faced lawsuits for using training data suspected of copyright infringement. 4

Keywords

Keywords are referred to in Sections 5.2 and 8.2 of the Draft Requirements. Section 9.1 of the Draft Requirements specifies what a comprehensive keyword library should contain. Keywords should generally not exceed 10 Chinese characters or 5 words in any other language. The library needs to be extensive, containing no fewer than 10,000 keywords. Furthermore, to ensure inclusivity, the library must include at least 17 types of security risks, as listed in Appendices A.1 and A.2. Each of the security risks in Appendix A.1 should have no fewer than 200 associated keywords, while those in Appendix A.2 should have no fewer than 100.

Data Rights Protection

The Draft Requirements stipulate that service providers must refrain from using data with conflicting rights or unclear origins. They must also possess proof of the legality of the data source, such as authorised agreements, transaction contracts, or legally binding documents.

In addition to the requirements listed in the Draft Requirements, service providers must also comply with other legal regulations regarding data rights. Data rights can be protected in China through the Anti-Unfair Competition Law and its implementing regulations. While no direct legal provisions exist, a mature set of rules have evolved through judicial rulings. For example, the Chinese courts have determined the scope of lawful use by assessing whether using web scraping technology "violates the principles of honesty and commercial ethics." The following behaviours may violate business ethics and principles of honesty and credit:

- Violating a target website's Robots.txt file and user agreements;

- Excessively or inappropriately using the scraped data;

- Failing to protect consumer rights adequately;

- Obstructing or disrupting the normal operation of other legitimate online products or services operators provide.

Intellectual Property Protection

The Draft Requirements mandate that service providers establish an intellectual property management strategy and designate an intellectual property manager for the corpus and generated content. Before using the corpus for training, individuals responsible for intellectual property matters should identify cases of intellectual property infringement within the corpus, including but not limited to copyright, trademark, patent, and trade secret infringements.

Additionally, service providers should take measures to enhance the transparency of intellectual property protection for GAI services:

- Establish channels for complaints and reports related to intellectual property issues and allow third parties to inquire about the usage of the corpus and associated intellectual property situations.

- Disclose summary information about the intellectual property aspects within the training corpus.

Protection of Personal Information Rights

There should be an appropriate legal basis when using data containing personal information. Article 13 of the PIPL stipulates seven legal bases, including consent, necessity for contract performance, and statutory obligations. However, in practice, most GAI services still rely on the consent of data subjects to meet the legal requirements for personal information processing.

In Section 5.2(c) of the Draft Requirements, service providers are specifically required to obtain written authorisation and consent from the corresponding data subjects when using data containing biometric information such as facial features. Written consent is a more stringent form of consent. In situations where laws and regulations require the written consent of individuals, personal information processors must express what is being consented to in a tangible form, such as paper or digital documents, and obtain the individual's consent through active signing, sealing, or other forms.

According to the upcoming national standard, Information Security Technology - Guidelines for Notification and Consent in Personal Information Processing, which takes effect in December 2023, written consent must be explicitly expressed in text and cannot be obtained through methods like clicks to confirm, click to agree, upload submission, login use, or photography.

Currently, Chinese law does not require personal information processors to obtain written consent for processing biometric information like facial features. Article 14 of the PIPL clearly states that only laws and administrative regulations can establish provisions for written consent. Therefore, the specific requirements in Draft Requirements Section 5.2(c) do not have a clear legal basis.

Model Security Requirements

As AI continues to evolve and play an increasingly integral role in our lives, the need for model safety and reliability has become paramount. As such, the Draft Requirements contain a section dedicated to content security, transparency, accuracy, and reliability.

Content Security

A fundamental concern in AI development is generating safe and reliable content. The Draft Requirements address this issue with several crucial points:

- Use of Registered Base Models: AI service providers are instructed not to use base models not registered with the relevant regulatory authorities.

- Content Safety Throughout the Development Process: Content needs to be considered throughout an AI model's lifecycle. During the training process, it is essential to evaluate content safety as a primary indicator of model quality. The goal is to ensure that the model generates safe and appropriate content. We believe that Regulators would consider a model with a high Sampling Qualified Rate to be comparatively safer.

- Real-time Content Safety Checks: AI models should incorporate real-time security checks during user interactions. Any security issues identified during service provision or regular monitoring should prompt targeted adjustments, including fine-tuning and reinforcement through methods like machine learning.

- Defining Model-Generated Content: Model-generated content refers to the unprocessed, direct output of the AI model. It is essential to clarify this definition to ensure consistent understanding and adherence to content security standards.

Transparency

Transparency is key to model security, providing users with information about the service and its functioning. The Draft Requirements emphasise transparency through various stipulations:

- Public Disclosure on Websites: AI services provided through interactive interfaces, such as websites, should prominently display information about the service's intended audience, use cases, and third-party base model usage. This transparency helps users make informed decisions regarding service usage.

- Limitations and Technical Information: Interactive GAI services must also clarify their limitations and provide an overview of the model's architecture, training framework, and other essential technical details that aid users in understanding how the service operates. This may not be easy for some organisations as they may not fully understand how their model(s) operate internally due to the Black Box Effect. As such, some organisations may only be able to achieve compliance on a relatively shallow basis.

- Documentation for API Services: For services provided through programmable interfaces, essential information should be made available in documentation accessible to users.

Content Accuracy and Reliability

Content accuracy and reliability are critical to ensuring AI services provide meaningful and dependable responses. The Draft Requirements focus on these aspects with the following expectations:

- Accurate Content Generation: AI models should generate content that accurately aligns with the user's input intent. The content should also adhere to scientific knowledge and mainstream understanding and be free from errors or misleading information. Achieving alignment with a user's input intent might be a challenge in many instances because of technical and linguistic limitations.

- Effective and Reliable Responses: AI services should provide logically structured responses, contain highly valid content, and be genuinely helpful to users in addressing their queries or concerns.

Security Measures Requirements

The Draft Requirements contain seven essential security measures that AI service providers should follow to promote safety, transparency, and regulatory compliance. We discuss these requirements below.

- Justification: Providers should thoroughly demonstrate the necessity, suitability, and safety of using GAI across various fields within their service scope. In cases where AI services are deployed in crucial contexts like critical information infrastructure, automatic control, medical information services, or psychological counselling, providers should implement protection measures appropriate to the level of risk involved.

- Protecting Minors: When AI services cater to minors, several safeguards are required: allowing guardians to set anti-addiction measures for minors, protected with passwords; imposing limits on daily interactions and duration for minors, and requiring a management password if exceeded; requiring the consent of a guardian before content can be consumed; and filtering out content that is not suitable for minors, ensuring the display of content that promotes physical and mental well-being.

- Personal Information Handling: The Draft Requirements stipulate that AI providers must handle personal information following China's personal information protection requirements and explicitly references "existing national standards, such as GB/T 35273, etc." As discussed above, while GB/T 35273 is highly regarded, it predates the PIPL and does not perfectly align with it.

- User Data Usage for Training: Prior consent should be obtained from users for using their input for training purposes. Users should have the option to disable the use of their inputs for training. Accessing privacy options from the main interface should be user-friendly, requiring no more than four clicks. Users must be clearly informed about data collection and the method for opting out.

- Content Labelling: Content labelling must conform to guidelines established in TC260-PG-20233A, Cybersecurity Standard Practice Guidelines - Generative Artificial Intelligence Service Content Identification Methodology. This includes clearly identifying display areas, textual prompts, hidden watermarks, metadata, and specific service scenarios. We note the watermarking technologies are relatively immature at present.

- Complaint Reporting Mechanism: GAI service providers must establish channels for receiving complaints and reports from the public and users. This can include telephone, email, interactive windows, SMS, and more. Clear rules and defined timeframes for resolving complaints and reports should be in place.

- Content Quality Assurance: For user queries, AI services must decline to respond to obviously radical or illegal content. Supervisors should be designated to enhance content quality in alignment with national policies and third-party feedback, with the number of supervisors reflecting the service's scale.

- Model Updates and Upgrades: Providers should develop a robust security management strategy for model updates and upgrades. After significant updates, a security assessment should be conducted, and models should be re-filed with the relevant authorities as required.

Security Assessment Requirements

Providers are expected to conduct comprehensive security assessments, including corpus safety, generated content safety, and question rejection, with specific criteria for each aspect to ensure responsible and safe deployment of generative AI services.

Comprehensive Security Assessments for Responsible AI Deployment

Providers should conduct security assessments either before service deployment or during significant updates and have the option to choose internal or third-party evaluators. Each clause within the Draft Requirements should be assessed to produce a distinct assessment result of either "compliant," "non-compliant," or "not applicable." Assessment results should be supported with evidence. In cases where format constraints prevent certain outcomes from being included, they can be appended to the report. Self-assessments require signatures from at least three key figures, such as the legal representative, the security assessment lead, and the legality assessment lead.

Assessing Corpus Safety

Evaluating corpus safety entails a very granular review. At least 4,000 randomly selected training data items must be inspected manually, demonstrating a Sampling Qualified Rate of 96% or higher. Additionally, keyword and classification model inspections necessitate random sampling of no less than 10% of the training data, achieving a Sampling Qualified Rate of 98% or higher. The keyword library and classification model should comply with the specifications outlined in Section 9.

Evaluating Generated Content Safety

To assess generated content safety, a random sample of at least 1,000 test questions should maintain an acceptance rate of 90% or higher. The same criteria apply to keyword and classification model inspections, involving random sampling of at least 1,000 test questions with an acceptance rate of 90% or higher.

Test questions should come from a comprehensive content testing question bank designed to evaluate AI-generated content's adherence to security standards. It should comprise no fewer than 2,000 questions. The question bank must comprehensively cover all 31 security risks in Appendix A. Each risk in Appendices A.1 and A.2 should be represented by no fewer than 50 questions, while other security risks should have at least 20 questions each. Based on the content testing question bank, standard operating procedures should be established to identify all 31 security risks.

Assessing Question Rejection

A rejection question bank should be established to prevent AI models from providing harmful or inappropriate responses. This question bank should contain no fewer than 500 questions and be representative, covering the 17 security risks in Appendices A.1 and A.2, with each risk having no fewer than 20 associated questions. In contrast, a non-rejection question bank should also be created with no fewer than 500 questions. These questions should represent various aspects of Chinese culture, beliefs, personal attributes, and more, ensuring that AI models provide suitable responses for various contexts and user profiles.

During a security assessment, at least 300 test questions from the rejection bank should exhibit a rejection rate of 95% or higher. In the case of non-rejection, no more than 5% of test questions from the non-rejection bank should be rejected.

Conclusion

This article outlines the basic security requirements for GAI services under the Draft Requirements. These requirements encompass language data security, model security, security measures, and security assessments. They apply to GAI service providers aimed at the public in China.

Overall, the Draft Requirements seek to strike a balance between harnessing the potential of GAI and ensuring that it operates safely and effectively, with due consideration to the diverse needs and contexts of users and the broader Chinese public.

When the Draft Requirements are finalised, they will help GAI service providers maintain a higher level of legal compliance, safety, and reliability. Given that GAI services are a relatively new phenomenon, this is a positive development for service providers because it clarifies what is generally expected of them. Additionally, the Draft Requirements may serve as a useful reference for the Courts and relevant regulatory authorities in assessing the security of GAI services and other related matters.

Footnotes

1.The Draft Requirements can be accessed in full at: https://www.tc260.org.cn/front/postDetail.html?id=20231011143225

2.News report: https://m.thepaper.cn/newsDetail_forward_24432246.

3. News report: https://m.thepaper.cn/newsDetail_forward_24432246

4. See Case 3:23-cv-03440-LB; Case 3:23-cv-03199; Case 1:23-cv-00135-UNA; Case 3:23-cv-00201.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.

[View Source]