1. The DSA in the Portuguese context: Where are we now?

The Digital Services Act ("DSA") is a European Union regulation that is directly applicable in Portugal. The DSA aims to promote a safer, more transparent and responsible online environment by ensuring consumer protection in the digital space.

The DSA was approved by the European Parliament and the Council in 2022, becoming applicable to all providers of intermediary services on 17 February 2024.

On 31 July 2025, the Portuguese Council of Ministers approved a government bill aimed at ensuring the implementation of the DSA. The bill was submitted to the Portuguese parliament on 4 August 2025. Because the DSA is a directly applicable regulation, it does not require transposition; the bill instead provides the national framework needed to apply and enforce it, making this a decisive moment for the practical application of the European rules on intermediary services in Portugal.

Given the DSA's significant impact on the regulation of digital platforms and providers of intermediary services, it is crucial to grasp its key features and consider the latest developments in Portugal.

2. What does the DSA consist of, and what are its key features?

The DSA establishes rules to promote innovation and ensure the protection of consumers' fundamental rights in a safe, predictable and reliable digital environment.

The Act affects all companies offering intermediary services in the European Union. This includes not only major technology companies that provide search engines and social networks, but also marketplaces, app stores, and collaborative economy platforms, among others, regardless of their size or where they are established.

The DSA aims to combat the spread of illegal content on the Internet and enforce moderation of transmitted content. It also establishes a set of diligence and transparency obligations, modifying the way the platforms interact with their users. Furthermore, it imposes obligations relating to the protection of minors.

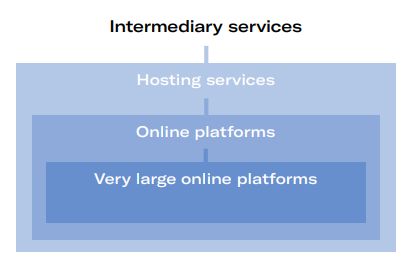

The obligations imposed on providers of intermediary services take into account the size of the intermediary, as well as the different types of providers and the nature of their services.

Due to their large user base, 'very large' 1 online platforms and search engines face stricter obligations as they pose an increased risk of illegal content, goods or services being circulated.

These providers of intermediary services have been subject to the DSA rules since the end of August 2023.

Smaller companies (micro and small enterprises) are exempt from some of the obligations, 2 but are free to apply best practices to benefit from competitive advantages.

In addition to the obligations applicable to each intermediary service provider varying according to their nature and size, the following aspects introduced by the DSA are particularly relevant:

- Mechanisms to act against illegal content: On receiving an order from a national judicial or administrative authority to act against illegal content, providers of intermediary services must inform the relevant authority, without undue delay, of the action taken, specifying whether and when the order was executed. Providers of hosting services must also implement systems that enable individuals or entities to report illegal content.

- Protection of minors: Platforms must take appropriate and proportionate measures to ensure a high level of privacy, safety and security of minors. This includes implementing age verification tools and providing support to help them understand the terms and conditions. Online platform providers must not display advertisements on their interface based on profiling using the personal data of service recipients if they know, with reasonable certainty, that the recipients are minors.

- Liability of providers of intermediary services: The DSA mirrors the E-Commerce Directive's rules on conditional exemption from liability for providers of intermediary services. 3 Essentially, the DSA establishes the conditions under which providers of mere conduit, caching and hosting services are exempt from liability for the information they transmit and store. This exemption is contingent on the provider maintaining a neutral position.

- Prohibition of general monitoring obligation: It is illegal to impose a general obligation on providers of intermediary services to monitor the information they transmit or store, or actively seek facts or circumstances indicating illegal activity. However, nothing prevents providers from doing so voluntarily.

- Terms and conditions: Providers of intermediary services must include the following in their terms and conditions: (i) the restrictions applicable to the use of the service, (ii) content moderation policies and measures (algorithmic decisions and human analysis), and (iii) the rules of the internal complaints system. This information must be clear, accessible and unambiguous, and available in a machine-readable format. Providers must also ensure that any established restrictions are applied diligently, objectively and proportionately, while respecting the legitimate rights and interests of all parties.

- Statement of reasons: With a few exceptions, hosting service providers must clearly and specifically explain to affected users the reason for their decision to restrict content deemed illegal or in violation of their terms and conditions. This applies when one of the following restrictions is imposed: (i) limiting the visibility of content (e.g. removal, blocking access or demotion); (ii) suspension, termination or restriction of payments; (iii) suspension or termination of the service, in whole or in part; or (iv) suspension or closure of the user's account.

- Transparency reporting: Providers of intermediary services must publish an annual transparency report on any content moderation activities they have participated in during the relevant period.

- Point of contact: Service providers must: (i) designate a single point of contact that allows direct communication with the competent authorities; and (ii) designate a single point of contact that allows service recipients to communicate directly and quickly with those providers electronically and in an easily understandable manner. This should particularly allow service recipients to choose means of communication that do not rely exclusively on automated tools.

- Advertising on online platforms: Depending on their size, online platforms displaying advertisements must ensure that each user can clearly identify the following in real time: (i) that it is advertising, with clearly visible signage; (ii) the entity on whose behalf the advertisement is presented; (iii) who is funding the advertisement, if different; (iv) accessible information about the main targeting criteria and, where applicable, how to adjust them.

- Recommender system transparency: Online platforms that use recommender systems must set out in their terms and conditions, in plain language, the main parameters used and the options available to users to change or influence them.

- Strengthening consumer rights in distance contracts: Depending on their size, online platforms should: (i) allow consumers to access essential information about traders offering products or services, such as their name, address and contact details; (ii) ensure that the interface is designed in such a way as to enable traders to comply with their legal obligations, including providing precontractual information and ensuring product compliance and safety; and (iii) where applicable and where they have the consumer's contact details, inform them about the purchase of illegal products or services.

- Appointment of a European Board for Digital Services: An independent advisory group of digital services coordinators has been established to supervise providers of intermediary services. This group is called the 'European Board for Digital Services'.

2.1. WHO DOES THE DIGITAL SERVICES ACT APPLY TO?

The DSA applies to all providers of intermediary services offering services to EU residents, regardless of where they are established. In short, all EU residents benefit fully from the DSA's protection in relation to these services.

Providers of intermediary services that are not established in the EU will be subject to the DSA's rules if they have a significant number of users in one or more Member States, or if they direct their activities at one or more Member States.

Intermediary services include:

- mere conduit services, which transmit information provided by a recipient of the service over a communication network or provide access to such a network, such as internet service providers (ISPs) and domain name registration services;

- caching services that enable the transmission of information provided by a service recipient, such as proxy servers that temporarily store information to enable fast user access;

- hosting services, which include cloud computing and web hosting, as well as online platforms such as marketplaces, app stores and social networks. Within this group, there are also very large online platforms.

Additionally, search engines are subject to certain provisions of the DSA. While not expressly classified as intermediary services, some of their functionalities constitute intermediary services (e.g. in advertising).

3. The DSA in the Portuguese context

On 31 July 2025, the Council of Ministers in Portugal approved a government bill to ensure the implementation of the DSA.

The bill establishes common obligations for providers of intermediary services. It also defines a set of rules for combating the dissemination of illegal content, including the identification of specific illegal material and the provision of clear information to enable providers to locate it.

To avoid fragmentation of responsibilities among the relevant national authorities, the bill establishes ANACOM as the competent authority for digital services in Portugal and specifies its powers. The bill also defines the model for cooperation with judicial authorities and other relevant administrative bodies. 4

4. Other recent developments

4.1. THE DSA IN PORTUGAL: ANACOM PRESENTS 2024 ACTIVITY REPORT AND OPENS PUBLIC CONSULTATION ON THE TRUSTED FLAGGERS GUIDE

On 4 July 2025, ANACOM published its Activity Report for 2024 in its capacity as Coordinator of Digital Services in Portugal, as part of the implementation of the DSA.

The Report presents the activities carried out by ANACOM throughout 2024, in the exercise of its powers as Coordinator of Digital Services, specifically in monitoring and supervising compliance with the DSA. 5

On 12 August 2025, ANACOM approved the draft guide for applying for trusted flagger status. 6 This guide aims to assist entities wishing to apply for trusted flagger status, containing guidance on the application process, including instructions for completing the form.

This draft guide is open for public consultation for 25 working days, i.e. until 19 September 2025. Interested parties may submit their contributions, in writing and in Portuguese, to the email address sinalizadores-confianca@anacom.pt.

Article 22 of the DSA establishes the role of trusted flaggers, entities responsible for identifying potentially illegal content and notifying online platforms of it via the flagging mechanisms set out in the Act.

ANACOM is responsible for granting trusted flagger status to applicant entities that are established in Portugal and demonstrate that they meet all of the cumulative requirements. 7

4.2. AT THE EUROPEAN LEVEL

On 2 July 2025, the European Commission adopted a delegated act setting out the rules that allow authorised researchers to access the internal data of very large online platforms and search engines. 8

The delegated act sets out the legal and technical requirements for authorised researchers to access data from very large online platforms and search engines.

It also establishes a new online portal (the DSA Data Access Portal), through which researchers can contact very large online platforms and search engines, as well as national authorities and Digital Service Coordinators, regarding their requests for access to internal data.

September 2025 will also be a significant milestone in the implementation of the DSA. The Court of Justice of the European Union will deliver rulings on significant cases, thereby reinforcing the pivotal role of European law in the regulation of the digital landscape.

The first decision was handed down on 3 September 2025 in case T-348/23. The General Court ruled that a marketplace can be classified as a Very Large Online Platform (VLOP) with regard to the activity of third-party sellers, even if it is not classified as such with regard to direct sales made by the platform itself. This conclusion was reached using a method that counted active recipients. In the absence of data that distinguished between users exposed to third-party content and those exposed only to the platform's direct activity, the Commission considered the total number of users, which exceeded 83 million per month, well above the 45 million threshold provided for in the DSA.

The Court also rejected arguments that these rules violate principles such as legal certainty, equal treatment and proportionality. In fact, it was pointed out that marketplaces could facilitate the sale of dangerous or illegal products to a significant proportion of the population of the Union, which fully justifies the application of the enhanced obligations provided for VLOPs.

It is important to closely monitor this and future decisions, as they will establish case law on the interpretation and application of the DSA.
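The threshold comparison described in that judgment can be illustrated with a short sketch. It is a simplification for illustration only, not a statement of the legal test: the function and parameter names are hypothetical, and the fallback rule simply mirrors the approach reported above, where the total number of users was taken because no data separated users exposed to third-party content from the rest.

```python
from typing import Optional

# Threshold cited in the article: 45 million average monthly active
# recipients in the EU. All names below are hypothetical.
VLOP_THRESHOLD = 45_000_000


def recipients_to_count(total_users: int, third_party_users: Optional[int]) -> int:
    """Choose the figure to compare against the threshold.

    Assumption for illustration: if no separate figure exists for users
    exposed to third-party content, the total number of users is used.
    """
    return total_users if third_party_users is None else third_party_users


def meets_vlop_threshold(average_monthly_active_recipients: int) -> bool:
    """Return True when the figure reaches or exceeds the threshold."""
    return average_monthly_active_recipients >= VLOP_THRESHOLD


# Figures from the case as reported: about 83 million users per month,
# with no breakdown available for third-party content.
counted = recipients_to_count(total_users=83_000_000, third_party_users=None)
print(meets_vlop_threshold(counted))
```

In practice, designation depends on a Commission decision and on how active recipients are counted, so a numeric check of this kind is only a first screening step.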

5. What impact will the DSA have and what measures must be taken?

The DSA's impact is already being felt in the European digital market. Online platforms and search engines must comply with new obligations ranging from the faster removal of illegal content to providing users with clear and accessible justifications, including the creation of a public transparency database. Very large online platforms, in particular, face enhanced requirements for assessing, mitigating and reporting on systemic risk, reflecting the European regulator's growing focus on these entities' role in protecting fundamental rights and ensuring the digital ecosystem functions smoothly.

These developments demonstrate that the DSA is being implemented and is having a tangible effect on how services are provided and supervised. As oversight and enforcement mechanisms consolidate and new issues, such as the protection of minors and digital advertising, are addressed, scrutiny of platform compliance is likely to intensify.

In this context, it is crucial for companies to assess the extent to which the DSA applies to them now, and review and update their internal policies and procedures in time for the set deadlines. This is not just a legal obligation; it is also an opportunity to strengthen user confidence and demonstrate alignment with the best regulatory practices in the European digital space.

6. Final observation

The Digital Services Act marks an important milestone in the regulation of the European digital environment, with significant consequences for digital platforms and providers of intermediary services in general.

Its implementation in Portugal, which is currently in the legislative phase, will significantly impact how these providers operate and their accountability to users and national/EU authorities.

The DSA is more than just a regulation; it represents a new paradigm, creating a safer, more transparent and responsible digital environment that ensures the protection of users' fundamental rights without compromising the sector's competitiveness and innovation.

Footnotes

1. Very large online platforms and very large online search engines are those whose average number of monthly active users in the EU reaches or exceeds 45 million (equivalent to 10% of the EU population) and which the Commission designates as such.

2. For example, the Section of the DSA applicable to marketplace platforms will not apply "to providers of online platforms allowing consumers to conclude distance contracts with traders that qualify as micro or small enterprises" (Article 29(1) DSA).

3. Directive 2000/31/EC of the European Parliament and of the Council of 8 June 2000 on certain legal aspects of information society services, in particular electronic commerce, in the Internal Market, available at EUR-Lex (32000L0031).

4. In Portugal, Decree-Law 20-B/2024 designated ANACOM as Coordinator of Digital Services. It also designated as competent authorities the Regulatory Authority for Social Communication (ERC), in the field of media and other media content, and the Inspectorate-General of Cultural Activities (IGAC), in the field of copyright and related rights.

5. Report available here.

6. Draft guide for applying for trusted flagger status, available here.

7. ANACOM is responsible for granting trusted flagger status to any applicant established in Portugal that can demonstrate that they meet all of the following requirements: (i) it has particular expertise and competence for the purposes of detecting, identifying and notifying illegal content; (ii) it is independent from any provider of online platforms; (iii) it carries out its activities for the purposes of submitting notices diligently, accurately and objectively.

8. Available here.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.