
On 17 December 2025, the European Commission published a draft Code of Practice on Transparency of AI-Generated Content (the "Code"). The Code does not create new legal obligations. Instead, it seeks to translate the transparency obligations under Article 50 of the EU AI Act into concrete technical and organisational measures that providers and deployers may adopt to help demonstrate compliance. A summary follows; see Sections 1 and 2 of the Code for further details.

ARTICLE 50: A REMINDER

Below is a quick reminder of the relevant provider and deployer transparency obligations under Article 50. At the time of writing, these obligations are due to apply from 2 August 2026. A proposal has been made to pause the Article 50(2) provider obligations until 2 February 2027, but it has not yet been approved.

| Role and provision | If the AI system... | Obligation and exceptions |
|---|---|---|
| Provider, Article 50(2) | ...generates synthetic audio, image, video or text content | Ensure that the content is marked in a machine-readable format so that it can be detected as artificially generated or manipulated. Exception: not required where the system is used to assist editing, or does not substantially alter the input data. |
| Deployer, Article 50(4) | ...generates or manipulates image, audio or video content constituting a deep fake; or ...generates or manipulates text which is published with the purpose of informing the public on matters of public interest | Disclose that the content has been artificially generated or manipulated. Exception (deep fakes): in evidently artistic or satirical contexts, disclosure is only required in a manner that does not hamper the display or enjoyment of the work. Exception (public interest text): not required where the content has undergone a process of human review or editorial control and a natural or legal person holds editorial responsibility for its publication. |

STATUS OF THE CODE

The Code is in draft form. A further draft is expected around March 2026, with a final Code expected in May or June 2026. This timing raises questions as to whether organisations will have sufficient time to implement the Code before the Article 50 transparency obligations above apply, particularly if the proposed pause does not take effect in time. As a result, although it is only a first draft, the Code is already of practical relevance for organisations in scope.

The Code is not mandatory. In fact, it is explicitly positioned as a compliance support tool. In practical terms, this means the Code is one optional way of showing how the relevant Article 50 obligations may be met. However, following the Code does not, in itself, guarantee compliance with Article 50. Equally, organisations remain free to comply with Article 50 obligations through alternative measures, without adhering to the Code at all.

PROVIDER OBLIGATIONS IN PRACTICE

MULTI-LAYERED MARKING: METADATA, WATERMARKING AND FINGERPRINTING

Providers are expected to adopt a layered approach, combining different marking techniques, such as:

- including provenance information directly within the content's metadata where possible (e.g. for images, video files or documents), such as information about the AI system used and the nature of the operation performed;

- however, metadata can be lost when content is copied, reformatted or re-uploaded, and so providers are also expected to embed imperceptible watermarks directly within the content itself (particularly for image, video and audio outputs). These marks are intended to survive common processing steps such as compression, resizing or format changes; and

- where neither metadata nor watermarking is reliable (e.g. for text), providers may rely on fingerprinting or logging mechanisms to allow later verification that content originated from a particular AI system.
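To illustrate the logging approach in the last bullet, the following is a minimal Python sketch, assuming a provider keeps an internal log of SHA-256 hashes of each generated text output together with an identifier for the generating system. The names `fingerprint`, `GenerationLog` and `example-model-v1` are hypothetical and do not come from the Code. An exact hash like this only matches text that has not been substantively edited; more robust fingerprinting schemes aim to survive edits, which this sketch does not attempt.

```python
import hashlib
import unicodedata
from datetime import datetime, timezone
from typing import Dict, Optional


def fingerprint(text: str) -> str:
    # Normalise Unicode form and collapse whitespace so trivial reformatting
    # does not change the hash; any substantive edit still will.
    normalised = " ".join(unicodedata.normalize("NFC", text).split())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


class GenerationLog:
    """Hypothetical provider-side log of generated text outputs (illustrative only)."""

    def __init__(self) -> None:
        self._entries: Dict[str, Dict[str, str]] = {}

    def record(self, text: str, system_id: str) -> str:
        # Log the fingerprint with the generating system and a UTC timestamp.
        fp = fingerprint(text)
        self._entries[fp] = {
            "system_id": system_id,
            "logged_at": datetime.now(timezone.utc).isoformat(),
        }
        return fp

    def verify(self, text: str) -> Optional[Dict[str, str]]:
        # Return the log entry if this (normalised) text was logged, else None.
        return self._entries.get(fingerprint(text))


log = GenerationLog()
log.record("The quarterly results were strong.", system_id="example-model-v1")
print(log.verify("The  quarterly results were  strong.")["system_id"])  # example-model-v1
print(log.verify("The quarterly results were weak."))                   # None
```

Normalising whitespace before hashing is a design choice: it lets verification succeed after harmless reformatting, at the cost of treating texts that differ only in spacing as the same output.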

For multimodal outputs (for example, content combining video, audio and text), providers are expected to ensure that marking techniques are synchronised across modalities. In practice, this means that each component of the output should carry compatible provenance signals, so that AI involvement remains detectable even if one element is altered, removed or replaced.
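One way to picture "compatible provenance signals" is a single identifier shared by every component of a multimodal output. The Python sketch below assumes exactly that; the Code does not prescribe a shared identifier, and `Component`, `inconsistent_components` and `ai_involvement_detectable` are hypothetical names. It flags components whose mark has been lost or replaced, while showing that AI involvement stays detectable as long as any component still carries the signal.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Component:
    modality: str                 # e.g. "video", "audio", "text"
    provenance_id: Optional[str]  # ID carried by this component's mark; None if stripped


def inconsistent_components(components: List[Component], shared_id: str) -> List[str]:
    # Modalities whose provenance signal is missing or differs from the shared ID.
    return [c.modality for c in components if c.provenance_id != shared_id]


def ai_involvement_detectable(components: List[Component], shared_id: str) -> bool:
    # AI involvement remains detectable while any component still carries the shared ID.
    return any(c.provenance_id == shared_id for c in components)


output = [
    Component("video", "gen-123"),
    Component("audio", "gen-123"),
    Component("text", None),  # e.g. captions re-typed by hand, so the mark was lost
]
print(inconsistent_components(output, "gen-123"))    # ['text']
print(ai_involvement_detectable(output, "gen-123"))  # True
```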

The Code notes that marking techniques may be implemented at different stages of the value chain, e.g. at model level or through third-party solutions specialising in provenance or transparency technologies. In particular, the Code expects providers of generative AI models to implement machine-readable marking techniques for the content generated or manipulated by their models prior to the model's placement on the market.

PRESERVATION AND NON-REMOVAL OF MARKINGS

Providers are expected to implement measures to ensure that detectable marks and other provenance signals are retained, including where AI-generated or manipulated content is reused or further used as input and transformed by their own AI system. Providers are also expected to discourage deliberate removal of, or tampering with, such markings by deployers or third parties, for example through contractual restrictions in terms of use, acceptable use policies or other documentation accompanying the system or model.
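The retention point can be sketched as a provenance chain: when a provider's own system transforms content that already carries provenance, the new record links back to the input's record rather than replacing it. This is a minimal Python illustration under that assumption only; `ProvenanceRecord`, `transform_provenance` and `lineage` are hypothetical names, not an API described in the Code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ProvenanceRecord:
    system_id: str
    operation: str                               # e.g. "generate", "upscale"
    parent: Optional["ProvenanceRecord"] = None  # provenance of the input, if any


def transform_provenance(
    input_record: Optional[ProvenanceRecord], system_id: str, operation: str
) -> ProvenanceRecord:
    # Link the new record to the input's provenance instead of discarding it.
    return ProvenanceRecord(system_id, operation, parent=input_record)


def lineage(record: Optional[ProvenanceRecord]) -> List[str]:
    # Walk back through the chain of transformations, newest first.
    steps: List[str] = []
    while record is not None:
        steps.append(f"{record.system_id}:{record.operation}")
        record = record.parent
    return steps


original = ProvenanceRecord("example-model-v1", "generate")
upscaled = transform_provenance(original, "example-model-v1", "upscale")
print(lineage(upscaled))  # ['example-model-v1:upscale', 'example-model-v1:generate']
```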

SUPPORTING VERIFICATION AND COMPLIANCE

The Code also envisages that providers support transparency through appropriate verification tools, internal compliance frameworks and training, implemented in a manner proportionate to their size and resources.

DEPLOYER OBLIGATIONS IN PRACTICE

For deployers, the draft Code focuses on how AI-generated or manipulated content is disclosed to end users. Unlike the provider obligations, which focus on technical marking, the deployer obligations are concerned with visible, contextual disclosure.

CONSISTENT DISCLOSURE USING COMMON TAXONOMY AND A COMMON ICON

The Code encourages deployers to use a harmonised approach to disclosure, based on a common taxonomy and a visible icon, to signal AI involvement in deep fakes. At a high level, deployers should identify and label content as either (as applicable):

- fully AI-generated (i.e. with no human-authored element); or

- AI-assisted or AI-manipulated (the Code provides a non-exhaustive list of examples, including AI rewriting or summarising human-created text, adding AI-generated or manipulated content to human-authored content, face or voice replacement, object removal, and beauty filters that change perceived age).
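The two-level taxonomy can be expressed as a simple classification step in a deployer's publishing workflow. The Python sketch below assumes each item is already known to involve an AI system and is tagged with the operations applied to it; the operation tags in `AI_ASSISTED_OPERATIONS` and the `classify` function are hypothetical, loosely modelled on the Code's examples. Because the Code's list is non-exhaustive, the sketch returns `None` for unmatched cases so that they go to manual review rather than being silently left unlabelled.

```python
from enum import Enum
from typing import Optional, Set


class AIContentLabel(Enum):
    FULLY_AI_GENERATED = "fully AI-generated"
    AI_ASSISTED_OR_MANIPULATED = "AI-assisted or AI-manipulated"


# Hypothetical operation tags, loosely based on the Code's non-exhaustive examples.
AI_ASSISTED_OPERATIONS = {
    "ai_rewrite", "ai_summary", "ai_content_added", "face_replacement",
    "voice_replacement", "object_removal", "age_altering_filter",
}


def classify(has_human_authored_element: bool, operations: Set[str]) -> Optional[AIContentLabel]:
    # Assumes the item was produced or altered with an AI system.
    if not has_human_authored_element:
        return AIContentLabel.FULLY_AI_GENERATED
    if operations & AI_ASSISTED_OPERATIONS:
        return AIContentLabel.AI_ASSISTED_OR_MANIPULATED
    # The Code's list is non-exhaustive, so unmatched cases need manual review.
    return None


print(classify(False, set()).value)              # fully AI-generated
print(classify(True, {"object_removal"}).value)  # AI-assisted or AI-manipulated
print(classify(True, {"spell_check"}))           # None
```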

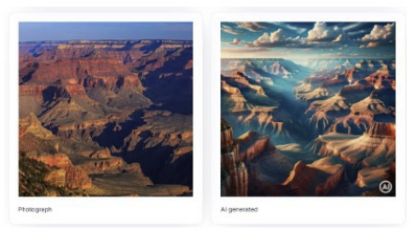

With respect to icons, the Code proposes to develop EU-wide icons for content based on this taxonomy, but until this is finalised, deployers may use an interim "AI" icon (or a languagespecific equivalent). Icons should be placed in a clear and consistent location and visible at the time of first exposure.

Figure: A round icon containing "AI" in the bottom right corner of the AI-generated photo. Source: Centre for AI Safety (CAIS)

EDITORIAL EXCEPTION

The Code also addresses the editorial exception under Article 50(4): AI-generated text published with the purpose of informing the public on matters of public interest does not need to be disclosed as such where it has undergone a process of human review or editorial control and a natural or legal person holds editorial responsibility for its publication.

To rely on this exception, deployers are expected to be able to demonstrate that the text has not simply been generated and published automatically. The Code suggests that deployers should have internal processes in place to support this assessment, including the ability to identify who reviewed and approved the content, and to show that the review went beyond purely formal or automated checks.
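The internal processes described above could be supported by a structured review record. The Python sketch below is a hypothetical example of such a record and of an internal evidence check; the field names and the `supports_editorial_exception` function are assumptions, not requirements from the Code, and passing the check does not by itself establish that the exception applies. It simply confirms that a named reviewer, a responsible party and at least one review step beyond formal or automated checks have been documented.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List


@dataclass
class EditorialReviewRecord:
    content_id: str
    reviewer: str            # person who reviewed the text
    responsible_party: str   # natural or legal person holding editorial responsibility
    approved_at: datetime
    automated_checks: List[str] = field(default_factory=list)    # e.g. spell-check
    substantive_checks: List[str] = field(default_factory=list)  # e.g. fact-check, rewrite


def supports_editorial_exception(record: EditorialReviewRecord) -> bool:
    # Internal evidence check only: requires a named reviewer, a responsible party
    # and at least one review step beyond formal or automated checks.
    return bool(
        record.reviewer.strip()
        and record.responsible_party.strip()
        and record.substantive_checks
    )


record = EditorialReviewRecord(
    content_id="article-0001",
    reviewer="A. Editor",
    responsible_party="Example Media Ltd",
    approved_at=datetime(2026, 8, 3, 9, 30),
    automated_checks=["spell-check"],
    substantive_checks=["fact-check against sources"],
)
print(supports_editorial_exception(record))  # True
```

Keeping automated and substantive checks in separate fields reflects the Code's point that review must go beyond purely formal or automated checks: a record with only automated checks fails the check.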

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.