
Big Data: Legal Challenges (Full Report)

The cost of data storage has plummeted and our computing power to use and analyse data has increased exponentially.

"Big Data" is the term for a collection of data sets so large and complex that they cannot readily be processed with traditional data processing applications in a reasonable amount of time. Attempts to define "Big Data" meaningfully focus on the size of the data sets (volume), the real-time speed at which much of the information is captured by a system (velocity) and the increasing variety of disparate data sets that can be accessed (variety).

Data sets grow in size, in part, because they are increasingly being gathered by ubiquitous information-sensing mobile devices, aerial sensory technologies (remote sensing), software logs, cameras, microphones, radio-frequency identification readers and wireless sensor networks.

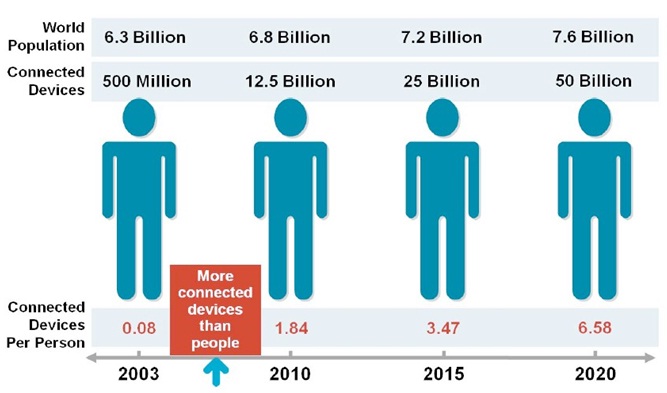

In addition, movement sensors, building access systems, smart power metres, surveillance cameras, smart cars, smart televisions and all manner of other devices that are now Internet-enabled facilitate the collection and use of information logs. This has led to the emergence of the so-called "internet of things" (IOT), a term referring to the increasing number of connected objects relative to the population. The IOT means more data created at an accelerated rate, which compounds and feeds into the volume, velocity and variety of Big Data sets. The emergence of the IOT is illustrated in the following diagram:

The shift towards consuming computing as a hosted, utility-style service (cloud) has revolutionised the way in which organisations can analyse data. An organisation needing massive amounts of processing power for complex data queries can scale up its access to that processing power for the duration of a project and scale it back afterwards. This is all possible at steadily declining prices, using new models of access to computing power that were not available five years ago.

To illustrate, when scientists first decoded the human genome in 2003, it took ten years of work to sequence its three billion base pairs. Now that much DNA can be sequenced in a day[4].

There are numerous examples of organisations collecting and harvesting large amounts of data in an attempt to differentiate themselves in the marketplace. Among the more notable:

- Walmart, the world's largest retailer, generates vast quantities of data every day from its online presence and over 10,000 retail stores. It handles over 1.5 million customer transactions every hour and has a data warehouse with over three petabytes of information. Walmart records every purchase by every customer for future analysis and claims to have driven over US$1 billion in incremental revenue (a 10-15 percent boost in sales) from decisions resulting from this analysis. Walmart knows, for example, that any hurricane warning in a given area will inevitably be followed by an increase in sales of Kellogg's Pop-Tarts.

- The New York Police Department, among others, uses computerised mapping and analysis of variables such as historical arrest patterns, paydays, sporting events, rainfall and holidays to try to predict likely crime "hot spots" and deploy police officers there in advance[5].

The uses of big data are many and varied, the only constant being that they will underpin much commercial advantage in the post-industrial economy. Studies by the World Economic Forum (WEF) have described personal data as a new asset class, the new "oil" for business. The WEF has further predicted the use of big data tools for better targeting of services, the prediction and prevention of crises, understanding population health and improving health care, reducing food spoilage, identifying areas of food-related distress, and tracking the movements and conditions of refugees[6]. In its 2011 paper entitled "Personal Data: The Emergence of a New Asset Class", the WEF noted[7]:

A McKinsey study has further concluded that Big Data could be the defining basis for success in the post-industrial economy. We are already seeing large gaps emerge between the growth of traditional businesses that fail to heed the impact of Big Data on their decisions and that of companies that embrace the power of Big Data to direct decisions[8]:

Structured and unstructured data

A key feature of the 'big data' era is growth in the volume and variety of unstructured data.

Most traditional databases are 'structured' relational databases, in which each field is known and named and the relationships between fields are defined. This applies to everything from your bank account and your TV guide to corporate systems such as customer relationship management, HR/payroll and document management databases.
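To make the idea of a 'structured' database concrete, here is a minimal sketch using Python's built-in sqlite3 module. The table names, columns and rows are hypothetical and invented purely for illustration. The point is only that every field is named and typed in advance and the relationship between tables is declared up front (here by a foreign key), so the database itself can reject data that does not fit and can answer a question with a single declarative query.

```python
import sqlite3

# In-memory database; all table/column names and rows are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # ask SQLite to enforce declared relationships

# Every field is known, named and typed before any data arrives.
conn.execute("""
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    )
""")
# The relationship between the tables is defined by a foreign key.
conn.execute("""
    CREATE TABLE purchases (
        purchase_id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        item        TEXT NOT NULL,
        amount_usd  REAL NOT NULL
    )
""")

conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Alice"), (2, "Bob")])
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?, ?, ?)",
    [(10, 1, "Pop-Tarts", 3.50), (11, 1, "Batteries", 8.00), (12, 2, "Water", 5.25)],
)

# A purchase for a customer who does not exist violates the declared relationship.
rejected = False
try:
    conn.execute("INSERT INTO purchases VALUES (13, 99, 'Unknown', 1.00)")
except sqlite3.IntegrityError:
    rejected = True

# Because the schema is known, one query answers "how much has each customer spent?".
rows = conn.execute("""
    SELECT c.name, SUM(p.amount_usd)
    FROM customers c JOIN purchases p ON p.customer_id = c.customer_id
    GROUP BY c.name
    ORDER BY c.name
""").fetchall()

for name, total in rows:
    print(f"{name}: {total:.2f}")
print("invalid purchase rejected:", rejected)
```

The same guarantees are what unstructured data lacks: nothing in a raw log file declares what its fields are or how they relate to anything else.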

While 'Big Data' can include structured data in a relational database, in the majority of cases large tracts of data will be stored with no apparent meaning beyond their face value. Skills are required to create meaning from disparate data sets by pursuing inquiries or associations between those data sets. The logs created by a smart power meter or a building's air conditioning system, other machine logs and most of the so-called "digital exhaust" created by use of the internet will not be stored in a traditional relational database.

Furthermore, much of the relevant data is transient and traditionally not stored after the purpose of its creation has passed (for example, the data from an internet phone call or from watching a YouTube video), yet new opportunities and optimised products and services can increasingly be created by applying analytical models to these data sources. Such data is generally described as "unstructured" data, as opposed to traditional "structured" data.
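By contrast, unstructured or semi-structured data carries no schema of its own. The minimal sketch below assumes a few invented smart-meter log lines in deliberately inconsistent formats (they are not taken from any real device or vendor) and shows that the analyst has to impose meaning, here a regular expression deciding what counts as a "reading", before the data can be analysed at all.

```python
import re
from statistics import mean

# Hypothetical raw log lines from a smart power meter. The formats are invented
# for illustration and are inconsistent, as machine logs often are.
raw_log = """\
2012-07-01T10:00 meter=A17 reading 1.42kWh
[A17] 2012-07-01 10:15 -> 1.38 kWh
note: technician visit, door opened
2012-07-01T10:30 meter=A17 reading 1.51kWh
"""

# No field names are attached to the text; the analyst supplies a rule
# for what a "reading" looks like (a decimal number followed by kWh).
READING = re.compile(r"(\d+\.\d+)\s*kWh")

readings = [float(m.group(1)) for m in READING.finditer(raw_log)]
print(readings)
print(f"mean: {mean(readings):.2f} kWh")
```

A different rule would extract different "facts" from the same text, which is why the skill of interpreting such data matters as much as the storage itself.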

Attempts to measure the amount of unstructured or semi-structured data are imprecise, but such data is generally estimated to account for 80 to 90 percent of the world's data.

The IDC "Digital Universe" study sought to analyse total enterprise data growth in the period 2005-2015[9]. It projected that, by 2015, so-called "unstructured" data would be by far the dominant form of data.


Footnotes

[4] Big Data, supra note 1, page 8

[5] The Age of Big Data, Steve Lohr, February 11, 2012: http://www.nytimes.com/2012/02/12/sunday-review/big-datas-impact-in-the-world.html?pagewanted=all&_r=0

[6] Big Data, Big Impact: New Possibilities for International Development, World Economic Forum 2012: http://www3.weforum.org/docs/WEF_TC_MFS_BigDataBigImpact_Briefing_2012.pdf

[7] Personal Data: The Emergence of a New Asset Class, World Economic Forum, January 2011, pages 5 and 6

[8] Big Data: The next frontier for innovation, competition, and productivity, May 2011, James Manyika et al.: http://www.mckinsey.com/insights/business_technology/big_data_the_next_frontier_for_innovation

[9] The Digital Universe in 2020: Big Data, Bigger Digital Shadows, and Biggest Growth in the Far East, John Gantz and David Reinsel, December 2012: http://www.emc.com/collateral/analyst-reports/idc-the-digital-universe-in-2020.pdf

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.