Latency is the delay experienced in an IT system between an input being sent and an output being received, and it is becoming a focal issue for IT suppliers and consumers. Anyone who has experienced delays on satellite phone or video calls will be familiar with the frustrations of latency, which can turn fluent conversation into a continual stream of interruptions and silent pauses.

This article will look at why latency is important, provide a high-level technical outline and, lastly, focus on the commercial issues to consider when contracting for hardware, software and connectivity where low latency is important. It will not deal with the regulatory aspects of latency.

1. Why is latency important?

The more time-sensitive data is, the more important it is to reduce latency. To date, the need for low latency has arisen most prominently in the financial services sector.

Technology has transformed financial markets, as IT systems can process information and execute trading strategies with a precision and speed that were inconceivable a few years ago. Financial exchanges were traditionally situated in the centre of glamorous cities, peopled by hordes of animated traders, hustling for business and completing verbal contracts via "open outcry". In many liquid markets today, electronic contracts are matched via software, housed within gently humming racks of hardware, hosted in vast data centres occupied only by a handful of engineers and connected around the globe via a web of fibres. In many cases, even traders in front of computer screens have been supplanted by software that executes orders either partially or wholly on the basis of computer-generated decisions driven by an algorithm.

Technology has enabled new trading methods, which assess all available information almost instantaneously, implement pre-programmed trading strategies and execute the best available transaction, all within milliseconds. This is best exemplified by "high-frequency traders", who profit by trading frequently and capitalising on extremely short-term opportunities, often available for only a matter of milliseconds. In this way they can continually exploit shifting share prices and profit immediately as prices move by fractions over short periods of time. Not all trading strategies require low latency, but many do, and this area of the market is becoming increasingly important in terms of trading volume and value. Market participants invest considerable amounts in technology that can reduce latency within and between front-office trading systems, because any delay between identifying a trading opportunity and executing it can cost substantial amounts of money.

Whilst front-office developments lead the fight against latency, related administration and management systems need to "speed up" to prevent a lag between trading and its processing and analysis. Regulators are also concerned about the potential risks of unequal trading speeds between market participants. Whilst it remains to be seen to what extent this area can be successfully regulated, at a minimum regulators will require administration, analysis and risk management systems which are "fast" and capable of effectively managing risk in the front-office. This, too, is fuelling spending and development driven by the need for reduced latency.

Suppliers are focussing on developing low latency targeted technology, networks and related consultancy services, and talk with a glint in the eye of the "competitive war" between their customers which is fuelling increased spend in the middle of otherwise unpredictable markets. In the words of Joseph M. Mecane of NYSE Euronext: "It's become a technological arms race, and what separates winners and losers is how fast they can move".

The financial services sector isn't alone in grappling with latency. Due to the power of the internet, many services are now provided to consumers online. For online consumers, the main differentiator between products is now often only the quality of user experience, including speed of response, and latency issues are becoming increasingly important. In online gaming, for example, gamers are acutely aware of the need to have fast, constant and predictable access to games, and differences in latency between gamers can confer unfair advantages on those with faster access.

2. What is Latency?

'Latency' is the time taken for data of a set size (a "packet") to travel from the sender to the receiver. In practice it is commonly measured as the time taken for a packet to be sent to its destination and for a response to be received (a "round-trip"). Latency is present in any transmission of data, whether the distance involved is a few centimetres (e.g. between components of a computer), a few metres (e.g. between computers in the same room) or thousands of miles (e.g. over the internet). The two key technical challenges are: (i) to reduce latency; and (ii) to ensure transmission times are consistent, so that data delivery is both fast and predictable. The causes of latency can be grouped into three broad categories:

i. Propagation latency

This is the time it takes for a signal to travel from one end of a communication link to the other. Two factors determine propagation latency: distance and speed. Copper wire and fibre optic cable have differing properties, but both carry a signal at roughly 67% of the speed of light (about 200,000 km per second), which gives the following propagation latencies:

| Length of cable | One-way delay | Round-trip delay |
|---|---|---|
| 1 m | 0.000005 ms | 0.00001 ms |
| 1 km | 0.005 ms | 0.01 ms |
| 10 km | 0.05 ms | 0.1 ms |
| 100 km | 0.5 ms | 1 ms |
| 1,000 km | 5 ms | 10 ms |
| 10,000 km | 50 ms | 100 ms |

1 second = 1,000 ms

A straight-line communication link between New York and London of around 5,600km would therefore have a propagation latency of approximately 28ms one-way and 56ms round-trip.
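The figures in the table can be reproduced with a short calculation. The Python sketch below assumes, as the table does, a signal speed of about 67% of the speed of light in a vacuum; the function names are illustrative only.

```python
# Speed of light in a vacuum, in km per second.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458

# Assumption taken from the text: copper and fibre carry a signal at ~67% of c.
CABLE_VELOCITY_FACTOR = 0.67


def propagation_delay_ms(distance_km: float,
                         velocity_factor: float = CABLE_VELOCITY_FACTOR) -> float:
    """One-way propagation delay, in milliseconds, over a link of the given length."""
    signal_speed_km_per_ms = SPEED_OF_LIGHT_KM_PER_S * velocity_factor / 1000
    return distance_km / signal_speed_km_per_ms


def round_trip_delay_ms(distance_km: float,
                        velocity_factor: float = CABLE_VELOCITY_FACTOR) -> float:
    """Round-trip delay: the signal crosses the same link twice."""
    return 2 * propagation_delay_ms(distance_km, velocity_factor)


if __name__ == "__main__":
    for km in (0.001, 1, 10, 100, 1_000, 10_000):
        print(f"{km:>10,.3f} km: one-way {propagation_delay_ms(km):.6f} ms, "
              f"round-trip {round_trip_delay_ms(km):.6f} ms")
    # New York to London, ~5,600 km in a straight line.
    print(f"NY-London: {propagation_delay_ms(5_600):.1f} ms one-way, "
          f"{round_trip_delay_ms(5_600):.1f} ms round-trip")
```

For New York to London this gives just under 28 ms one-way and 56 ms round-trip; the table rounds the signal speed to 200 km per millisecond, which is why its figures are slightly higher.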

Wireless methods of transmission (commonly radio waves) travel through air at close to the full speed of light, and therefore faster than signals in cable, but signals can be intermittent and subject to interference, and the distances involved (for example via satellite) are usually far greater than with cable.
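The trade-off can be illustrated numerically. The sketch below compares an idealised straight 5,600 km path in cable (at about 67% of the speed of light) with the same path by radio, treated here as travelling at essentially the full speed of light and ignoring the Earth's curvature and any relay stations, and with a single hop via a geostationary satellite, which orbits about 35,786 km above the equator. The path lengths and idealisations are assumptions for illustration only.

```python
SPEED_OF_LIGHT_KM_PER_MS = 299_792.458 / 1000  # ~299.8 km per millisecond

GEOSTATIONARY_ALTITUDE_KM = 35_786  # height of a geostationary orbit above the equator


def one_way_ms(path_km: float, velocity_factor: float) -> float:
    """Propagation delay over a path, given the signal speed as a fraction of c."""
    return path_km / (SPEED_OF_LIGHT_KM_PER_MS * velocity_factor)


# Idealised straight-line 5,600 km path (illustrative assumption).
cable_ms = one_way_ms(5_600, 0.67)  # fibre or copper, ~67% of c
radio_ms = one_way_ms(5_600, 1.0)   # radio through air, treated as ~100% of c

# A satellite hop goes up and back down: at least twice the orbital altitude,
# even for ground stations directly beneath the satellite.
satellite_ms = one_way_ms(2 * GEOSTATIONARY_ALTITUDE_KM, 1.0)

print(f"cable: {cable_ms:.1f} ms, radio: {radio_ms:.1f} ms, "
      f"geostationary satellite hop: {satellite_ms:.1f} ms")
```

On a like-for-like path the faster medium wins, but the far longer path of a satellite hop outweighs that advantage many times over.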

Propagation latency can be minimised by choosing the fastest method of transmission and the shortest distance between communicating devices. Amongst other developments, this has led to the rapid growth of co-located data centres, which allow market participants to reduce the distance to the trading venue and so cut round-trip times.

ii. Transmission latency

Transmission latency is the delay incurred in transmitting a quantity of data across a communication link. Every communication link has a "speed" (its bandwidth), which measures the amount of data per second the link is capable of transferring (e.g. 10 Mbit/sec). The slower the link, the longer it takes to send data across it and the higher the transmission latency. Conversely, the faster (higher-capacity) the link, the more quickly the data is sent and the lower the transmission latency.
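Transmission latency is simply the size of the data divided by the speed of the link. The sketch below assumes a 1,500-byte packet (a typical maximum payload for a standard Ethernet frame) purely for illustration.

```python
def transmission_delay_ms(packet_bytes: int, link_bits_per_s: float) -> float:
    """Time, in milliseconds, to put every bit of a packet onto a link."""
    bits = packet_bytes * 8
    return bits / link_bits_per_s * 1000


PACKET_BYTES = 1_500  # illustrative assumption: a typical Ethernet payload size

for label, speed in (("10 Mbit/s", 10e6), ("1 Gbit/s", 1e9), ("10 Gbit/s", 10e9)):
    print(f"{label:>9}: {transmission_delay_ms(PACKET_BYTES, speed):.4f} ms")
```

Each tenfold increase in link speed cuts transmission latency tenfold, from 1.2 ms at 10 Mbit/s to about 0.0012 ms at 10 Gbit/s. Unlike propagation latency, this component can be reduced by buying more capacity rather than by moving equipment closer together.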

iii. Processing latency

Processing latency is the delay caused by IT hardware and software interacting with a data packet. All hardware and software which interacts with a data packet being sent or received causes processing latency.

| Type of latency | Sources of Processing Latency |
|---|---|
| Application Latency | Operating System and Software |
| Hardware Latency | CPU, Memory and Data Storage |
| Network Latency | Protocol overheads, firewall, encryption and security checks |
| Interface Latency | Buffering, routing, framing, packetisation, serialisation and fragmentation |

Suppliers continue to reduce processing latency through improvements and advances in hardware and also through the improvement and optimisation of software and networking protocols.
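Processing latency is usually established by measurement rather than calculation. The following Python sketch times a single software step many times and reports the median and the 99th percentile, since occasional slow runs can matter as much as the typical case. `parse_order` and its message format are hypothetical stand-ins for one component in the round-trip.

```python
import statistics
import time
from typing import Callable


def measure_processing_latency_us(step: Callable[[], object], runs: int = 10_000) -> dict:
    """Time `step` repeatedly (runs must be at least 2) and summarise in microseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter_ns()
        step()
        samples.append((time.perf_counter_ns() - start) / 1_000)
    # quantiles(n=100) returns 99 cut points; index 98 is the 99th percentile.
    p99 = statistics.quantiles(samples, n=100)[98]
    return {"median_us": statistics.median(samples), "p99_us": p99, "max_us": max(samples)}


def parse_order(raw: bytes = b"BUY,XYZ,100,10.25") -> tuple:
    """Hypothetical processing step: decode and split a comma-separated order."""
    side, symbol, quantity, price = raw.decode("ascii").split(",")
    return side, symbol, int(quantity), float(price)


if __name__ == "__main__":
    print(measure_processing_latency_us(parse_order))
```

Results will vary with hardware, operating system and load, which is one reason the measurement methodology matters when these figures are written into a contract.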

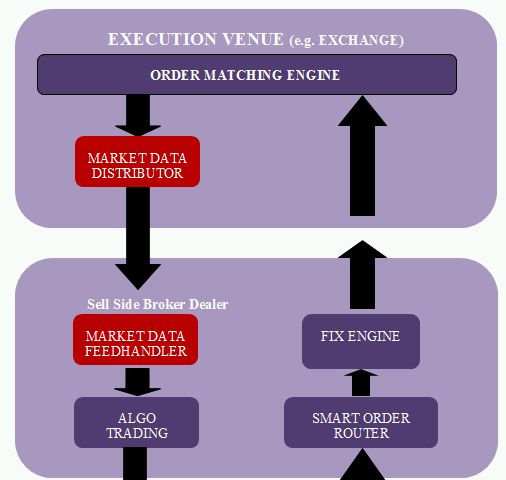

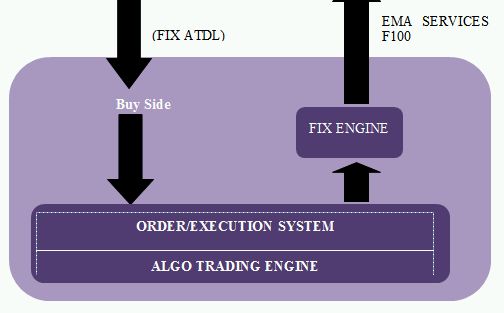

iv. System Example

The front-office round-trip, from receipt of data through to identification and execution of a transaction, will involve multiple pieces of hardware, software, connections and internal and external platforms. Below is a simplified diagram of the route an order might take. Latency may arise at each stage of the round-trip in the form of: (i) propagation latency across each cable involved; (ii) transmission latency in each communication link; and (iii) processing latency within all hardware and software involved.
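The three categories can be combined into a simple latency budget for the whole round-trip. In the Python sketch below every stage name and figure is hypothetical, chosen only to show how propagation, transmission and processing latency add up across hops and how the slowest stage can be identified; it assumes co-located equipment and the 200 km per millisecond signal speed used in the propagation table.

```python
from dataclasses import dataclass

SIGNAL_SPEED_KM_PER_MS = 200.0  # ~67% of c, as assumed in the propagation table


@dataclass
class Hop:
    """One stage of the round-trip (all figures used below are hypothetical)."""
    name: str
    cable_km: float         # drives propagation latency
    link_bits_per_s: float  # drives transmission latency
    processing_ms: float    # hardware and software at the receiving end

    def latency_ms(self, packet_bytes: int) -> float:
        propagation = self.cable_km / SIGNAL_SPEED_KM_PER_MS
        transmission = packet_bytes * 8 / self.link_bits_per_s * 1000
        return propagation + transmission + self.processing_ms


def round_trip_ms(hops: list, packet_bytes: int = 200) -> float:
    """Total latency is the sum of every hop's propagation, transmission and processing."""
    return sum(hop.latency_ms(packet_bytes) for hop in hops)


def slowest_hop(hops: list, packet_bytes: int = 200) -> Hop:
    return max(hops, key=lambda hop: hop.latency_ms(packet_bytes))


HOPS = [
    Hop("market data feed -> trading server", 0.5, 10e9, 0.010),
    Hop("trading server -> risk checks", 0.05, 10e9, 0.020),
    Hop("risk checks -> order gateway", 0.05, 10e9, 0.005),
    Hop("order gateway -> exchange", 0.5, 10e9, 0.015),
]

if __name__ == "__main__":
    for hop in HOPS:
        print(f"{hop.name:<36} {hop.latency_ms(200):.5f} ms")
    print(f"total: {round_trip_ms(HOPS):.5f} ms; slowest: {slowest_hop(HOPS).name}")
```

With these assumed figures, processing rather than distance dominates, which is the effect co-location is intended to produce.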

3. What are the legal implications?

Traditionally, the customer's focus was on securing certainty of network and service from a supplier over a lengthy commercial term at the lowest possible rates. A customer might simplify service management by using as few suppliers as possible, obtaining volume discounts in return for security of tenure for the supplier. Such contracts might include technology refresh clauses; however, because the focus was on certainty and price, the refresh cycle tended only to oblige the supplier to match the "leading edge", which could follow the "bleeding edge" by as much as 2 to 5 years.

Where latency is an issue, the commercial risks and approaches are changing. First, a fractional delay can be as critical as a system failure, and contracts must reflect this. Second, given the value of low-latency systems, it is now commercially necessary to update systems at a rate that closely mirrors the "bleeding edge". Where latency issues are paramount, revised approaches are necessary; we set out examples of these below.

Supplier Term

Suppliers and components may need to be replaced at much more frequent intervals than traditional contracts anticipate. Few suppliers can claim to be at the bleeding edge in all parts of a low-latency system, and a customer that seeks to be "fast" cannot usually stick with a small number of suppliers. Customers must continually shuffle the pack of suppliers, introducing new suppliers as their technology rises to the front whilst dropping incumbents. Overarching master services agreements ("MSAs") are still useful; however, the customer must retain the flexibility to drop or acquire services, hardware, software and connectivity at short notice. Traditional supplier contracts secure the longevity of a relationship in return for discounts, for example through minimum "initial terms". Customers will need to resist such terms by including rights to terminate for convenience at short notice, with pre-agreed transition services to ensure continuity of service and a smooth changeover. Suppliers may argue for higher pricing in return for such flexibility. However, this is the way the market is moving, competition amongst suppliers is high and flexibility can now be more valuable than the lowest possible charges.

Customer Policy

Customers often adopt a supplier management policy, prescribing standardised commercial and legal approaches to all key contracts in the round-trip to ensure suppliers can be managed in a co-ordinated manner. This involves working closely with IT to ensure that all contracts relating to components or services which have an impact on latency, no matter how "small", are identified at the outset so that standardised contractual terms can be imposed as appropriate. Standardised terms will generally include the issues set out below.

Supplier Relationship

Customers need to engage with more suppliers more often, continually trialling and testing new components and, if a decision to implement is made, installing them rapidly. Customers must therefore engage with suppliers contractually as early as possible. Simple trialling agreements may be replaced with comprehensive MSAs incorporating detailed trialling and testing clauses, so that framework terms can be negotiated at the outset, when the customer has more negotiating power, rather than "on the hoof" when time is critical after a decision to purchase a component has been made.

As technology moves further into the front-office some customer relationships with suppliers are becoming deeper and stronger. Customers are working more closely alongside key suppliers in development, for example, assisting in trialling components in return for a period of exclusivity, which can afford a customer a chance to get ahead. Contractual terms dealing with such "partnership" arrangements and trials may also need to be included in MSAs with key suppliers.

Supplier Incentivisation

No matter how small a component, if it forms part of the round-trip, latency can arise within it and that latency can become critical. The commercial reality is that the loss caused by a rise in latency in a particular component can far exceed the incentive or income at stake for the supplier. Therefore, supplier SLAs, service credits and damages are unlikely to give the supplier an incentive, or the customer recompense, equal to the losses. We are seeing a number of different approaches to bridging this gap, including:

- contracting with specialist latency-focussed suppliers that: (i) offer latency-specific services with a higher level of service; and (ii) accept a greater share of the "latency risk", although of course this may come at a price;

- paying for the supplier to provide higher levels of service and accept more risk. Meaningful amounts of money must be involved to make the supplier "jump" and constant management and updating will be necessary to ensure the incentivisation remains effective; and

- where a commercial model is difficult to re-engineer, customers may introduce competitive tension by, for example, installing alternative suppliers, components or systems alongside the incumbent and replacing traditional regular fees with a pay-per-use model. If alternative connections are in place, a customer can run a continual competition between suppliers whilst also keeping costs to a minimum. An alternative might be to have other suppliers standing by on pre-agreed MSA terms. This is, of course, also a useful form of risk mitigation.
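Where alternative connections run side by side, the continual competition between suppliers can be partly automated. The Python sketch below is a hypothetical illustration, not a description of any supplier's product: it sends each message over whichever link has shown the lowest recent median latency and counts usage per supplier, which could then feed a pay-per-use charge. The supplier names, window size and simulated latencies are assumptions.

```python
import random
from collections import defaultdict, deque
from statistics import median


class SupplierRouter:
    """Hypothetical router that lets parallel supplier links compete on latency."""

    def __init__(self, suppliers, window: int = 100) -> None:
        # Keep only the most recent `window` measurements for each supplier.
        self.samples = {s: deque(maxlen=window) for s in suppliers}
        # Messages sent per supplier, e.g. as the basis for pay-per-use charges.
        self.usage = defaultdict(int)

    def record(self, supplier: str, latency_ms: float) -> None:
        """Store a measured latency for a message sent via `supplier`."""
        self.samples[supplier].append(latency_ms)

    def choose(self) -> str:
        """Pick the link for the next message and count it towards that supplier's usage."""
        untested = [s for s, recent in self.samples.items() if not recent]
        if untested:
            # Try any link without measurements first, so every supplier is sampled.
            choice = untested[0]
        else:
            choice = min(self.samples, key=lambda s: median(self.samples[s]))
        self.usage[choice] += 1
        return choice


if __name__ == "__main__":
    typical_ms = {"supplier_a": 0.40, "supplier_b": 0.30}  # hypothetical link latencies
    router = SupplierRouter(typical_ms)
    for _ in range(1_000):
        link = router.choose()
        router.record(link, random.gauss(typical_ms[link], 0.05))
    print(dict(router.usage))  # the faster link should carry most of the traffic
```

A rolling median is used here so that a single slow message does not immediately switch suppliers; a real arrangement would also need the switching rule itself to be agreed contractually.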

Specificity

The devil is in the detail. In many cases lawyers focus on the "front end" of a contract and IT technicians on the schedules, which leaves IT-specific risks without sufficient attention. Where latency is an issue, lawyers and commercial teams should use the highest possible degree of specificity, especially in technical descriptions, to ensure that each component is clearly warranted to perform to the required standard. This mantra applies to all areas of the contract: for instance, integration and co-ordination between suppliers, and governance by the customer, need to be specifically captured in each contract. Whilst overarching obligations are useful, time spent defining specific services in detail, so that responsibilities are clearly differentiated, together with consistent reporting, meeting and governance procedures across each and every applicable contract, is time well spent.

Continual Improvement

In addition to traditional maintenance services, considerable day-to-day resources will need to be focussed on monitoring, testing and implementing continual changes to improve system performance and exploit new opportunities. Continual improvement services are often best managed by small groups of developers working within a framework similar to that used by teams on an agile development project. Meetings must be regular and reporting lines clear. Individuals are allocated specific issues to consider within a timeframe and then given freedom to explore potential solutions before reporting back. Good ideas are identified quickly and resources are allocated to developing appropriate solutions within a very short timeframe, often the same day. The governance and reporting framework is the means by which the process is controlled.

Software Licensing

Software contracts generally require licensors to warrant that the software will offer the functionality and performance specified in standardised documentation and to ensure, first through a "free" warranty period and subsequently through maintenance services, that the software continues to provide that functionality. The supplier will be obliged to repair bugs and provide fixes for errors within given timescales. Where software is involved in providing "latency services", however, additional focus is required on the exact processing speed of the software, and standard supplier documentation is unlikely to give a customer sufficient contractual comfort on this point. As a minimum, a contractual term should be added obliging the supplier to ensure that the software operates within the required processing times throughout the contract term, and an appropriate service level should be included in the maintenance provisions to incentivise the supplier to maintain or reduce processing times during the term of the licence. This can be complex: for example, the measurement methodology will need to take input data volumes into account, as volume can affect processing speed. This obligation will form a critical part of testing and acceptance procedures, with the licence and associated payments only commencing if and when the required processing speeds are achieved.
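Because volume affects processing speed, an acceptance test may need to measure performance at several agreed input volumes. The Python sketch below assumes hypothetical volume tiers and per-message time limits (in microseconds) of the kind that might be written into a licence schedule; `process_batch` stands in for the licensed software and is not a real product API.

```python
import time
from typing import Callable, Dict, List, Tuple


def acceptance_test(process_batch: Callable[[List[str]], object],
                    max_us_per_message: Dict[int, float],
                    repeats: int = 20) -> Dict[int, Tuple[float, bool]]:
    """For each agreed volume, record the worst per-message time and whether it passes."""
    results = {}
    for volume, limit_us in max_us_per_message.items():
        batch = [f"msg-{i}" for i in range(volume)]
        worst_us = 0.0
        for _ in range(repeats):
            start = time.perf_counter_ns()
            process_batch(batch)
            elapsed_us = (time.perf_counter_ns() - start) / 1_000
            worst_us = max(worst_us, elapsed_us / volume)
        results[volume] = (worst_us, worst_us <= limit_us)
    return results


def accepted(results: Dict[int, Tuple[float, bool]]) -> bool:
    """Licence fees would start only if every volume tier passes."""
    return all(passed for _, passed in results.values())


if __name__ == "__main__":
    def stub(batch):  # hypothetical stand-in for the licensed software
        return [message.upper() for message in batch]

    # Hypothetical schedule: a looser per-message limit at the highest volume.
    limits = {1_000: 5.0, 10_000: 5.0, 100_000: 8.0}
    outcome = acceptance_test(stub, limits)
    print(outcome, "accepted" if accepted(outcome) else "rejected")
```

Taking the worst of several repeats, rather than the average, is a deliberately conservative choice; the parties might equally agree on a percentile.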

Latency Service Level Agreements ("SLAs")

The primary legal task is to work with IT to pin down the key latency risks. These risks should be covered by clear, measurable obligations with standards set at the right level, appropriate service credits, and a performance floor below which the supplier is deemed to be in material breach. When considering latency, the key metrics are likely to focus on both consistency and speed. It can be difficult to agree a methodology for measuring latency, and this will require detailed attention and updating throughout the contract. What is perhaps different from traditional SLAs is the size and complexity of the affected systems, the degree of detail involved, the speed of maintenance services required and the continual need for change. Key points in drafting a latency-specific SLA include ensuring that:

- service levels are comprehensive and cover the whole system such that all the service levels, for all the components together, create one effective service level for the whole round-trip;

- service levels for each component tie precisely into each other such that at the point where one service level ends, the next one in the chain begins;

- the SLA reflects the role that the relevant component plays within the entire round-trip. A failure to perform by one small link in the chain may have multiple knock-on effects, which lead to rises in latency. Each contract must reflect its part within the bigger picture, and this can be done by detailing the exact role of the relevant component, and by measuring the impact of any failure in context of the whole round-trip;

- the SLA is as flexible as possible, including an ability to allocate all available service credits to problem areas quickly so as to focus supplier resources at the point which is slowest at any given moment; and

- service levels are expected to tighten quickly, with suppliers accepting regular upward reviews.
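The sketch below shows one way, among many, that a latency SLA covering both speed and consistency might be expressed and checked. It assumes, for illustration only, that speed is measured as 99th-percentile latency, that consistency is measured as the standard deviation of samples ("jitter"), and that service credits are shared between components in proportion to how far each exceeds its own latency budget. The thresholds and component names are hypothetical; any real methodology would need to be agreed with IT and the supplier.

```python
from dataclasses import dataclass
from statistics import pstdev, quantiles


@dataclass
class LatencySLA:
    target_p99_ms: float  # service level: credits accrue above this
    max_jitter_ms: float  # consistency: maximum standard deviation of samples
    floor_p99_ms: float   # performance floor: p99 above this is a material breach


def assess(samples_ms: list, sla: LatencySLA) -> dict:
    """Classify a measurement period against the SLA (needs at least 2 samples)."""
    p99 = quantiles(samples_ms, n=100)[98]
    jitter = pstdev(samples_ms)
    if p99 > sla.floor_p99_ms:
        status = "material breach"
    elif p99 > sla.target_p99_ms or jitter > sla.max_jitter_ms:
        status = "service credit"
    else:
        status = "met"
    return {"p99_ms": p99, "jitter_ms": jitter, "status": status}


def allocate_credits(credit_pool: float, component_p99_ms: dict,
                     component_budget_ms: dict) -> dict:
    """Share a credit pool across components in proportion to their budget overruns."""
    overrun = {c: max(0.0, component_p99_ms[c] - budget)
               for c, budget in component_budget_ms.items()}
    total = sum(overrun.values())
    if total == 0:
        return {c: 0.0 for c in overrun}
    return {c: credit_pool * o / total for c, o in overrun.items()}


if __name__ == "__main__":
    sla = LatencySLA(target_p99_ms=2.0, max_jitter_ms=0.5, floor_p99_ms=5.0)
    print(assess([1.0] * 50 + [3.0] * 50, sla))
    print(allocate_credits(100.0, {"feed": 2.0, "risk": 4.0}, {"feed": 1.0, "risk": 1.0}))
```

Allocating credits by overrun is one way of directing supplier attention to whichever component is slowest at a given moment; the per-component budgets would also need to add up to the target for the whole round-trip.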

Conclusion

As technology has reached the point at which it can effectively perform most functions required of it, performance, including the degree of latency experienced, increasingly becomes the key differentiator between services. In particular industries, such as financial services, latency is already a key focus, but it is likely that this focus will spread to many other sectors. Lawyers contracting for technology in the sectors already heavily affected by latency are familiar with the issues and are re-thinking traditional IT contracts to suit the new environment. At the very least, we suggest that lawyers contracting for technology in other sectors add a new question to their standard pre-contract commercial checklists and establish whether or not latency is an issue before reviewing the next contract. As long as lawyers are ready to adapt to the results of this re-think, they will be able to provide real value to their commercial clients. Watch this space.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.