This blog: briefly introduces the technical and business context of AI as a Service ("AIaaS") (para. 1); works through a simple introductory example (para. 2); and considers some of the attendant legal issues (para. 3). It is an extract from v4.0 of our Legal Aspects of Artificial Intelligence White Paper, available here.

1. The AIaaS platform ecosystem.

For all its technical novelty, AI is no exception to the trend for rapid commoditisation in cloud services. The big cloud vendors (Amazon Web Services, Microsoft (via the Azure cloud platform) and Google (via Google Cloud Platform), among a handful of others) offer an expanding range of standardised, pre-configured AI-enabled services. Examples include services which enable app or website owners to deploy chatbots; financial services and insurance companies to detect online fraud; and research scientists to derive insights from unstructured medical data.1

Complementary trend: Service-Oriented Architecture ("SOA") and microservices. The decline in popularity of monolithic software architectures, together with the emergence of SOA and microservices, is a complementary trend which has also stimulated the adoption of cloud services like AIaaS. In monolithic software architectures, an application is built and deployed as a single unit. By contrast, with SOA and microservices, software applications are composed from small, discrete units of functionality – for instance, a text translation tool or an image recognition function. For software vendor Red Hat, SOA and microservices are "widely considered to be one of the building blocks for a modern IT infrastructure"; their rise makes it cheaper and easier to build, integrate and deploy AIaaS functionality within a larger application.2

Advantages of commoditised AI services. The commoditisation of AI functionality in AIaaS can offer businesses significant advantages:

- avoid the upfront capital cost of IT infrastructure required to deploy AI and ongoing maintenance;

- 'pay as you go' model helps avoid inefficiencies in underutilised hardware/software investments;

- avoid the need to train or hire specialist personnel;

- pre-trained AI models can be used 'out of the box' or with minimal additional training or configuration; and

- ease of integration with other elements of a business's cloud-hosted IT stack.

2. AIaaS: simple introductory example

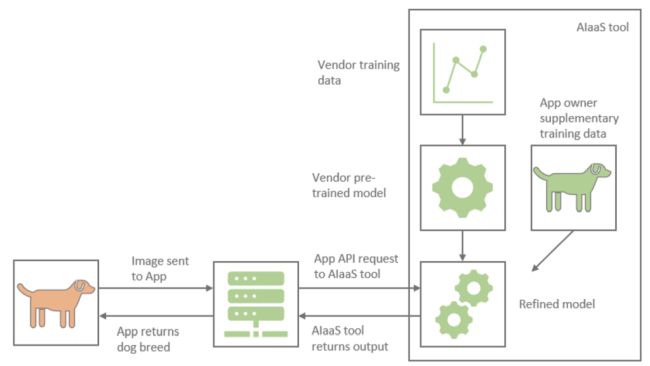

Example: image recognition app that uses AIaaS. Figure 1 shows, as a simplified example, a mobile app that enables a user to identify a breed of dog by taking a photo in the app. The app uses a cloud-based AIaaS image recognition tool.

Figure 1: An app uses an AIaaS image recognition tool to identify dog breeds

In the example in Figure 1, the end user takes a photo of a dog using the app on a mobile device. The app then submits the image to a cloud-based AIaaS tool for analysis via an API request.3 The image is then processed by a model which has been specifically calibrated to recognise different breeds of dog (the "refined model").
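To make the API request step more concrete, the following is a minimal sketch in Python of how the app might package a photo for the AIaaS tool and read back the result. It is illustrative only: the endpoint URL, the JSON field names (`image_base64`, `predictions`, `breed`, `confidence`) and the bearer-token header are assumptions made for this example and do not reflect any particular vendor's API. Nothing is sent over the network; the code only builds the request and parses a response in the assumed format.

```python
import base64
import json
import urllib.request
from typing import Tuple

# Hypothetical endpoint for illustration only; not a real vendor URL.
AIAAS_ENDPOINT = "https://aiaas.example.com/v1/models/dog-breeds:predict"


def build_breed_request(image_bytes: bytes, api_key: str) -> urllib.request.Request:
    """Package a photo as a JSON API request (the request is built, not sent)."""
    # Binary image data is base64-encoded so it can travel inside JSON.
    payload = {"image_base64": base64.b64encode(image_bytes).decode("ascii")}
    return urllib.request.Request(
        AIAAS_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed authentication scheme
        },
        method="POST",
    )


def parse_breed_response(body: bytes) -> Tuple[str, float]:
    """Return the most likely (breed, confidence) from an assumed response format."""
    predictions = json.loads(body)["predictions"]
    best = max(predictions, key=lambda p: p["confidence"])
    return best["breed"], best["confidence"]
```

In a real app, the request would be sent with `urllib.request.urlopen` (or an HTTP client library) and the returned body passed to `parse_breed_response`. Both the image and any result leave the device at that point, which is where the contractual and privacy questions discussed in para. 3 arise.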

The app developer has taken advantage of the tools within the cloud-based AIaaS platform it uses to develop the refined model quickly and cheaply, instead of starting from scratch. The app developer began by using a pre-trained image recognition model 'off the shelf' in the AIaaS platform. The pre-trained model had been trained on generic public datasets:4 it worked well as a generic image recognition tool, but lacked sufficient dog breed data to operate effectively as a dog breed classification tool. The app developer therefore fine-tuned the pre-trained model with supplementary training data – in this case the app developer's proprietary database, consisting of several thousand images of dogs, each labelled according to the specific breed. Having been trained on this supplementary data, the refined model can determine the dog breed in a given input image with a satisfactory degree of accuracy and reliability.

This method of fine-tuning a generic model using specialist data is known as 'transfer learning' and can be distinguished from both 'full training', where a model is initialised with random weights and trained entirely on the app developer's data, and using a model 'off the shelf', where the pre-trained model is used with no additional training data.
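The distinction can be illustrated with a deliberately tiny sketch in Python. It is a toy under stated assumptions, not how an AIaaS platform implements fine-tuning: the 'pre-trained' layer is a pair of made-up fixed weights, each 'image' is just two numbers, and the four-item dataset stands in for the developer's labelled photos. It shows a common form of transfer learning, in which the pre-trained weights stay frozen and only a small new 'head' is trained on the specialist data.

```python
import math

# Stand-in for the pre-trained model's layers. These weights are "frozen":
# fine-tuning never changes them. The values are made up for illustration.
FROZEN_WEIGHTS = [[0.9, -0.2], [-0.3, 0.8]]


def extract_features(pixels):
    """Frozen layers: turn a raw input into generic features (ReLU units)."""
    return [max(0.0, sum(w * x for w, x in zip(row, pixels))) for row in FROZEN_WEIGHTS]


def predict(head, pixels):
    """Trainable head: logistic regression on top of the frozen features."""
    feats = extract_features(pixels)
    z = head["bias"] + sum(w * f for w, f in zip(head["weights"], feats))
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune(head, labelled_data, epochs=200, lr=0.5):
    """Transfer learning: update only the head, using the labelled data."""
    for _ in range(epochs):
        for pixels, label in labelled_data:
            feats = extract_features(pixels)
            error = predict(head, pixels) - label  # log-loss gradient w.r.t. z
            head["weights"] = [w - lr * error * f for w, f in zip(head["weights"], feats)]
            head["bias"] -= lr * error
    return head


# Toy stand-in for the proprietary dataset: label 1 = breed A, label 0 = breed B.
data = [([1.0, 0.1], 1), ([0.9, 0.2], 1), ([0.1, 1.0], 0), ([0.2, 0.9], 0)]
head = fine_tune({"weights": [0.0, 0.0], "bias": 0.0}, data)
```

In these terms, 'full training' would also learn the values in `FROZEN_WEIGHTS`, starting from random numbers, while using the model 'off the shelf' would skip `fine_tune` altogether and rely on whatever the vendor's model already predicts.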

The example in Figure 1 is an oversimplification and, depending on the app architecture choices the app developer makes, the app can be structured in many ways. For instance, more or less of the app could be deployed in the cloud. Additional microservices could be bolted on to the app to add extra functionality as the platform grows: e-commerce functionality (pet food, pet accessories, etc.) or social media integrations could be added, for instance. In this way, the AIaaS element of an app would form part of a larger whole.

3. AIaaS: legal issues

Cloud vendor's standard terms. Consistent with the commoditised nature of AIaaS, customers using popular cloud-based AIaaS platforms may not be able to negotiate commercial and legal terms with the vendor, except for the most significant projects – either in terms of the value of the deal or its novelty or strategic importance for the vendor. If this is the case, the operational risk the customer takes on can often be underappreciated. Whether a customer's entire application or business system is hosted by a third-party cloud vendor, or only a small but important part of it, unfavourable terms (e.g. a right for the vendor to terminate for convenience) could lead to significant business disruption for the customer. If it is not possible to negotiate terms, the customer could look to mitigate risk operationally – e.g. estimating time and cost required to implement a system with an alternative vendor.

AIaaS and privacy issues. As with cloud services generally, cloud-hosted AIaaS has been affected by the fallout from the CJEU's 2020 'Schrems II' decision. The issues surrounding international transfers of personal data in AIaaS are much the same as for other areas of cloud computing with an international transfers element. In AIaaS, however, the privacy risks are exacerbated by stricter rules that apply to automated decision-making in the GDPR (in both its UK and EU flavours). Where an AIaaS processes personal data, additional disclosures may be required in privacy policies. By Art. 13(2)(f) of UK/EU GDPR, this would include "meaningful information about the logic involved" in the AIaaS. However, because the customer has outsourced core AI aspects to the AIaaS vendor, the required information may not be readily available. The risks associated with this rather convoluted compliance position are increased if the AIaaS vendor asks (as they often do) that the customer give a contractual commitment to provide all necessary privacy information to the relevant data subjects. The implications of the general prohibition on automated individual decision-making in Art. 22 UK/EU GDPR are considered in more detail at D.25 of the White Paper.

Automated fairness and bias mitigation tools. The big cloud vendors now offer pre-packaged tools as part of their AIaaS offerings which aim to help users improve fairness and mitigate bias in AI modelling. One example is Microsoft Azure's Fairlearn product.5 Fairlearn is a suite of tools for assessing fairness-related metrics in AI models, together with a range of algorithms which can help mitigate unfairness in those models. Similarly, AWS's SageMaker Clarify product aims to help users identify potential bias both in pretraining data and when an AI model is used in production.6 SageMaker Clarify "also provides tools to help you generate model governance reports that you can use to inform risk and compliance teams, and external regulators."7
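As an indication of what a 'fairness-related metric' can look like in practice, the sketch below computes one widely used measure by hand in Python: the demographic parity difference, i.e. the gap between the highest and lowest rates at which a model returns a positive outcome for different groups. It is a plain-Python illustration and does not use, or reproduce the interface of, any vendor toolkit; the predictions and group labels are made-up data.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up model outputs (1 = positive outcome) for two hypothetical groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, groups)
```

Here group "a" receives a positive outcome in three cases out of four and group "b" in one out of four, so the gap is 0.5. A figure like this flags a disparity for further investigation; it does not by itself establish whether the model is unfair or unlawful.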

While fairness toolkits such as these may serve as a useful starting point for addressing fairness and bias issues in the context of AIaaS, they are not (as the big cloud vendors generally make clear) a one-stop-shop for compliance. Microsoft notes in a 2020 white paper on Fairlearn and fairness in AI that "prioritizing fairness in AI systems is a sociotechnical challenge. Because there are many complex sources of unfairness... it is not possible to fully 'debias' a system or to guarantee fairness".8 Similarly, AWS states that "the output provided by Amazon SageMaker Clarify is not determinative of the existence or absence of statistical bias".9

Footnotes

1. See, for example: AWS, 'Machine Learning on AWS' https://tinyurl.com/27p87v2n; Azure, 'Azure Cognitive Services: Deploy high-quality AI models as APIs' https://tinyurl.com/2zpd4zuy; and GCP, 'AI and Machine Learning' https://tinyurl.com/2bk4kjem.

2. Red Hat, '6 overlooked facts of microservices', 4 February 2021 https://tinyurl.com/3ajp7yf8.

3. An API is a set of standardised rules that allows data to be communicated between different pieces of software: in this case, the app and the AIaaS tool.

4. A common example is ImageNet https://tinyurl.com/bddwuttw, an influential image database containing around 14m images at the time of writing.

5. See here: https://tinyurl.com/2we34hse.

6. See here: https://tinyurl.com/nssuuzx9.

7. Amazon Web Services, Amazon SageMaker: Developer Guide, https://tinyurl.com/37yavf64 'Image Classification Algorithm', p.8.

8. Microsoft et al., Fairlearn: A toolkit for assessing and improving fairness in AI (22 September 2020) https://tinyurl.com/5n6shat6.

9. AWS Service Terms https://tinyurl.com/ycxdx8m3 (version dated 2 December 2022), Section 60.5.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.