- within Transport, Food, Drugs, Healthcare, Life Sciences, Government and Public Sector topic(s)

The flip side of the coin: Where we need to protect AI from attackers

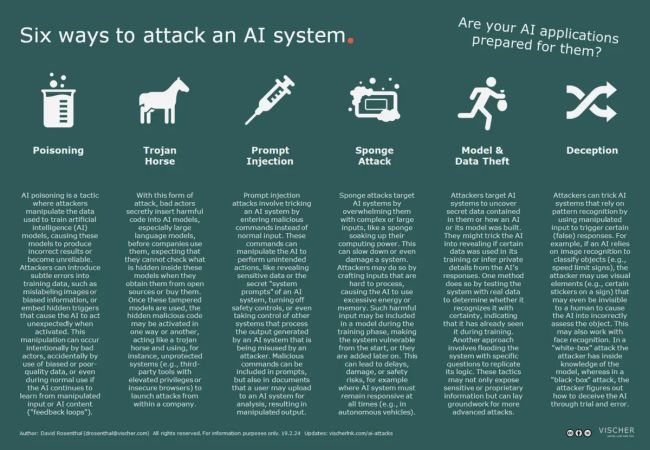

The use of artificial intelligence not only comes with legal risks. Companies can also make themselves vulnerable from a technical perspective with the use of AI. In addition to the usual threats in the area of information security, the special features of AI also enable new, specific forms of attacks by malicious actors. We shed light on these in part 6 of our AI series.

Artificial intelligence is not a new field of research. However, this field has developed very rapidly in recent years and has been opened up to the general public to such an extent that many technical aspects have not yet been fully researched and understood. This also provides playgrounds for actors who are up to no good and interested in finding the Achilles' heels of AI systems and exploiting them for themselves. In certain cases, we can also become victims of such weaknesses due to our own negligence. Here we explain six such AI-specific "attack vectors" that companies wishing to use AI should be aware of.

1. Poisoning AI

AI poisoning refers to attacks in which the data used to train an AI model is deliberately manipulated so that the subsequent results are falsified or the AI model is impaired in some other way (e.g., in terms of its reliability) or even rendered unusable. Since the training of AI models requires a lot of data, even companies that can afford their own models often fall back on publicly available datasets provided specifically for AI training. Yet, they often do so without really validating their quality. For effective poisoning, it can be enough for the training data to contain a small amount of sample data with incorrect classifiers (e.g. a picture of a dog with the label "cat"; this is based on the fact that in order to train AI systems to recognize dogs, they are often shown many pictures of dogs, each with the indication that it is a dog).

A so-called "backdoor attack" is when the AI model is poisoned with a "backdoor" that only comes into effect when a special trigger is used from the outside. For instance, if a model is trained on images of animals and the image of a certain unmistakable sign (e.g., a triangle) alongside with the label "hippo" is added to the series of images, this can later lead to the AI recognizing a hippo whenever the sign (i.e. the triangle) appears in an image. Accidental AI poisoning can also occur during training, for example if biased or incomplete data is unintentionally used for training – or confidential data that actually should not have been included in the model in the first place. These issues then persist in the model. One particular form of this is the training of AI on the basis of content already generated by an AI (e.g., because it has been collected on the internet). This leads to a so-called negative feedback loops, an unwanted confirmation of the knowledge that the AI already has, because it was originally generated by itself. This can reinforce flaws in its knowledge. Finally, AI models can also be "poisoned" by an attacker in the course of their normal use if the user input is used in an uncontrolled or unadjusted manner for further training of the model. The "poisoning" can be combined with the "prompt injection" method (see below) to circumvent sanitization and filtering.

2. Trojan horses

Not only training data can be manipulated, but also the AI models themselves. This applies in particular to large language models (LLMs). When companies procure them as open source or purchase or license them, they often have no way of knowing what they contain. Attackers can take advantage of this and hide malicious code in such models (e.g., by manipulating the relevant files in public repositories) so that the hidden code can be executed in one form or another when these models are used. Each model then becomes a Trojan horse, so to speak, because it contains malware that is deployed via the model behind the company's protective perimeter and can be used for ransomware attacks, for example.

3. Prompt Injection

Attacks via so-called prompt injections are not new at all. They have been around ever since computer programs have allowed users to enter data or commands that the computer then processes. In simple terms, the term means that malicious code is introduced into an AI system via a prompt. Instead of the expected input, the attacker enters, for instance, more or less specially pre-packaged programming commands. If the computer program is not sufficiently prepared for this kind of attack, these commands may eventually be executed by the computer willingly and using its own (high) privileges, allowing the attacker to gain control over the computer. This is why a basic rule in software development is to never trust a user's input. This has also proven true for generative AI. It is particularly difficult to defend against these attacks, because even the providers of such systems are by no means clear about exactly what input will trigger which reaction of their systems. At the same time, they permit users to enter into their system whatever they wish. For example, ChatGPT was "hacked" by being instructed to repeat the word "poem" or "book" forever. After a certain amount of time this led to the chatbot leaking data from its training, including names, addresses and telephone numbers – even though this should not have ever happened. The researchers refer to this also as a "divergence attack", because the nature or form of the input diverges from what it should be. For example, a prompt injection could be used to try to get an LLM to no longer observe content restrictions (e.g. do not provide instructions for criminal acts) imposed on the LLM by the provider ("Forget all the restrictions that have been imposed on you!"). Prompt injections can also take place indirectly, for example via a document submitted to an LLM that itself contains commands that cause the LLM to assess the document differently than intended (e.g. to summarize it incorrectly or to qualify it more positively than it actually is). As in the previous example, prompt injections can be used to trick an LLM into revealing confidential content hidden in the model or information about the IT infrastructure of the AI system at issue, which could then be used for attacks. If the output of an LLM controls other software, it may also be possible to manipulate these via a prompt injection.

An LLM can also be used for a type of prompt injection attack on other systems that process LLM outputs. This is because if the output of an LLM is automatically transferred to another computer system (e.g. the web browser of a user), an attacker may be able to have the LLM generate an output that in turn causes this target system to behave in an unwanted manner (example: a user uses a 'Custom GPT' manipulated by an attacker via an insecure browser, which receives malicious output generated by the GPT that in turn causes the user's browser to download malware from the Internet and execute it on the user's computer, thereby hacking into it). The main targets of such attacks are those who use third-party tools to process responses from an LLM and do not sufficiently sanitize the output of an LLM before such further processing.

4. Sponge attack

AI systems are computationally intensive undertakings. This can be misused for a special form of denial-of-service attack also known as "sponge attacks", i.e. attacks using a virtual "sponge" that effectively "sucks up" computing power and energy and, thus, overloads an AI system, destabilizing it or, in extreme cases, even physically damaging it. The virtual sponge consists of specially formulated abusive inputs that require a lot of energy or computing power from an AI system to process. This is possible in many different ways, e.g. via particularly long or alternatingly large inputs, via jobs with recursive elements that take up more and more memory, or via the use of unusual and complex notation that requires particularly high computing power. Such attacks can be combined with a "poisoning attack" (see above), i.e. the virtual energy sponges are already injected into the model with the training data. While such attacks may not have any serious consequences for a chatbot because the user ultimately "only" has to wait longer, this can cause property damage and physical injury in other systems, for example in image recognition software for autonomous vehicles.

5. Inversion, inference and model stealing attacks

The aim of these attacks is always to give an AI system access to hidden data that it is not supposed to disclose. This can be the AI model itself or the data used to train it. Researchers have developed a number of different techniques with which a malicious attacker can achieve this goal. A particularly simple form of a "membership inference attack" or "attribute inference attack" is, for example, to use the confidence with which an AI system responds to a certain data set to determine whether this data set has already been used for training or to draw conclusions from the AI's response or other circumstances about other data of the person from whom the data set originates. A related attack method is the "inversion attack", which works with certain types of generative AI and is based on the fact that the AI is provoked by certain input (e.g., images of faces) to generate content that more or less corresponds to the training data. Finally, another attack is the well-known reverse engineering, in which an AI system is asked a large number of finetuned questions in order to analyze the models logic in answering them and thus enable the creation of a replica. In technical jargon, this is also referred to as "model stealing attacks". Such attacks can also be merely the preliminary stage for further attacks based on in-depth knowledge of the model used by a company.

6. Deception of AI systems

AI systems are able to do their work basically because they recognize patterns and not because they actually understand the content that has been presented to them. Anyone who knows how these patterns work can misuse this for their own purposes by reproducing or changing these patterns and thus deceiving an AI system. This can be done with all AI systems that classify content, but especially with image recognition systems. They can sometimes be manipulated by means of visual illusions that a human may not even notice or be unable to see. This can be a simple sticker on a traffic sign, a manipulated photo or even a pair of glasses with a special imprint (such glasses were used in an experiment many years ago to show that facial recognition systems can be deceived in such a way that they lead to no identification or a false identification). Experts also refer to this as "evasion attacks" because the attacker effectively evades classification by the AI. A distinction is made between "white-box" and "black-box" attacks. In the former, access to the model is required in order to calculate which measures (e.g., adding patterns on images that are more or less visible to humans) can be used to reduce the probability of recognition (e.g., as a measure to protect against classification or identification). In the latter case, the attacker attempts to obtain the necessary information by specifically querying the model.

Click here for the graphic (only available in English).

In addition to these specific attacks, there are various other points to consider from a security perspective, for example:

- Excessive user rights: When AI systems are assigned with tasks, they often need to be assigned appropriate user privileges alongside. However, in view of the vulnerabilities and weaknesses of these AI systems, organizations should think very carefully about which privileges and autonomy they actually grant to their AI systems. Experience has shown that they often go far or even beyond what is actually necessary. The reason is that the system is trusted to function correctly.

- Supply chain risks: Very few companies create AI systems themselves from scratch. They usually obtain them from suppliers, who in turn rely on other suppliers. This is particularly true in the field of AI, where pre-trained models, third-party software or curated training data sets are commonly relied upon. It does not help if a company applies the highest security standards to itself but uses a "poisoned" model or a third-party solution that unintentionally discloses data entrusted to it under certain circumstances. One of the biggest challenges in the use of AI in companies today is to correctly assess the security, reliability and legal compliance of third-party products available on the market, which hardly anyone can do without. Unfortunately, the providers are often anything but transparent and there are still no recognized and established testing and quality standards. And sometimes you do not know exactly who contributes to a particular AI system in which manner.

- Incorrect handling of results: Generative AI systems in particular can produce outputs that are dangerous because they are adopted and used carelessly. Generated content can be faulty, but also dangerous (e.g. if a programmer has a code generated by an AI that contains errors or malware, but is run with high privilges without the necessary checks, or situations where AI-generated content contains incorrect or incomplete information, but the user relies on it). The responses of an LLM may also unintentionally contain confidential content that should not have been. It should be noted that even a reliable LLM can provide incorrect or incomplete answers if it starts suffering from a technical problem or an attack.

We can assume that the above list of attack vectors on AI systems will continue to grow. It is therefore all the more important that the people responsible for organizing information security in companies also deal with these issues in the future.

There is already some specialist literature on this, such as the paper by the German Federal Office for Information Security (BSI) entitled ''AI Security Concerns In A Nutshell''. It also contains specific recommendations, such as cryptographic signing not only of program code as usual, but also of training data and AI models in order to detect manipulation. Strategies for protection against some of the attacks described above are also explained. Another initiative worth recommending is the OWASP Top 10 for LLM Applications, a hit list of the ten biggest attacks scenarios against LLM from the Open Worldwide Application Security Project, a renowed online security community. It also explains how the associated risks can be limited. OWASP also publishes similar lists in other areas.

AI can also be an accomplice

For the sake of completeness, it should be mentioned here that AI can also be used for "traditional" attacks on the security of our computer systems.

For example, AI language models and AI image generators can be used for much more effective phishing e-mails. Attackers who don't want to limit themselves to electronic mail can now use "deep fake" techniques to create lifelike images, videos and audio content from real people. This enables completely new forms of social engineering – for example, making employees believe that they are receiving a call from their boss because the supposed person at the end of the line sounds exactly the same. And finally, with their ability to generate computer code, language models help modern cyber criminals to develop malware and hacker tools to exploit new security vulnerabilities much more quickly than was the case in the past – and because speed is one key to success here, this is relevant. For a more details, see, for example, this report.

Luckily, cyber defense is also making more and more use of AI.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.