AI in the company: 11 principles – and an AI policy for employees

Companies need rules when using AI. But what should they be? We have worked through the most important requirements of current law and future AI regulations and drafted 11 principles for a legally compliant and ethical use of AI in the corporate environment. We have also created a free one-page AI policy. This is part 3 of our AI series.

Many call for more regulation of artificial intelligence, even if opinions differ as to what really makes sense in that regard. You should be aware, though, that a lot of rules already exist and further regulations are to be expected. If a company wants to use AI in a legally and reputationally sound manner, it should take into consideration the following three elements:

- Applicable law: In daily business, the focus will be on data protection law, with some aspects of copyright law and laws against unfair competition. Depending on the industry, there are also rules concerning official and professional secrecy and industry-specific regulations dealing with the outsourcing of business functions to IT service providers (which is the case for most AI applications). Contracts may contain further restrictions, for example on what a company may use the data from a customer project for, even if data protection law does not apply because no personal data is processed.

- Future regulation: Most attention is currently focused on the European Union's AI Act. It prohibits a number of activities (e.g. emotion detection at the workplace), sets forth a number of rules for providers of "high-risk" AI systems (e.g. concerning risk management, quality assurance and product monitoring) and contains some obligations for certain other AI systems (e.g. transparency requirements regarding deep fakes). For most companies, it will be of lesser importance, at least if they do not offer or develop AI systems, but certain obligations will also be imposed on users. Companies in Switzerland should also take a look at the Council of Europe's planned AI Convention, as it will likely have to be implemented in Switzerland one day and will probably have a significant influence on AI regulation there. We believe it concisely sums up the key principles that also underlie the AI Act and that are really important (the second draft is currently available). We will have a separate post on the AI Act as part of our blog series.

- Ethical standards: We refer to them as supra-legal requirements to which companies submit themselves when dealing with AI, for example by going further in terms of transparency than the law (especially data protection law) currently requires. These requirements are naturally different for every company, as there are no universal ethics for AI. In practice, it is ultimately all about the expectations that the various stakeholders (employees, customers, the public, shareholders, etc.) of a company have as to how it should behave in certain matters. Each company must discuss, establish and define for itself what is appropriate here. What may be expected from a large corporation is not necessarily required of a start-up or SME. Advice on how to operationalize AI ethics in the context of a compliance system can be found here (only in German) and a webinar here; both relate to data ethics, but the approach is the same in the case of AI.

Eleven principles for the use of AI

We have put together the most important points from all three areas in 11 principles. These include not only guidelines on how AI can be used correctly in the company, but also measures to ensure compliance with the rules and the related management of risks. We will address the assessment of the risks of AI projects and issues of AI governance (i.e. the procedures, tasks, powers and responsibilities that should be regulated in a company to keep developments and related risks under control) in further articles in this series.

These 11 principles are:

- Accountability: Our organization ensures internal accountability and clear responsibilities for the development and use of AI. We act according to plan, not ad-hoc, and keep records of our AI applications. We understand and comply with legal requirements.

- Transparency: We make the use of AI sufficiently transparent where this is likely to be important for people in relation to their dealings with us, for example where they would otherwise not be aware that they are interacting with AI, where AI plays a significant role in important decisions that affect them, and in the case of otherwise deceptive "deep fakes".

- Fairness and non-harm: Our use of AI should be reasonable, fair and non-discriminatory for others. We pay attention to accessibility, a level playing field for us and those affected and to avoiding harm wherever possible – including to the environment. We are more cautious when it comes to vulnerable people.

- Reliability: We ensure that our AI systems work as reliably as possible and produce results that are as correct and predictable as possible. We take precautionary measures in case they do not.

- Information security: We take measures to ensure the confidentiality, integrity and availability of AI applications and their information (including personal data, own/third-party secrets). We carefully regulate cooperation with third-party providers.

- Proportionality and self-determination: We only use personal data where necessary and – where appropriate – leave the decision as to whether and to what extent AI is used to the people concerned. If AI is involved in important decisions, we check whether we must or should provide for human intervention for those affected.

- Intellectual property: We observe copyright and industrial property rights in our use of AI and only use content and processes for which we have the necessary authorization. We also protect our own content.

- Rights of data subjects: We ensure that we can grant data subjects their rights of access, correction and objection, despite our use of AI.

- Explainability and human supervision: We only use AI systems that we can understand and control and that meet our quality requirements. We monitor them to detect and rectify errors and undesired effects.

- Risk control: We understand and manage the risks associated with our use of AI, both for our organization and for individuals. We update our risk assessments on a regular basis.

- Prevention of misuse: We implement measures to combat the misuse of our AI applications. We train our employees in the correct use of AI.

Each of these principles is described above only in abbreviated form. In a separate paper for our clients, we explain for each principle what it means in more detail and in more specific terms, how the company should behave accordingly, and why. We have described the individual underlying legal sources with references to the applicable Swiss law, the GDPR and the draft of the AI Convention so that it is clear what already applies today and what is still to be expected. The AI Act will follow.

The paper can be obtained from us. We use it when we work with our clients in workshops to develop their own AI frameworks and regulations, i.e. it serves as a basis for an internal discussion about the appropriate guidelines that the company wants to set for its own use of AI in everyday life and in larger projects. For such a discussion, we recommend holding a workshop with various stakeholders in the company in order to arrive at a common understanding of the use of AI in the company. This is important because, in our experience, AI projects need guidelines according to which they are implemented, and those who want to carry out these projects need predictability. Our paper can then be incorporated directly into a more detailed directive or strategy paper with the necessary adjustments. This usually occurs in three steps:

- This paper (or other preparatory work) raises the questions that arise when it comes to AI and law and ethics, for example the question of transparency or the self-determination of the persons concerned. The aim here is to ensure that the stakeholders in the company understand the outside requirements and expectations so that a structured discussion can take place.

- On the basis of these questions, a company must determine in a second step which answer to each of these questions suits the company and is expected of it by law and beyond, for example how far it wants to go in terms of transparency. This is where the company's own standards or values are defined in relation to the various questions or topics. It will also typically result in framing those values or aspects that are particularly important to the company. This is important because those who later want to carry out AI projects need to know the standards by which their activities will be measured (foreseeability).

- On the basis of these standards or values, specific rules can then be defined if necessary (i.e. what should be allowed, what not and what only in exceptional cases). In addition, questions can be defined with which projects can be quickly assessed as to whether they bear particular risks (and therefore need to be examined in more detail) and, if not, whether they raise any "red flags" that require closer examination. We have developed such "red flag" questions for our risk assessment tool "GAIRA Light", for example. They are available here. This can take some burden off the legal, security and compliance functions by allowing the business to assess for itself, as part of a multi-stage triage procedure, whether more in-depth reviews are required for its projects.
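To illustrate the triage idea in the third step, the following is a minimal sketch in Python, not a definitive implementation. The question texts, the `Outcome` names and the `triage` function are our own assumptions for illustration only; they are not the actual "GAIRA Light" questions. The sketch first checks for particular risks and, only if there are none, for red flags. Unanswered questions are deliberately treated as "yes" so that gaps lead to more review rather than less.

```python
from enum import Enum


class Outcome(Enum):
    IN_DEPTH_REVIEW = "particular risk: examine in more detail"
    CLOSER_EXAMINATION = "red flag: closer examination required"
    NO_FURTHER_REVIEW = "no particular risk and no red flag"


# Hypothetical screening questions (assumptions for illustration only).
PARTICULAR_RISK_QUESTIONS = (
    "Does the AI play a significant role in important decisions about people?",
    "Is sensitive personal data used as input?",
)
RED_FLAG_QUESTIONS = (
    "Will people interact with the AI without being told so?",
    "Is third-party content used without a checked authorization?",
)


def triage(answers: dict[str, bool]) -> Outcome:
    """Two-stage triage: particular risks first, then red flags.

    A missing answer counts as "yes" (conservative default).
    """
    if any(answers.get(q, True) for q in PARTICULAR_RISK_QUESTIONS):
        return Outcome.IN_DEPTH_REVIEW
    if any(answers.get(q, True) for q in RED_FLAG_QUESTIONS):
        return Outcome.CLOSER_EXAMINATION
    return Outcome.NO_FURTHER_REVIEW


if __name__ == "__main__":
    # Example: the business answers "no" to everything except one red flag.
    answers = {q: False for q in PARTICULAR_RISK_QUESTIONS + RED_FLAG_QUESTIONS}
    answers[RED_FLAG_QUESTIONS[0]] = True
    print(triage(answers).value)
```

In such a setup, the business would answer the questions itself, and only projects that do not end in `NO_FURTHER_REVIEW` would be passed on to the legal, security or compliance functions.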

Of course, the standards and rules include not only substantive issues (i.e. which behavior, functions or other aspects should be allowed in the context of AI projects), but also formal issues (i.e. how can AI projects be assessed and approved as quickly as possible in terms of law, safety and ethics). We already cover them in our 11 principles.

On one page: A policy for using AI at work

Alongside these regulations for AI projects, an organization should not simply prohibit its employees from using AI tools, but should actively make them available where possible. In this way, it can control and regulate their use much better. As we showed in part 2 of our blog series, even the popular tools come in very different flavours, for example in terms of data protection, and a company must check very carefully what it offers to its employees (click here for part 2 of our blog series).

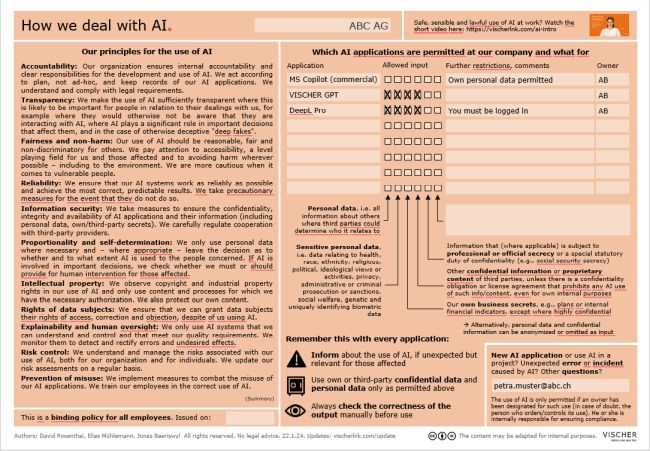

In our view, an AI policy paper does not have to be complicated. To inspire people, we have created a template AI directive for all employees that only takes up one page. On the one hand, it contains a summary of the 11 principles; on the other hand, it tells employees which AI tools they are allowed to use in the company, for which purposes and, above all, with which categories of data (here, data protection as well as confidentiality obligations, the company's own confidentiality interests and copyright restrictions on the use of content must be taken into account). Alongside this, we provide employees with only three core rules for using these AI tools:

- Create transparency where it is necessary

- Use AI tools only with the approved categories of data

- Manually check the output of the AI for correctness
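A company that wants to make the list of permitted tools machine-checkable (for example on an intranet page) could represent it roughly as follows. This is only a sketch under our own assumptions: the tool names, purposes and data categories below are hypothetical placeholders and are not taken from the template policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolApproval:
    purposes: frozenset[str]         # purposes the tool is approved for
    data_categories: frozenset[str]  # categories of data that may be entered


# Hypothetical entries; each company fills in its own tools and categories.
APPROVED_TOOLS = {
    "Example Chatbot (enterprise plan)": ToolApproval(
        purposes=frozenset({"drafting", "summarizing"}),
        data_categories=frozenset({"public", "internal"}),
    ),
    "Example Translator": ToolApproval(
        purposes=frozenset({"translation"}),
        data_categories=frozenset({"public"}),
    ),
}


def is_use_permitted(tool: str, purpose: str, data_categories: set[str]) -> bool:
    """Return True only if the tool, the purpose and every data category are approved."""
    approval = APPROVED_TOOLS.get(tool)
    if approval is None:
        return False  # tools that are not listed are not permitted
    return purpose in approval.purposes and set(data_categories) <= approval.data_categories


if __name__ == "__main__":
    print(is_use_permitted("Example Chatbot (enterprise plan)", "drafting", {"internal"}))
    print(is_use_permitted("Example Translator", "translation", {"personal data"}))
```

Such a check only covers the second core rule (approved categories of data). Creating transparency and manually checking the output remain the responsibility of the employee.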

This policy complements the short video that we presented in Part 1 of this series, which is available for free in three languages (see here).

Click here for the PowerPoint file

At the bottom right of the policy, to ensure proper governance, we also stipulate that new AI applications are only permitted if they have an owner and that owner ensures compliance, i.e. ultimately compliance with the 11 principles (or with what the company has defined as its principles).

We provide our one-page policy as a PowerPoint file (in English but also here in German) so that each company can adapt it to its own needs, in particular to indicate which AI tools are permitted internally and for what purpose. We have included some examples. If you need advice here, we will of course be happy to help.

Designate an internal AI contact point

The policy also provides room for indicating the internal contact point responsible for handling inquiries concerning AI compliance topics. Where no one has yet been appointed, we suggest that this be the data protection officer or the legal or compliance department. Even if the law does not formally prescribe such a body, it makes sense for a company to appoint one person or a group of persons to serve as such a contact point and take care of the compliant use of AI (see also our upcoming post on AI governance as part of this series).

Employees should not only be instructed to have the conformity of their new AI projects and tools checked before using or implementing them; we also recommend that they be required to report relevant mishaps and errors, especially with regard to sensitive applications. This helps a company to improve its use of AI and keep its risks under control.

We further recommend that our clients maintain a register of AI applications, similar to what many companies already do for their data processing activities as set out under the revised Data Protection Act and the GDPR; the two registers can also be combined. This point is included in principle no. 1, which deals with (internal) responsibilities for the use of AI.
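What such a register might look like in its simplest form is sketched below. This is an illustration under our own assumptions, not a prescribed format: the field names (including the optional reference to an existing record of processing activities) and the example entries are hypothetical. The helper function lists applications without an owner, reflecting the rule in our policy that new AI applications are only permitted if they have one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AIApplication:
    name: str
    purpose: str
    owner: Optional[str] = None                  # person responsible for compliance
    processes_personal_data: bool = False
    processing_record_ref: Optional[str] = None  # link to the data processing register, if combined


def applications_without_owner(register: list[AIApplication]) -> list[str]:
    """Return the names of all registered applications that have no owner."""
    return [app.name for app in register if not app.owner]


if __name__ == "__main__":
    # Hypothetical example entries.
    register = [
        AIApplication("Contract summarizer", "summarizing contracts", owner="Legal Operations"),
        AIApplication("Support chatbot", "answering customer inquiries",
                      processes_personal_data=True, processing_record_ref="ROPA-017"),
    ]
    print(applications_without_owner(register))
```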

In our next article, we will look at how risks are assessed and documented when using AI, in both smaller and larger projects. Thereafter, we will explain the governance a company should provide for AI.

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.